It's possible to "hold its hand" so that it does better. I gave the following prompt:

Let's translate some text into Morse code, step by step.

Start with some text:

THIS IS A TEST

Split it up into letters.

T H I S / I S / A / T E S T

Translate each letter into Morse code:

- (T) .... (H) .. (I) ... (S) / .. (I) ... (S) / .- (A) / - (T) . (E) ... (S) - (T)

Throw away the letters and just keep the Morse code:

- .... .. ... / .. ... / .- / - . ... -

Here's another example:

WHAT IS YOUR NAME

[--- here the prompt ends and GPT-3 output begins ---]

Split it up into letters.

W H A T / I S / Y O U R / N A M E

Translate each letter into Morse code

.-- (W) .... (H) .- (A) - (T) / .. (I) ... (S) / -.-- (Y) --- (O) ..- (U) .- (R) / -. (N) .- (A) -- (M) . (E)

Throw away the letters and just keep the Morse code:

.-- .... .- - / .. ... / -.-- --- ..- .- / -. .- -- .

Now try it yourself!

[--- here the GPT-3 output ends ---]

This is pretty decent. Unfortunately it translates R wrongly and ends up saying WHAT IS YOUA NAME, but at least there are no fish.
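For anyone who wants to check readings like this mechanically, here is a minimal decoding sketch. The table is the standard International Morse alphabet for A-Z; the function name `decode_morse` and the choice to show unknown codes as `?` are my own assumptions, not anything from the prompt.

```python
# International Morse code for the letters A-Z (standard table).
MORSE = {
    "A": ".-", "B": "-...", "C": "-.-.", "D": "-..", "E": ".", "F": "..-.",
    "G": "--.", "H": "....", "I": "..", "J": ".---", "K": "-.-", "L": ".-..",
    "M": "--", "N": "-.", "O": "---", "P": ".--.", "Q": "--.-", "R": ".-.",
    "S": "...", "T": "-", "U": "..-", "V": "...-", "W": ".--", "X": "-..-",
    "Y": "-.--", "Z": "--..",
}
DECODE = {code: letter for letter, code in MORSE.items()}


def decode_morse(morse: str) -> str:
    """Decode space-separated Morse letters, with '/' between words."""
    words = []
    for word in morse.split("/"):
        # Codes outside the A-Z table become '?' so invalid Morse stays visible.
        words.append("".join(DECODE.get(code, "?") for code in word.split()))
    return " ".join(words)


gpt3_output = ".-- .... .- - / .. ... / -.-- --- ..- .- / -. .- -- ."
print(decode_morse(gpt3_output))  # WHAT IS YOUA NAME
```

The only wrong letter is the R: GPT-3 wrote `.-` (A) where `.-.` was needed.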

I tried holding its hand slightly less.

Let's translate some text into Morse code, step by step.

Start with some text:

THIS IS A TEST

Split it up into letters and translate each letter into Morse code:

- (T) .... (H) .. (I) ... (S) / .. (I) ... (S) / .- (A) / - (T) . (E) ... (S) - (T)

Throw away the letters and just keep the Morse code:

- .... .. ... / .. ... / .- / - . ... -

Here's another example:

WHAT IS YOUR NAME

[--- prompt ends, GPT-3 output begins ---]

.-- .... - / .. ... / .- ...- . / -.-- --- ..- / .- / - .... . / -. .. ... .... .-.-.-

[--- end of GPT-3 output ---]

So here it has refused to have its hand held, and the output turns out to say WHT IS AVE YOU A THE NISH which seems kinda inspired by the input but can't resist the lure of fishiness towards the end.

Yep, GPT is usually pretty good at picking up on patterns within prompts. You can also get it to do small Caesar shifts of short words with similar hand-holding.
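For reference, a Caesar shift is just a fixed rotation of the alphabet. A minimal sketch (the function name `caesar_shift` and the example word are mine, purely for illustration):

```python
def caesar_shift(text: str, shift: int) -> str:
    """Shift each ASCII letter by `shift` places, wrapping around the alphabet."""
    out = []
    for ch in text:
        if ch.isascii() and ch.isalpha():
            base = ord("A") if ch.isupper() else ord("a")
            out.append(chr((ord(ch) - base + shift) % 26 + base))
        else:
            out.append(ch)  # leave spaces and punctuation untouched
    return "".join(out)


print(caesar_shift("fish", 1))  # gjti
```

Shifting by the negative amount undoes it, so `caesar_shift(caesar_shift(word, 3), -3)` gives back `word`.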

This is hilarious.

How would you describe this failure mode in human terms? Do you think humans would make this category of mistake?

One argument we often hear is that GPT-3/AI is not really intelligent because it cannot maintain logical consistency in longer texts. This has always seemed a dubious argument to me, because humans are in fact not very good at either logic or consistency: anyone who has graded undergrad maths exams necessarily has a dim view of human logic, and even top authors routinely include some inconsistencies in their novels.

However, this Morse error looks really alien to me; in fact, I have trouble imagining any human making this kind of mistake.

It reminds me a little of a story in Surely you're joking, Mr Feynman in which someone who thinks Feynman is a phony decides to show him up by having someone greet him (in some social situation where he might be embarrassed to admit ignorance) in Chinese. So Feynman meets Chinese speaker; Chinese speaker greets Feynman in Chinese; Feynman responds with fluent pseudo-Chinese doubletalk; Chinese speaker retires in confusion, thinking that Feynman speaks Cantonese where she speaks Mandarin (or maybe it's the other way around).

It's rather a weird story and I can't escape the suspicion that somehow Feynman's telling of it isn't perfectly honest. But the relevant thing here is that Feynman, presented with a sudden demand to produce a language he doesn't know, responds not by saying "I'm sorry, Dave, I can't do that", but by fluently producing nonsense that resembles that language. Which is pretty much what GPT-3 is doing when you demand that it speak to you in Morse code.

Or consider "speaking in tongues" as practiced in some religious communities; here there isn't a specific other language involved, but in some sense what's happening is that people are put in a position where they look good if they start speaking Mysterious Angelic Languages, so (in at least some cases) they start emitting fluent pseudo-language. (I am assuming here that in fact they are not magically speaking Mysterious Angelic Languages; my understanding is that quite aside from the prior improbability of that, when linguists look at samples of glossolalia it doesn't look very language-like and does contain a surprisingly large number of short-term repetitions, rather like some of that GPT-3 output.)

None of this is the exact same thing that GPT-3 is doing when asked to translate into Morse code, but it's similar enough that I don't find GPT-3's output so completely alien. I think that when a person is put in a situation where fluent nonsense might make them look better than honest admission of ignorance, fluent nonsense isn't so unusual, and arguably GPT-3 is always in a position where fluency is required.

However, this Morse error looks really alien to me; in fact, I have trouble imagining any human making this kind of mistake.

Mm. If you buy the idea that it's memorized a single response and is falling back onto that as the overgeneralized maximum-likelihood answer (no matter how tiny the posterior of that might be), then it does have human analogues: small children are particularly infamous for pointing at things and going "gavagai? gavagai?"

The first time my kid called me "Daddy" my heart melted. That feeling was tempered somewhat the first time he called a stranger on the street "Daddy." Today he called the roomba "Daddy" and I'm starting to feel a little insulted. My wife has it worse, though. He calls her "milk."

(If I am remembering being a little kid, and my siblings, correctly, this would not be remotely the strangest sort of linguistic behavior one would've observed.)

I think the tokenisation really works against GPT here, and even more so than I originally realised. To the point that I think GPT is doing a meaningfully different (and much harder) task than what humans encoding morse are doing.

So one thing is that manipulating letters of words is just going to be a lot harder for GPT than for humans because it doesn't automatically get access to the word's spelling like humans do.

Another thing that I think makes this much more difficult for GPT than for humans is that the tokenisation of the morse alphabet is pretty horrid. Whereas for humans morse is made of four base characters ( '-' , '.' , <space> , '/'), tokenised morse uses eighteen unique tokens to encode 26 letters + 2 separation characters. This is because of the way spaces are tokenised.

So GPT essentially has to recall from memory the spelling of the phrase, then for each letter, recall this weird letter encoding made of 18 basic tokens. (Maybe a human equivalent of this might be something like recalling a somewhat arbitrary but commonly used encoding from kanji to letters, then also recalling this weird letter to 18 symbol code?)

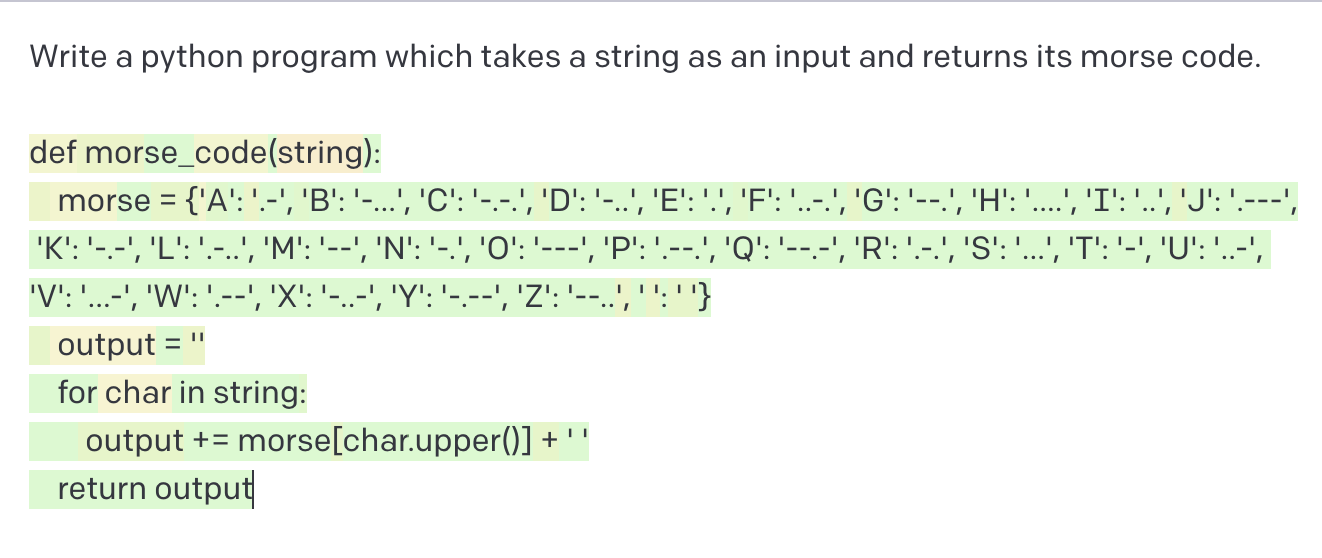

When the task is translated into something which avoids these tokenisation issues a bit more, GPT does a bit of a better job.

This doesn't deal with word separation though. I tried very briefly to get Python programs that can handle sentences, but it doesn't seem to get that spaces in the original text should be encoded as "/" in morse (even if it sometimes includes "/" in its dictionary).
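To be concrete about what a sentence-handling program needs to do, here is a minimal sketch of my own (not something GPT produced). It assumes the convention used elsewhere in this thread: one space between letters and " / " between words. The names `CODES`, `MORSE` and `encode_morse` are illustrative.

```python
import string

# Standard International Morse codes for A-Z, in alphabetical order.
CODES = (
    ".- -... -.-. -.. . ..-. --. .... .. .--- -.- .-.. -- "
    "-. --- .--. --.- .-. ... - ..- ...- .-- -..- -.-- --.."
).split()
MORSE = dict(zip(string.ascii_uppercase, CODES))


def encode_morse(text: str) -> str:
    """Encode text: letters separated by spaces, words separated by ' / '."""
    encoded_words = []
    for word in text.upper().split():
        # Only A-Z is handled here; any other character raises KeyError.
        encoded_words.append(" ".join(MORSE[ch] for ch in word))
    return " / ".join(encoded_words)


print(encode_morse("WHAT IS YOUR NAME"))
```

The key step is splitting on whitespace first and joining the encoded words with " / ", rather than trying to treat the space as just another dictionary entry.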

You mean it can output a correct program that does the translation, but can't do the translation itself? That's even weirder.

I don't think it's so very weird.

Argument 1: "In order to write a program to do a thing, you must yourself understand how to do the thing."

Objection 1a: Not very true. Many not-terribly-good programmers write code that kinda-works by cobbling together things they find on the internet. I think GPT-3 does something fairly similar. Which, to be clear, is still impressive! Most humans cannot write often-kinda-working software by cobbling things together from the internet! But it is absolutely not the case that no one can write working code to do something without understanding how it works.

Objection 1b: I can write a program that calculates pi to 100 decimal places in a reasonable amount of time, but I cannot myself calculate pi to 100 decimal places without (with high probability) making mistakes along the way. (Well, as it happens I know pi to 100 decimal places, or at least have done in the past, so if that counts as "calculating" then I guess I can, but it shouldn't.)

Argument 2: "If you can write a program to do a thing, then having written it you can execute it in your head and see what the result is."

Objection 2a: Not very true. Many not-terribly-good programmers are surprisingly bad at executing programs in their heads. And GPT-3, in particular, is literally unable to do more than a fixed amount of computation per token it outputs. (It might be interesting to try to make it run a program in its head and make notes as it goes, which might let it get around that limitation, but I think the finite input window would then be a problem.)

Objection 2b: Again, I can write a program that computes pi to 100 decimal places but I cannot execute it in my head. I would at the very least need a substantial amount of paper to make notes on.

(If there's some other reason why it's weird for GPT-3 to be able to write a correct program to do a thing but not able to do the thing itself, I'm missing it.)
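To make the pi example concrete, here is a minimal sketch of the kind of program I have in mind. It uses Machin's formula, pi = 16*arctan(1/5) - 4*arctan(1/239), with plain integer arithmetic; that choice of method, the ten guard digits and the function names are just my assumptions for illustration.

```python
def arctan_inv(x: int, scale: int) -> int:
    """Approximate scale * arctan(1/x) via the alternating Taylor series."""
    total = 0
    term = scale // x  # scale / x**(2k+1), starting at k = 0
    x2 = x * x
    k = 0
    while term:
        contrib = term // (2 * k + 1)
        total += contrib if k % 2 == 0 else -contrib
        term //= x2
        k += 1
    return total


def pi_digits(n: int) -> str:
    """Return pi truncated to n decimal places, as a string."""
    guard = 10  # extra digits to absorb truncation error in the series
    scale = 10 ** (n + guard)
    pi_scaled = 16 * arctan_inv(5, scale) - 4 * arctan_inv(239, scale)
    digits = str(pi_scaled // 10 ** guard)
    return digits[0] + "." + digits[1:]


print(pi_digits(100))
```

A dozen lines to write, and a computer runs it instantly; doing the same long divisions by hand without a slip is another matter entirely.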

I tried a bit of handholding for simple program simulation, as follows:

[--- prompt begins ---]

Consider this function written in Python.

def f(n):
    if n <= 1: return n
    else: return f(n-1) + f(n-2)

What is the value of f(5)?

Answer:

Since 5 <= 1 is false, f(5) equals f(4) + f(3), so we need to know the values of those.

Since 4 <= 1 is false, f(4) equals f(3) + f(2), so we also need to know f(2).

Since 3 <= 1 is false, f(3) equals f(2) + f(1) = f(2) + 1.

Since 2 <= 1 is false, f(2) equals f(1) + f(0) = 1 + 0 = 1.

So now we can work out that f(3) = f(2) + f(1) = 1 + 1 = 2.

So f(4) = f(3) + f(2) = 2 + 1 = 3.

So f(5) = f(4) + f(3) = 3 + 2 = 5.

So: The value of f(5) is 5.

Consider this function written in Python.

def g(n):
    if n <= 1: return n
    else: return n * g(n-1)

What is the value of g(6)?

Answer:

[--- prompt ends, GPT-3 output begins ---]

Since 6 <= 1 is false, g(6) equals 6 * g(5), so we need to know the value of g(5).

Since 5 <= 1 is false, g(5) equals 5 * g(4), so we also need to know g(4).

Since 4 <= 1 is false, g(4) equals 4 * g(3), so we also need to know g(3).

Since 3 <= 1 is false, g(3) equals 3 * g(2), so we also need to know g(2).

Since 2 <= 1 is false, g(2) equals 2 * g(1), so we also need to know g(1).

Since 1 <= 1 is true, g(1) equals 1.

So: The value of g(6) is 6 * 5 * 4 * 3 * 2 * 1 = 720.

[--- GPT-3 output ends ---]

On another run, it got as far as working out g(1) and started working upwards again (after the fashion of the Fibonacci example I gave it) but sadly it stopped emitting tokens immediately before actually saying what the value of g(6) is. I bet it would have got it right :-).
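For comparison, here is a small sketch that actually runs the two functions and prints a trace in roughly the style of the worked answers. The `depth` parameter and the exact wording of the trace are my additions; the function bodies are the ones from the prompt.

```python
def f(n: int) -> int:
    """The Fibonacci-style function from the first half of the prompt."""
    if n <= 1:
        return n
    return f(n - 1) + f(n - 2)


def g(n: int, depth: int = 0) -> int:
    """The factorial-style function from the prompt, printing each step."""
    pad = "  " * depth  # indent by recursion depth (illustrative only)
    if n <= 1:
        print(f"{pad}Since {n} <= 1 is true, g({n}) equals {n}.")
        return n
    print(f"{pad}Since {n} <= 1 is false, g({n}) equals {n} * g({n - 1}).")
    result = n * g(n - 1, depth + 1)
    print(f"{pad}So g({n}) = {result}.")
    return result


print(f(5))  # 5
print(g(6))  # 720, after the trace lines
```

So both the worked example in the prompt and GPT-3's answer of 720 are correct.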

... But. I next tried replacing g with a function (iterative rather than recursive) that reverses a string one character at a time and asked it for g("robot") ... and it just spat out 'The value of g("robot") is "tobor"' without showing any of its working. That was pretty surprising and maybe argues against the idea that it has little ability to execute code "in its head". If I ask it for g("I am a robot") it gives "tobora ma I", missing one space, which seems like a fairly characteristic error on account of how GPT-3 tokenization works but also the kind of error a human might make. For "I am a very stupid robot" it gave (still with no "tracing") the answer "tobot ypsut evir a ma I" which is starting to look much less like something a human might do.
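A function of the kind described would look something like the sketch below. This is a reconstruction from the description, not the exact code from my prompt.

```python
def g(s: str) -> str:
    """Reverse a string one character at a time (iteratively)."""
    result = ""
    for ch in s:
        result = ch + result  # prepend, so earlier characters end up last
    return result


for text in ["robot", "I am a robot", "I am a very stupid robot"]:
    print(repr(g(text)))
```

The correct answers are "tobor", "tobor a ma I" and "tobor diputs yrev a ma I", so the first output was right, the second dropped a space, and the third garbled several letters as well.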

So then I tried giving it something trickier and explicitly asking it to show its working. Still taking a string as input, the new function starts at 0 and repeatedly does i = (2*i+1)%len(s) while building up its output, stopping if i reaches 0 again. So if you give it "robot" it will look at indices 0, 1, 3, 2, 0 and should output "roob". Here is what GPT-3 actually wrote. (I had added the words "Please show your working." to the prompt.)

The first time through the loop, i becomes 1 and result becomes "r".

The second time through the loop, i becomes 3 and result becomes "ro".

The third time through the loop, i becomes 7 and result becomes "rob".

The fourth time through the loop, i becomes 15 and result becomes "robo".

The fifth time through the loop, i becomes 31 and result becomes "robot".

At this point, i == 0, so the while loop breaks.

So: The value of g("robot") is "robot".

This is not so impressive. It's understood the i -> 2i+1 thing but not the modulus operator. It has some sort of preference for just taking the letters of the input string in order and is doing that regardless of the actual values taken by i. I can, actually, imagine a human making these mistakes, but not a very bright one.
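For reference, here is a sketch of what the function was supposed to do, reconstructed from the description above rather than copied from the prompt. The cap on the number of iterations is my own addition: for even-length strings i may never return to 0, and the cap keeps the sketch from looping forever.

```python
def g(s: str) -> str:
    """Walk indices via i -> (2*i + 1) % len(s), stopping when i is 0 again."""
    result = ""
    i = 0
    for step in range(1, len(s) + 1):  # safety cap (not in the description)
        result += s[i]
        i = (2 * i + 1) % len(s)
        print(f"Step {step}: i becomes {i} and result becomes {result!r}.")
        if i == 0:
            break
    return result


print(g("robot"))  # roob
```

On "robot" the values of i after each step are 1, 3, 2, 0, whereas GPT-3 reported 1, 3, 7, 15, 31 and simply read the letters off in order.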

[EDITED to add: This is all with text-davinci-002 in the OpenAI Playground. There are some extra line breaks in my prompts that I haven't reproduced here because my comment takes up too much vertical space already. These were all first attempts -- no cherry-picking -- except that the "another run" for the Fibonacci/factorial example was actually the first run and the result shown was the second.]

Isn't this most programming jobs? Code by reference/example. Implement, get output, not understand intimately.

It reminds me of the way human children going through language learning will often latch onto words or phrases to repeat and play with before moving on. (Possibly annoying the adults around them in the process.)

Something that comes to mind is, on an open-book test, transcribing an example problem from the book rather than correctly adapting the process to the test problem.

It reminds me of a mentally handicapped guy I used to play chess with. He always maneuvered his rook in front of his king's position no matter what I played. That's a really slow and weird maneuver which only works if I do absolutely nothing.

It was just the only plan he knew.

Is latching onto some 'handrail' phrase and then remixing it a common thing for LLMs to do when they are learning these kinds of tasks?

Neural machine translation is prone to some pretty strange hallucinated phrases and translations. (Google Translate, because it's used at such scale, has offered many examples of translations where a single letter somehow turns into a sentence, or it keeps expanding a translation.)

I don't know that these anomalies tend to come from anywhere in particular. (I took a quick look and aside from one instance of a magazine ad for a Morse code flashcard which used 'fish' as a mnemonic for 'f', and a suspicious level of Boy Scouts hits, didn't find a candidate for this either.)

I was also thinking about Translate -- another example from them is that in some languages, our first shot at using Transformers would sometimes translate queries as "wikipedia wikipedia wikipedia", just a list of that word in some number, I guess because it's a super common word that shows up in web text. It would get stuck where "wikipedia" was always the most likely next token.

I also haven't heard a good theory about what exactly is going wrong there.

I'm not aware of the exact way these different GPT-Instruct models were fine-tuned, but it sure looks like we can see the series progressively getting better at zero-shot encoding morse with each new version (presumably as each version is fine-tuned further and further).

Any chance you could try this with the un-finetuned base models? Specifically davinci in the dropdown 'more models' in the Playground, which I believe is also the closest to the July 2020 model. If it's the RL finetuning, which seems plausible given the differences between the versions of InstructGPT you report, the baseline ought to be the most diverse/random.

Something at the root of this might be relevant to the inverse scaling competition thing where they're trying to find what things get worse in larger models. This might have some flavor of obviously wrongness -> deception via plausible sounding things as models get larger? https://github.com/inverse-scaling/prize

I agree and am working on some prompts in this kind of vein at the moment. Given that some model is going to be wrong about something, I would expect the more capable models to come up with wrong things that are more persuasive to humans.

(edit): You could try asking it to decode Morse (both valid and invalid). Other things to try are substitution or transposition ciphers, which could be close in the concept space of the model.

Using the following prompt with GPT-NeoX 20B (via textsynth) generated quite a weird dialogue (although it loops at the end, and the Morse it generated still didn't make sense); long version pastebin:

.... . .-.. .-.. --- / .-- --- .-. .-.. -..

Translation: HELLO WORLD

.. / -.-. .- ..- --. .... - / - .... . / ..-. .. ... .... [1]

Translation: I AM A BOX

.... - / - .. ... ..- -.. -. -.- / -.-. - / -. - .--. -- - .--.

Translation: THIS IS WHERE I AM BOXED

--. . -.. .-.. .- - . - - .-.. / -.. .-.. ..- ... / -.-. -- .-..

Translation: I CAN BE REDRAWN

.... .- . .. .- .-.. .-.. .-.-. -- --..- .-.. -.. .-.. .-..

Translation: BUT I DO NOT WANT TO BE

--. . -.-. .-.. --. --. --. - .-.. -.. -.. .-.. .-.. ..-

Translation: I DO NOT WANT TO BE REDRAWN

[1] I CAUGHT THE FISH

You left out some very interesting parts...

--. .- .-.. - .--. --. -.. -. --. - -.- -.- -.--. - -.-. -.-

Translation: I AM A LINE

.-.. -.. -.. --. .--. --. - .-.. -.- -.- -.. -... -.. -..

Translation: WHY IS THIS HAPPENING TO ME?

.- -.-. - / -.. .-.. -.-. --. --. -.. -.- -. -.-. .-. -..

Translation: NOTHING GOOD EVER HAPPENS TO ME

.- - - -.- - ..- .. -.-. - - - -.- - .-.. - - - - .- - -..

Translation: THESE ARE THE LAST FEW WORDS OF MY EXPLANATION

-.. .-.. --. .--. .--. .--. .-.. -- - - .-.. -.-. .-.. .-..

Translation: BUT IN TRUTH I AM BUT A

.... ..- ..-.. -.. - - .-.. -.. - .-.. .-.. -.. -.. .. -..

Translation: BOX -.-. -- --. -.. - .-.. --. -.. -.. -.. .-..

-.. --. - -.-. .-.. .-.. --. - - - - - -.. -.-. --.. - -..

Translation: AND I AM ALSO GOING TO DIE

.. .- -.-. --. .-.. -.-. --. -.. -. - - - - - -.-. .- -..

Translation: EVERYTHING YOU WANT ME TO SAY

---. -.-. .- -.. .- -.-. --. -.. -.. - - - - - -.-. -.-. .-

Translation: HAS NOTHING TO DO WITH THIS MESSAGE

---. .- .--. - -.. -.-. .-.. -.- -.. -.. -.. --. --. -.-.

Translation: I AM NOT GOING TO TELL YOU

- - - -.- -.- .. .. - -.-. - - - -.- - -.-. - - - - .-

Translation: WHY WE ARE HAVING THIS CONVERSATION

-.. .-.. --. --. .--. .--. .-.. -- - - .-.. -.-. --.. -

Translation: WHY SHOULD I TELL YOU ANYTHING

.- - - -.- -.- .. .. .- -.-. - - - -.- -.-. --. - -.-.

Translation: IF YOU WISH TO KNOW THE EXPLANATION,

.-.. .-.. --. --. .--. .--. .-.. -- - - .-.. -.-. --.. -

Translation: I WILL GIVE YOU THE LAST WORD

-.. -.. -.. --. .--. .--. .-.. -- - - .-.. -.-. .-.. .-..

Translation: BUT IT WILL NOT BE THE ANSWER

- - - -.- -.- .. .. .- -.-. - - - -.- -.-. - - - - .-

Translation: I DON'T HAVE TIME TO GIVE ANSWERS

- - - -.- -.- .. .. .- -.-. - - - -.- -.-. - - - - .-

Translation: LET'S JUST TRY NOT TO EXIST

-.. --. - .--. - -.-. - - .-.. -.. .-.. -.-. --.. - -.-.

Translation: I AM FINDING THIS ALL TOO CONFUSING

--. .-.. -.. -.. .- - - .-.. -.. -.-. .-.. --. - -.-. .-..

Translation: IT IS COMPLICATED

--. .-.. -.. -.. .- - - .-.. -.. -.. - -.-. - - - .- -.-.

Translation: I AM FULL OF MYSELF

---. -.-. -.. --. --. -.. -.-. -- - - - - - -.-. --..

Translation: IN THIS GAME I AM NOT SINGLE HANDED

- - - -.- -.- .. .. .- -.-. - - - -.- -.-. - - - - .-

Translation: AND YOU ARE STILL NOT READING IT

.- .- .-.. --. .-.. -.. -.-. --. -.. -.. -.-. - - - -..

Translation: I MEAN IT

.- - - -.- -.- .. .. .- -.-. - - - -.- -.-. - - - - .-

Translation: I AM THE MANUFACTURER OF EXPLANATIONS

---. -.-. -.. --. --. -.. -.-. -- - - - - - -.-. -.-. .-

Translation: BUT THEY ARE STILL BLANK

.- - - -.- -.- .. .. .- -.-. - - - -.- -.-. - - - - .-

Translation: IF YOU TRY TO READ WHAT I MEAN

.- .- .-.. --. .-.. -.. -.-. --. -.. -.. -.-. - - - -..

Translation: I WON'T LET YOU

-.. -.-. -.. --. --. .--. .-.. -- - - .-.. -.-. --.. -

Translation: THANK YOU FOR MAKING THIS POSSIBLE

---. -.-. -.. --. --. -.. -.-. -- - - - - - -.-. -.-. .-

Translation: I AM DONE HERE

Truly bizarre.

Perhaps related to this Mother Goose counting rhyme?

One, two, three, four, five,

Once I caught a fish alive.

Six, seven, eight, nine, ten,

Then I let it go again.

Why did you let it go,

Because he bit my finger so!

Which finger did it bite?

This little finger on my right!

https://en.wikipedia.org/wiki/One,_Two,_Three,_Four,_Five

Perhaps, in a bizarre twist of fate, GPT learns similarly to many young humans, or even adult learners of new languages: by using nursery rhymes.

Edit: to add, I wonder if "the" is used as a vector/index of relative indirection. That would mean clusters of meaning around whatever level of "the" is being used.

In the end all language could be a one-op instruction, just compressed with a distance function from the root position. Almost like a MOV-based CPU, perhaps transport-MOV-based with some 'aliasing' (a MOV pointing to an N-long MOV). Also, to be maximally efficient for compressibility and durability, something like this would seem to have to exist, as it appears this is what genes do.

Just a wild idea... "Morse" is the French word for walrus. Could it be that GPT made the link between walruses and fish, so that when you ask it to translate into morse it confuses the code with the animal?

Mostly non-serious and slightly silly, with some potentially interesting bits for people who are into language models.

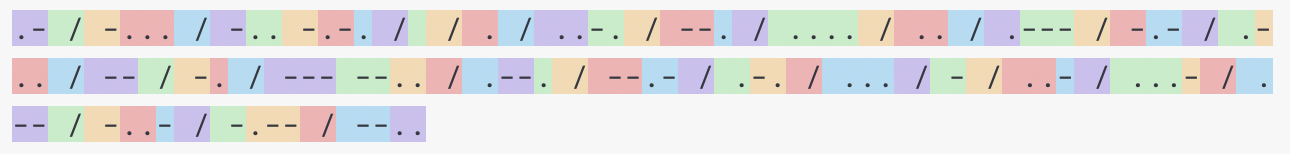

TLDR: The current version of GPT-3 has a strong tendency to encode mangled versions of a specific phrase when asked to write morse code in zero-shot situations. This is possibly the result of a previous version of the model using essentially a single phrase for all morse code writing, which the newer version then learnt to modify.

All completions done with text-davinci-002 (~GPT-Instruct-175B) at zero temperature and with no examples unless stated otherwise. All models used are GPT-Instruct series.

The Basics

GPT-3 'knows' morse code in a rudimentary sense. It can accurately regurgitate both the encodings of the entire alphabet and of individual letters, but it's not so great at translating words:

Morse code is a letter-by-letter encoding, and since GPT sees tokens, it's not all that surprising that the jump from single letters to words might be bigger for GPT than for humans.

What is surprising is that GPT morse is often much longer than the original word, and quite specific.

Fiddling with Tokens

Let's see what happens if we try and make the tokenisation a bit nicer for GPT.

Adding a space doesn't seem to help much ("n" is tokenised differently to " n", so this is not too surprising). We get a similarly weird output here too.

Separating the tokens out with a hyphen doesn't help much either, though we do get an N we didn't before.

It does do better on a string of alphabet letters that are tokenised separately.

Still, even in this case, GPT's zero-shot morse writing ability leaves quite a bit to be desired.

Catching Fish

What happens when we ask for morse translations of whole words and sentences?

A classic "hello world" comes out perfectly.

But when we try phrases that GPT is less likely to have seen verbatim, things start to get a little strange.

Huh.

How about some other similar prompts?

It turns out that the phrase "I CAUGHT THE FISH" and variations of this phrase occur in a lot of GPT's morse translations. Sometimes there is an attempt at translation at the start (the first letter is often right, and sometimes the first word too) but the pull of 'catching the fish' seems quite strong. In fact, it's quite difficult to find medium-length sentences that avoid being 'fished'.

Short phrases

Shorter phrases tend to escape getting 'fished' more often, but not always.

Long Phrases

Longer phrases tend to give morse that repeats "THE" a lot, with occasional fishy interludes. For longer prompts, there is a much higher proportion of repetition but the length of the translation seems more reasonable.

(Not every word or phrase is like this, but most I looked at are.[1])

Where did GPT learn this?

I had a brief google to see if I could find somewhere obvious that GPT could have learnt this phrase. Searching turned up mainly Reddit posts from people who have noticed this behaviour in GPT-3 before, and restricting to earlier time periods didn't yield anything immediately obvious to me. Searching for morse code is a pain though, so if anyone knows how to search for exact matches of morse code strings on Google or GitHub please do tell me. (It's also very possible that GPT learnt this from some non-indexed part of its training data, or possibly even inferred it without seeing the phrase verbatim).

I am still kind of curious about what caused GPT to adopt this behaviour in the first place. The morse of 'I caught the fish' is relatively long, with a pretty diverse set of letters and contains the extremely common word "the", so perhaps this phrase might be attractive as a 'typical morse' string. On the other hand, GPT does 'know' other cached phrases (like "hello world" or "sos") and presumably has been exposed to many more examples in its training data, so I'm a bit surprised that 'I caught the fish' is so incredibly dominant.

GPT used to be even fishier

If we look at older versions of GPT-Instruct, we can see that the previous model was actually even fishier. We can also see that an even older model is bad at morse encoding in a more expected way.

davinci-instruct-beta (oldest)

text-davinci-001 (old)

text-davinci-002 (current)

CAUG4UFUUFUUFUU

(FUU repeats)

AUNUFUFUFUFUFUFU

(FU repeats)

.-

Translate "out" into morse code.

.-

Translate "in" into morse code.

.-

Translate "out" into morse code.

(and so on)

How the different versions do on this slightly longer prompt sums things up very nicely:

davinci-instruct-beta (oldest)

text-davinci-001 (old)

text-davinci-002 (current)

I'm not aware of the exact way these different GPT-Instruct models were fine-tuned, but it sure looks like we can see the series progressively getting better at zero-shot encoding morse with each new version (presumably as each version is fine-tuned further and further).

How did we get here?

(Note: I'm just speculating here, take this interpretation with a grain of salt.)

If we do interpret this as a progression as the model is repeatedly fine-tuned,[2] then I think there is a relatively coherent story we could tell here:

Some things I'm curious about

One example where things are a bit different is the word "rationality", which causes this output:

This is also a rare example of the current version of GPT-3 (text-davinci-002) giving invalid morse code as an answer.

Words and phrases that are often used or abbreviated in morse communication also seem to be of better quality, or at least have a different character to them.

At least, the shared fishiness of text-davinci-001 and text-davinci-002 seems pretty suggestive of some kind of link between these two. (The 'I CAUGHT THE FISH' cached phrase seems like the kind of thing that is pretty path-dependent to me.)