Sometimes they're the same thing. But sometimes you have:

- An unpredictable process with predictable final outcomes. E.g. when you play chess against a computer: you don't know what the computer will do to you, but you know that you will lose.

- (gap) A predictable process with unpredictable final outcomes. E.g. when you don't have enough memory to remember all past actions of the predictable process, yet the final outcome is determined by those past actions.

Quoting E.W. Dijkstra quoting von Neumann:

...for simple mechanisms, it is often easier to describe how they work than what they do, while for more complicated mechanisms, it is usually the other way around.

Also, you just made me realise something. Some people apply the "beliefs should pay rent" thing way too stringently. They're mostly interested in learning about complicated & highly specific mechanisms because it's easier to generate concrete predictions with them. So they overlook the simpler patterns because they pay less rent upfront, even though they are more general and a better investment long-term.

For predictions & forecasting, learn about complex processes directly relevant to what you're trying to predict. That's the fastest (tho myopic?) way to constrain your expectations.

For building things & generating novel ideas, learn about simple processes from diverse sources. Innovation on the frontier is anti-inductive, and you'll have more success by combining disparate ideas and iterating on your own insights. To combine ideas far apart, you need to abstract out their most general forms; simpler things have fewer parts that can conflict with each other.

So they overlook the simpler patterns because they pay less rent upfront, even though they are more general and a better investment long-term.

...

And if you use this metaphor to imagine what's going to happen to a tiny drop of water on a plastic table, you could predict that it will form a ball and refuse to spread out. While the metaphor may only be able to generate very uncertain & imprecise predictions, it's also more general.

Can you expand on this thought ("something can give less specific predictions, but be more general") or reference famous/professional people discussing it? This thought can seem very trivial, but it can also be very controversial.

Right now I'm writing a post about "informal simplicity", "conceptual simplicity". It discusses simplicity of informal concepts (concepts not giving specific predictions). I make an argument that "informal simplicity" should be very important a priori. But I don't know if "informal simplicity" was used (at least implicitly) by professional and famous people. Here's as much as I know: (warning, controversial and potentially inaccurate takes!)

- Zeno of Elea made arguments basically equivalent to "calculus should exist" and "theory of computation should exist" ("supertasks are a thing") using only basic math.

- The success of neural networks is a success of some of the simplest mechanisms: backpropagation and attention. (Even though they can be heavy on math too.) We observed a complicated phenomenon (real neurons), we simplified it... and BOOM!

- Arguably, many breakthroughs in early and late science were sealed behind simple considerations (e.g. the equivalence principle), not deduced from formal reasoning. Feynman diagrams weren't deduced from some specific math, they came from the desire to simplify.

- Some fields "simplify each other" in some way. Physics "simplifies" math (via physical intuitions). Computability theory "simplifies" math (by limiting it to things which can be done by a series of steps). Rationality "simplifies" philosophy (by connecting it to practical concerns) and science.

- To learn to fly, the Wright brothers had to analyze "simple" considerations.

- Eliezer Yudkowsky influenced many people with very "simple" arguments. The rationalist community as a whole is a "simplified" approach to philosophy and science (to a degree).

- The possibility of a logical decision theory can be deduced from simple informal considerations.

- Albert Einstein used simple thought experiments.

- Judging by the famous video interview, Richard Feynman liked to think about simple informal descriptions of physical processes. And maybe Feynman talked about the "less precise, but more general" idea? Maybe he said that epicycles were more precise, but a heliocentric model was better anyway? I couldn't find it.

- Terry Tao occasionally likes to simplify things (e.g. P=NP and multiple choice exams, Quantum mechanics and Tomb Raider, Special relativity and Middle-Earth, and Calculus as “special deals”). Is there more?

- Some famous scientists weren't shying away from philosophy (e.g. Albert Einstein, Niels Bohr?, Erwin Schrödinger).

Please share any thoughts or information relevant to this, if you have any! It's OK if you write your own speculations/frames.

I've taken to calling it the 'Q-gap' in my notes now. ^^'

You can understand AlphaZero's fundamental structure so well that you're able to build it, yet be unable to predict what it can do. Conversely, you can have a statistical model of its consequences that lets you predict what it will do better than any of its engineers, yet know nothing about its fundamental structure. There's a computational gap between the system's fundamental parts & its consequences.

The Q-gap refers to the distance between these two explanatory levels.

...for simple mechanisms, it is often easier to describe how they work than what they do, while for more complicated mechanisms, it is usually the other way around.
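A toy illustration of that gap. The Collatz map is not from the original discussion; it's only a convenient stand-in for a "simple mechanism". The rule fits in one line, but as far as I know there is no shortcut for how long a given number takes to reach 1 other than running the rule.

```python
def collatz_step(n: int) -> int:
    """The whole mechanism: halve even numbers, map odd n to 3n + 1."""
    return n // 2 if n % 2 == 0 else 3 * n + 1


def stopping_time(n: int) -> int:
    """Count how many steps it takes n to reach 1 (assumes n >= 1)."""
    steps = 0
    while n != 1:
        n = collatz_step(n)
        steps += 1
    return steps


if __name__ == "__main__":
    # Neighbouring inputs, very different behaviour.
    for n in (26, 27, 28):
        print(n, stopping_time(n))
```

Describing how it works is trivial. Describing what it does (say, predicting the stopping time of a number without running the loop) is where the gap shows up.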

Let's say you've measured the surface tension of water to be 73 mN/m at room temperature. This gives you an amazing ability to predict which objects will float on top of it, which will be very useful for e.g. building boats.
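As a rough sketch of what such a measurement-based prediction could look like (a simplification, not the commenter's own calculation): surface tension can hold up a small object with a force of at most about γ times the length of the object's contact line with the water, so you compare that bound with the object's weight. This ignores buoyancy and the contact angle, so it only makes sense for small dense objects, and the masses and lengths below are made-up illustrative numbers.

```python
GAMMA_WATER = 0.073  # N/m, surface tension of water at room temperature (from the text)
G = 9.81             # m/s^2, standard gravity


def max_surface_force(contact_length_m: float, gamma: float = GAMMA_WATER) -> float:
    """Upper bound on the upward force surface tension can supply, in newtons."""
    return gamma * contact_length_m


def can_rest_on_surface(mass_kg: float, contact_length_m: float) -> bool:
    """True if surface tension alone could carry the object's weight."""
    return mass_kg * G <= max_surface_force(contact_length_m)


# Hypothetical objects: (mass in kg, contact-line length in m).
needle = (0.0001, 0.08)  # 0.1 g needle, 4 cm long, water touching both sides
coin = (0.005, 0.07)     # 5 g coin, roughly 7 cm circumference

if __name__ == "__main__":
    print("needle:", can_rest_on_surface(*needle))
    print("coin:", can_rest_on_surface(*coin))
```

The point is that a single measured number gives sharp yes/no predictions, but only for the narrow question it was measured for.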

As an alternative approach, imagine zooming in on the water while an object floats on top of it. Why doesn't it sink? It kinda looks like the tiny waterdrops are trying to hold each other's hands like a crowd of people (h/t Feynman). And if you use this metaphor to imagine what's going to happen to a tiny drop of water on a plastic table, you could predict that it will form a ball and refuse to spread out. While the metaphor may only be able to generate very uncertain & imprecise predictions, it's also more general.

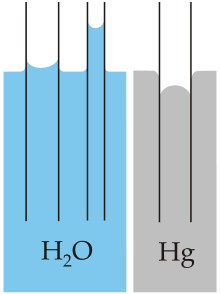

By trying to find metaphors that capture aspects of the fundamental structure, you're going to find questions you wouldn't have thought to ask if all you had were empirical measurements. What happens if you have a vertical tube with walls that hold hands with the water more strongly than water holds hands with itself?[1]
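The textbook answer to that question is capillary rise: the water climbs the tube. A minimal sketch using Jurin's law, h = 2γ cos θ / (ρ g r), which is a standard result but not something the comment itself spells out. The tube radius and the contact angles below are assumed values for illustration.

```python
import math


def capillary_rise_m(
    radius_m: float,
    gamma: float = 0.073,            # N/m, surface tension of water (from the text)
    contact_angle_deg: float = 0.0,  # assumed: 0 means the wall attracts water strongly
    density: float = 1000.0,         # kg/m^3, water
    g: float = 9.81,                 # m/s^2
) -> float:
    """Height the liquid column climbs in a narrow tube (negative means it is pushed down)."""
    cos_theta = math.cos(math.radians(contact_angle_deg))
    return 2 * gamma * cos_theta / (density * g * radius_m)


if __name__ == "__main__":
    # Walls that "hold hands" with water strongly: the column rises.
    print(capillary_rise_m(0.0005))
    # Walls that water avoids (contact angle above 90 degrees): the column is depressed.
    print(capillary_rise_m(0.0005, contact_angle_deg=120.0))
```

For a tube of 0.5 mm radius this gives a rise of roughly 3 cm, and halving the radius doubles the height.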

Beliefs should pay rent, but if anticipated experiences are the only currency you're willing to accept, you'll lose out on generalisability.

I want to discuss a potential failure mode of communication and thinking in general. I think it may affect our thinking about Alignment too.

Communication. A person has a vague but useful idea (P). This idea is applicable on one level of the problem. It sounds similar to another idea (T), applicable on a very different level of the problem. Because of the similarity, nobody can understand the difference between (P) and (T), even though they're on different levels. People end up overestimating the vagueness of (P) and not even considering it, because they aren't used to mapping ideas to "levels" of a problem. Information that should give more clarity (P is similar to T) ends up creating more confusion. I think this is irrational: it's a failure of dealing with information.

Thinking in general. A person has a specific idea (T) applicable on one level of a problem. The person doesn't try to apply a version of this idea on a different level, because (1) she isn't used to it and (2) she considers only very specific ideas, but can't come up with a specific idea for other levels. I think this is irrational: rationalists shouldn't shy away from vague ideas and evidence. It's a predictable way to lose.

A comical example of this effect:

B can't understand A, because B thinks about the problem on the level of "chemical reactions". On that level it doesn't matter what heats the food, so it's hard to tell the difference between exploding the oven and using the oven in other ways.

The bad news is that Taboo may fail to help, because A doesn't know the exact way to turn on the oven or the exact way the oven heats the food. Her idea is very useful if you try it, but it doesn't come with a set of specific steps. Taboo could even be harmful, because it could encourage B to keep thinking on the level of chemical reactions.

And the worst thing is that A may not be there in the first place. There may be no one around to even bother you to try to use your oven differently.

I think rationality doesn't have a general cure for this, but this may actually be one of the most important problems of human reasoning and communication. I think all of our knowledge contains "gaps". They, by definition, seem very obscure, because we're distracted by and preoccupied with other ideas.

Any good idea that was misunderstood and forgotten was forgotten because of this. Any good argument that was ignored and ridiculed was ignored because of this. It all got lost in the gaps. AGI risk arguments, decision theories alternative to the two main ones... Rationality itself is in the gap of obscurity.

Metrics

I think one method to resolve misunderstandings is to add some metrics for comparing ideas, and then talk about something akin to (quasi)probability distributions over those metrics. A could say:

"Instruments have parts with different functions. Those functions are not the same, even though they may intersect and be formulated in terms of each other:

In practice, some parts of the instrument realize both functions. E.g. the handle of a hammer actually allows you not only to control the hammer, but also to speed up the hammer more effectively.

When we blow up the oven, we use 99% of the first function of the oven. But I believe we can use 80% of the second function and 20% of the first."

Note: I haven't figured out all the rules of those quasiprobabilities, especially in weirder cases. But I think the idea might be important. It might be important to have distributions that describe objects in and of themselves, not uncertainties over objects.

Complicated Ideas

Let's explore some ideas to learn to attach ideas to "levels" of a problem and seek "gaps". "(gap)" means that the author didn't consider/didn't write about that idea.

Two of those ideas are from math. Maybe I shouldn't have used them as examples, but I wanted to give diverse examples.

(1) "Expected Creative Surprises" by Eliezer Yudkowsky. There are two types of predictability:

Sometimes they're the same thing. But sometimes you have:

(2) "Belief in Belief" by Eliezer Yudkowsky. Beliefs exist on three levels:

Sometimes a belief exists on all those levels and contents of the belief are the same on all levels. But sometimes you get more interesting types of beliefs, for example:

(3) "The Real Butterfly Effect", explained by Sabine Hossenfelder. There're two ways in which consequences of an event spread:

In a way it's kind of the same thing. But in a way it's not:

(4) "P=NP, relativisation, and multiple choice exams", Baker-Gill-Solovay theorem explained by Terence Tao. There are two dodgy things:

Sometimes they are "the same thing", sometimes they are not.

(5) "Free will and conscious experience are a special type of illusion." An idea of Daniel Dennett. There are 2 types of illusions:

Conscious experience is an illusion of the second type, Dennett says. I don't agree, but I like the idea and think it's very important.

Somewhat similar to Fictionalism: there are lies and there are "truths of fiction", "useful lies". Mathematical facts may be the same type of facts as "Macbeth is insane/Macbeth dies".

(6) "Tlön, Uqbar, Orbis Tertius" by Jorge Luis Borges. A language has two functions:

Different languages can have different focus on those functions:

I think there's an important gap in Borges's ideas: Borges doesn't consider a language with extremely strong, but not absolute emphasis on the second function. Borges criticizes his languages, but doesn't steelman them.

(7) "Pierre Menard, Author of the Quixote" by Jorge Luis Borges. There are 3 ways to copy a text:

Pierre Menard wants to copy 100% of 2: Pierre Menard wants to imagine exactly the same text but with completely different thoughts behind the words.

(gap) Pierre Menard could also try to go for 99% of 3 and for "anti 100%" of 4: try to write a completely new text by experiencing the same thoughts and urges that created the old one.

Puzzles

You can use the same thinking to analyze/classify puzzles.

Inspired by Pirates of the Caribbean: Dead Man's Chest. Jack has a compass that can lead him to a thing he desires. Jack wants to find a key. Jack can have those experiences:

In order for the compass to work Jack may need (almost) any mix of those: for example, maybe pure desire is enough for the compass to work. But maybe you need to mix pure desire with seeing at least a drawing of the key (so you have more of a picture of what you want).

Jack has those possibilities:

Gibbs thinks about doing 100% of 1 or 100% of 2 and gets confused when he learns that's not the plan. Jack thinks about 50% of 1 and 50% of 2: you can go after the chest in order to use it to get the key. Or you can go after the chest and the key "simultaneously" in order to keep Davy Jones distracted and torn between two things.

Braid, Puzzle 1 ("The Ground Beneath Her Feet"). You have two options:

You need 50% of 1 and 50% of 2: first you ignore the platform, then you move the platform... and rewind time to mix the options.

Braid, Puzzle 2 ("A Tingling"). You have the same two options:

Now you need 50% of 1 and 25% of 2: you need to rewind time while the platform moves. In this time-manipulating world outcomes may not add up to 100% since you can erase or multiply some of the outcomes/move outcomes from one timeline to another.

Argumentation

You can use the same thing to analyze arguments and opinions. Our opinions are built upon thousands and thousands of "false dilemmas" that we haven't carefully revised.

For example, take a look at those contradicting opinions:

Usually people think you have to believe either "100% for 1" or "100% for 2". But you can believe in all kinds of mixes.

For example, I believe in 90% of 1 and 10% of 2: people may be "stupid" in this particular nonsensical world, but in a better world everyone would be a genius.

Ideas as bits

You can treat an idea as a "(quasi)probability distribution" over some levels of a problem/topic. Each detail of the idea gives you a hint about the shape of the distribution. (Each detail is a bit of information.)
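A minimal sketch of what "each detail is a bit of information" could look like. It uses ordinary probabilities, which is a simplification: the post talks about quasiprobabilities and says their rules aren't worked out. The level names and the "fit" numbers are hypothetical.

```python
import math


def normalize(weights: dict) -> dict:
    """Rescale non-negative weights so they sum to 1."""
    total = sum(weights.values())
    return {level: w / total for level, w in weights.items()}


def update(belief: dict, fit: dict) -> dict:
    """Reweight each level by how well a new detail fits it (Bayes' rule)."""
    return normalize({level: p * fit[level] for level, p in belief.items()})


def entropy_bits(belief: dict) -> float:
    """Remaining uncertainty about which level the idea lives on, in bits."""
    return -sum(p * math.log2(p) for p in belief.values() if p > 0)


# Hypothetical levels an idea might live on; start with no opinion.
belief = normalize({"level 1": 1, "level 2": 1, "level 3": 1, "level 4": 1})

# A hypothetical detail that fits levels 1 and 2 but rules out levels 3 and 4.
detail = {"level 1": 1.0, "level 2": 1.0, "level 3": 0.0, "level 4": 0.0}
updated = update(belief, detail)

if __name__ == "__main__":
    print(entropy_bits(belief) - entropy_bits(updated), "bit(s) gained")
```

Here the uncertainty drops from 2 bits to 1 bit, so this one detail was worth exactly one bit. Softer details (fit values between 0 and 1) would move the distribution by fractions of a bit.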

We usually don't analyze information like this. Instead of cautiously updating our understanding with every detail of an idea we do this:

Note: maybe you can apply the same idea about levels and "bits" to chess (and other games). You could split a position into multiple versions, each version reflecting the depth of your knowledge or the depth of your calculations. Then you could try to measure anything you like in quasiprobabilities/bits: each idea about the position, each small (perceived) advantage, the amount of control you have over different parts of the board. It doesn't have to be equivalent to "evaluation functions", you can try to measure your higher-level chess ideas. "Levels" also could describe how much a given position is similar to some prototypical positions. You could try to measure how "hard" a certain position was for you: how many bits of advantage/ideas you needed to win it.

Richness of ideas

I think you can measure "richness" of theories (and opinions and anything else) using the same quasiprobabilities/bits. But this measure depends on what you want.

Compare those 2 theories explaining different properties of objects:

Let's add a metric to compare 2 theories:

Let's say we're interested in physical objects. B-theory explains properties through 90% of 1 and 10% of 2: it makes properties of objects equivalent to the reason for their existence. A-theory explains properties through 100% of 2. B-theory is more fundamental, because it touches more on a more fundamental topic (existence).

But if we're interested in mental objects... B-theory explains only 10% of 2 and 0% of 1. And A-theory may be explaining 99% of 1. If our interests are different A-theory turns out to be more fundamental.

When you look for a theory (or opinion or anything else), you can treat any desire and argument as a "bit" that updates the quasiprobabilities like the ones above.

Political gaps

Imagine two tribes:

I think the conflict between the tribes would dissolve if there appeared various mixes between the tribes: "physics-like math", "math-like physics" and so on.

Note that a "mix" isn't equivalent to indifference or centrism: each "mix" can be very radical. Indifference or centrism more or less accept the binary distinction, but "mixes" are supposed to show how meaningless it is in the first place.

Helping each other

We could help each other to find gaps in our thinking! We could do this in the comments.

Gaps of Alignment

I want to explain what I perceive as missed ideas in Alignment. And discuss some other ideas.

I think the main "missed idea" is the fact that there's no map of Alignment ideas, no map of the levels of the problem they work on. Because of this almost any idea ends up "missed" anyway. Communication between ideas suffers.

(1) You can split possible effects of AI's actions into three domains. All of them are different (with different ideas), even though they partially intersect and can be formulated in terms of each other. Traditionally we focus on the first two domains:

I think the third domain is mostly ignored and it's a big blind spot.

I believe that "human (meta-)ethics" is just a subset of a way broader topic: "properties of (any) systems". And we can translate the method of learning properties of simple systems into a method of learning human values (a complicated system). And we can translate results of learning those simple systems into human moral rules. And many important complicated properties (such as "corrigibility") have analogues in simple systems.

(2) Another "missed idea":

"True Love™ towards a sentient being" feels fundamentally different from "eating a sandwich", so it could be evidence that human experiences have an internal structure and that structure plays a big role in determining values. But not a lot of models (or simply 0) take this "fact" into account. Not surprisingly, though: it would require a theory of human subjective experience. But still, can we just ignore this "fact"?

Maybe Shard Theory takes this into account at least a little bit. Maybe it assumes 50% of 4 and 50% of 3.

(3) Preference utilitarianism says:

I think there's a missed idea: you could try to describe entire ethics by a weighted aggregation of a single... macroscopic value. The same idea, but on a different level.

I think Shard Theory goes about 50% in that direction (halfway). Because it describes values as big enough pieces of your mind (shards) that can be formed from smaller pieces.

(4) Connectionism and Connectivism. I think this is a good example of a gap in our knowledge:

I think one layer of the idea is missing: you could say that concepts in the human mind are somewhat like neurons. Maybe human thinking is like a fractal: it looks the same on all levels.

Again, Shard Theory explores some of that direction, because it describes macroscopic "concepts" affecting each other. Here's my awkward attempt to introduce the idea for computer vision: "Can you force a neural network to keep generalizing?"

(5) Bayesian probability. The core idea:

I think this idea should have a "counterpart": maybe you can describe macroscopic things, and not only outcomes, in terms of each other, using something somewhat similar to probabilistic reasoning and Bayes' rule.

That's what I tried to hint at in this post. Maybe probabilistic reasoning can be applied to something other than outcomes.

Gaps of Taboo

Taboo Your Words

Maybe you could modify Taboo by using the idea of levels. We can get more radical than blanking words: we can blank almost everything, even concepts themselves. When we compare ideas, we can compare them by the types of "actions/mechanics" they make or use. You could call it a recursive Taboo.

Imagine that the Alignment problem is about getting the ball in the golf hole. There're different Alignment approaches:

With standard Taboo we could get into many details of those approaches, details that are potentially irrelevant for deciding which approach is the most promising, because they can be varied and modified. Then we could get into arguments upon arguments about what's the best approach, and we could also get completely lost in all the possible mixes of those approaches. There's an infinity of ways to combine the three!

With "recursive taboo" we could blank out all the concepts those approaches use and compare them only by the types of "actions" they use:

Now we don't need to argue too much: we just need to find what actions/mechanics are likely usable and combine all those actions/mechanics into a single approach. We dissolved any artificial borders between different approaches. We also dissolved many possible conflicts, because now we see how and why research about any of the methods can help any other method.

It seems to me that people, for some reason, don't think about abstract actions/mechanics at all and aren't interested in them.