Also when I changed the "How much easier/harder each coding time horizon doubling gets" parameter by small amounts, the forecasted time from AC to ASI changes significantly (2.7 years at 0.90, over 4 years for 1.00), so it looks like stages 2 and 3 are affected as well.

I'd guess that this is only because compute growth (and human labor growth, but that doesn't matter as much) is slower during takeoff if takeoff starts later.

Let's test this. The theory predicts that whichever time horizon growth parameter I change, I should get the same takeoff as long as takeoff ends up starting at the same time:

- From the starting state, if I raise "How much easier/harder..." to 0.99, AC happens in 1/2040, and ASI happens in 3/2044 (so 4 years 2 months, replicating you)

- If I instead raise present doubling time ("How long it...") to 9.5 months, then AC happens in 12/2039, and ASI happens in 2/2044 (same speed as in the first bullet)

- I can't get AC at that time by only raising AC time horizon requirement, but if I raise it to the max, then raise "How much easier/harder..." to 0.95, I get pretty close: AC at Jul 2038, and ASI at Aug 2042. Barely under 4 year takeoff. If I also raise present doubling time to 6 months, then I get 8/2040 to 11/2044 takeoff, 4 year 3 month takeoff.

Ok, looks like I was right. I'm pretty sure that these do affect takeoff, but only by changing the starting date.
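The takeoff lengths quoted in the bullets above can be recomputed from the month/year dates. This is only a small arithmetic sketch: the helper names are made up for illustration, and because the dates are given at month granularity, the results can differ by up to a month from differences taken on exact dates.

```python
def months_between(start, end):
    """Whole months from start to end, each given as a (month, year) pair."""
    (m0, y0), (m1, y1) = start, end
    return (y1 - y0) * 12 + (m1 - m0)


def as_years_months(months):
    """Format a month count as 'Ny Mm'."""
    years, rem = divmod(months, 12)
    return f"{years}y {rem}m"


# (AC date, ASI date) pairs copied from the bullets above, as (month, year).
runs = {
    "easier/harder = 0.99": ((1, 2040), (3, 2044)),
    "doubling time = 9.5 months": ((12, 2039), (2, 2044)),
    "max AC requirement, easier/harder = 0.95": ((7, 2038), (8, 2042)),
    "same, plus doubling time = 6 months": ((8, 2040), (11, 2044)),
}

for label, (ac, asi) in runs.items():
    print(f"{label}: {as_years_months(months_between(ac, asi))}")
```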

Edit: actually, sorry, these can also affect takeoff via the coding automation task efficiencies when reaching AC / start of takeoff, because if the effective compute requirement is different then the logistic curve has a lower slope, not just shifted over to the right. My guess is that the compute growth is having a larger impact, but we'd have to do a bit more work to check (either way each time horizon growth parameter would have the same effect if it resulted in AC happening at the same time, because all the parameters do is set the effective compute requirement for AC).

Thanks for writing this up! Excited about research taste experiments.

Is human research taste modeled correctly? Eg it seems likely to me that the 0.3% of top humans add more than 0.3%*3.7x to the “aggregate research taste” of a lab because they can set research directions. There are maybe more faithful ways to model it; all the ones Eli mentioned seemed far more complicated.

A minimal change would be to change the aggregation from mean to something else; we were going to do this but didn't get to it in time. But yeah, to do it more faithfully would I think be pretty complicated, because you have to model experiment compute budgets for each human/AI. Note also that we aren't really modeling human/AI taste complementarity.
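To make the "change the aggregation" option concrete, here is a rough sketch comparing a plain mean with two alternatives. Everything in it is an illustrative assumption rather than anything from the model: the function name, the 1000-person lab, the choice of max and power mean as alternatives, and the exponent `p`. The 0.3% and 3.7x figures are taken from the question above.

```python
import statistics


def aggregate_taste(tastes, method="mean", p=4.0):
    """Combine per-researcher taste values into one lab-level number.

    'mean' is the plain average; 'max' and 'power_mean' are hypothetical
    alternatives that put more weight on the top researchers.
    """
    if method == "mean":
        return statistics.fmean(tastes)
    if method == "max":
        return max(tastes)
    if method == "power_mean":
        return (sum(t ** p for t in tastes) / len(tastes)) ** (1.0 / p)
    raise ValueError(f"unknown method: {method}")


# Hypothetical lab: 0.3% of researchers have 3.7x taste, the rest are at 1.0.
lab = [1.0] * 997 + [3.7] * 3

for method in ("mean", "power_mean", "max"):
    print(method, round(aggregate_taste(lab, method), 4))
```

Under the mean, the top 0.3% barely move the aggregate, which is the concern raised in the question; the other two aggregations weight them more heavily.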

Or, they could coordinate better (especially with all the human ex-coders to help them), and decrease the parallelization penalties for labor and/or compute

Agree that ideally there would at least be different penalties for AIs vs. humans doing the labor.

Is modeling AI research taste as exponential in human standard deviations valid? I have no idea whether someone 9 standard deviations above the human median would be able to find 3.7^(9/3) = 50x better research ideas or not.

Note that because of limits (which weren't in your summary) the model is in practice subexponential, but exponential is generally a good approximation for the model around the human range. See here (4.2.2) for an explanation of taste limits.

Regarding whether it's a good approximation in the human range, we have some n=12 survey results on this here. Obviously take them with a huge grain of salt, but the ratio extracted from these results of (taste per SD between the 90th percentile and top researchers) to (taste per SD between 50th percentile and top) appears to be fairly close to 1: 1.01 median if assuming a population of 1000 researchers, and 0.95 median if assuming a population of 100.
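For reference, the unbounded exponential approximation from the question (3.7x per 3 SDs) can be written out as below. This sketch deliberately ignores the taste limits mentioned above, so it overstates the model's output far outside the human range; the function name is just for illustration.

```python
def taste_multiplier(sds_above_median, per_3_sd=3.7):
    """Research taste relative to the median, assuming pure exponential
    growth in standard deviations (no taste limit applied)."""
    return per_3_sd ** (sds_above_median / 3)


for sds in (0, 3, 6, 9):
    print(sds, round(taste_multiplier(sds), 1))
```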

I think revenue extrapolations seem like a useful exercise. But I think they provide much less evidence than our model.

Which revenues would you extrapolate? You get different results for e.g. doing OpenAI vs. Nvidia.

Also (most importantly) are you saying we should assume that log(revenue) is a straight line?

- If so, that seems like a really bad assumption given that usually startup revenue growth rates slow down a lot as revenue increases, so that should be the baseline assumption.

- If not, how else do we predict how the revenue trend will change without thinking about AI capabilities? We could look at base rates for startups that have this level of revenue growth early on, but then obviously none of those revenue trends have ever grown until world GDP, so that would say AGI never.

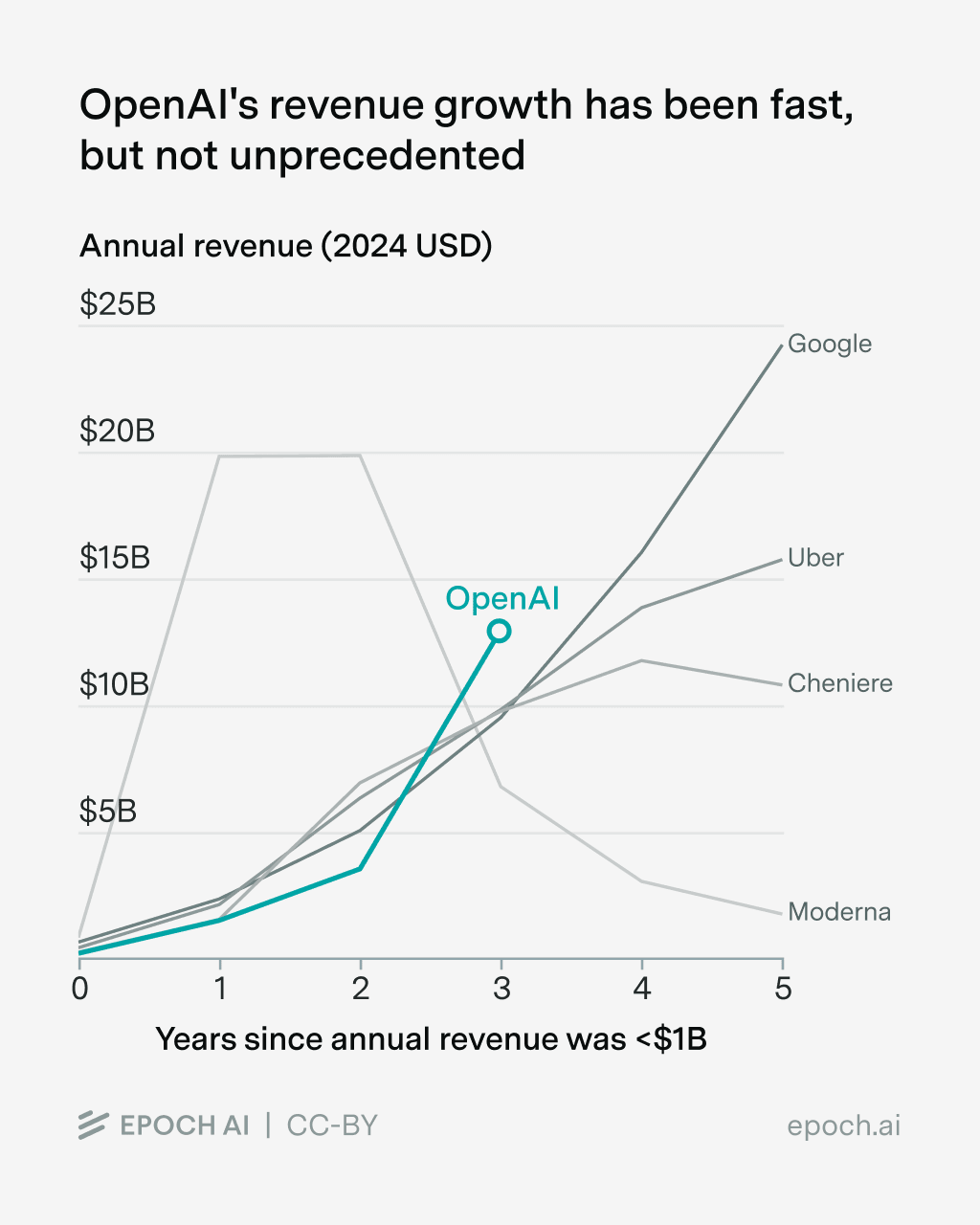

edited to add: relevant graph from https://epoch.ai/gradient-updates/openai-is-projecting-unprecedented-revenue-growth:

much more clear threshold for AGI

Also, I disagree with this; I think time horizon is about as good as revenue on this dimension, maybe a bit better. Both are hugely uncertain though of course.

I agree with habryka that the current speedup is probably substantially less than 3x.

However, worth keeping in mind that if it were 3x for engineering the overall AI progress speedup would be substantially lower, due to (a) non-engineering activities having a lower speedup, (b) compute bottlenecks, (c) half of the default pace of progress coming from compute.
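A toy illustration of points (a) and (c), not the model's actual equations. It assumes an Amdahl's-law-style split between engineering and non-engineering work, with a made-up engineering share, and an additive 50/50 split between compute-driven and software-driven progress. Compute bottlenecks (b) are left out, so this if anything overstates the speedup.

```python
def software_speedup(engineering_speedup, engineering_share):
    """Amdahl-style speedup when only the engineering share of the work
    is accelerated (engineering_share is a hypothetical input)."""
    return 1.0 / ((1.0 - engineering_share) + engineering_share / engineering_speedup)


def overall_progress_speedup(sw_speedup, compute_share=0.5):
    """Overall progress rate if compute_share of default progress comes
    from compute growth, which is assumed not to be sped up."""
    return compute_share + (1.0 - compute_share) * sw_speedup


# Even if engineering were all of software R&D, 3x engineering gives 2x overall.
print(overall_progress_speedup(software_speedup(3.0, 1.0)))

# With a hypothetical 50% engineering share, the overall number is lower still.
print(overall_progress_speedup(software_speedup(3.0, 0.5)))
```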

My null hypothesis would be that programmer productivity is increasing exponentially and has been for ~2 years, and this is already being taken into account in the curves, and without this effect you would see a slower (though imo not massively slower) exponential

Exponential growth alone doesn't imply a significant effect here, if the current absolute speedup is low.

Not representative of motivations for all people for all types of evals, but https://www.openphilanthropy.org/rfp-llm-benchmarks/, https://www.lesswrong.com/posts/7qGxm2mgafEbtYHBf/survey-on-the-acceleration-risks-of-our-new-rfps-to-study, https://docs.google.com/document/d/1UwiHYIxgDFnl_ydeuUq0gYOqvzdbNiDpjZ39FEgUAuQ/edit, and some posts in https://www.lesswrong.com/tag/ai-evaluations seem relevant.

Do you think that cyber professionals would take multiple hours to do the tasks with 20-40 min first-solve times? I'm intuitively skeptical.

Yes, that would be my guess, medium confidence.

One component of my skepticism is that someone told me that the participants in these competitions are less capable than actual cyber professionals, because the actual professionals have better things to do than enter competitions. I have no idea how big that selection effect is, but it at least provides some countervailing force against the selection effect you're describing.

I'm skeptical of your skepticism. Not knowing basically anything about the CTF scene but using the competitive programming scene as an example, I think the median competitor is much more capable than the median software engineering professional, not less. People like competing at things they're good at.

I believe Cybench first solve times are based on the fastest top professional teams, rather than typical individual CTF competitors or cyber employees, for which the time to complete would probably be much higher (especially for the latter).

I'm mainly arguing against public AI safety advocacy work, which was recently upvoted highly on the EA Forum.

I had the impression that it was more than just that, given the line: "In light of recent news, it is worth comprehensively re-evaluating which sub-problems of AI risk are likely to be solved without further intervention from the AI risk community (e.g. perhaps deceptive alignment), and which ones will require more attention." and the further attention devoted to deceptive alignment.

I appreciate these predictions, but I am not as interested in predicting personal or public opinions. I'm more interested in predicting regulatory stringency, quality, and scope.

If you have any you think faithfully represent a possible disagreement between us go ahead. I personally feel it will be very hard to operationalize objective stuff about policies in a satisfying way. For example, a big issue with the market you've made is that it is about what will happen in the world, not what will happen without intervention from AI x-risk people. Furthermore it has all the usual issues with forecasting on complex things 12 years in advance, regarding the extent to which it operationalizes any disagreement well (I've bet yes on it, but think it's likely that evaluating and fixing deceptive alignment will remain mostly unsolved in 2035 conditional on no superintelligence, especially if there were no intervention from x-risk people).

I have three things to say here:

Thanks for clarifying.

Several months ago I proposed general, long-term value drift as a problem that I think will be hard to solve by default. I currently think that value drift is a "hard bit" of the problem that we do not appear to be close to seriously addressing, perhaps because people expect easier problems won't be solved either without heroic effort. I'm also sympathetic to Dan Hendrycks' arguments about AI evolution. I will add these points to the post.

Don't have a strong opinion here, but intuitively feels like it would be hard to find tractable angles for work on this now.

I mostly think people should think harder about what the hard parts of AI risk are in the first place. It would not be surprising if the "hard bits" will be things that we've barely thought about, or are hard to perceive as major problems, since their relative hiddenness would be a strong reason to believe that they will not be solved by default.

Maybe. In general, I'm excited about people who have the talent for it to think about previously neglected angles.

The problem of "make sure policies are well-targeted, informed by the best evidence, and mindful of social/political difficulties" seems like a hard problem that societies have frequently failed to get right historically, and the relative value of solving this problem seems to get higher as you become more optimistic about the technical problems being solved.

I agree this is important and it was in your post but it seems like a decent description of what the majority of AI x-risk governance people are already working on, or at least not obviously a bad one. This is the phrase that I was hoping would get made more concrete.

I want to emphasize that the current policies were crafted in an environment in which AI still has a tiny impact on the world. My expectation is that policies will get much stricter as AI becomes a larger part of our life. I am not making the claim that current policies are sufficient; instead I am making a claim about the trajectory, i.e. how well we should expect society to respond at a time, given the evidence and level of AI capabilities at that time.

I understand this (sorry if I wasn't clear), but I think it's less obvious than you do that this trend will continue without intervention from AI x-risk people. I agree with other commenters that AI x-risk people should get a lot of the credit for the recent push. I also provided example reasons that the trend might not continue smoothly or even reverse in my point (3).

There might also be disagreements around:

- Not sharing your high confidence in slow, continuous takeoff.

- The strictness of regulation needed to make a dent in AI risk, e.g. if substantial international coordination is required it seems optimistic to me to assume that the trajectory will by default lead to this.

- The value in things getting done faster than they would have done otherwise, even if they would have been done either way. This indirectly provides more time to iterate and get to better, more nuanced policy.

I believe that current evidence supports my interpretation of our general trajectory, but I'm happy to hear someone explain why they disagree and highlight concrete predictions that could serve to operationalize this disagreement.

Operationalizing disagreements well is hard and time-consuming especially when we're betting on "how things would go without intervention from a community that is intervening a lot", but a few very rough forecasts, all conditional on no TAI before resolve date:

- 75%: In Jan 2028, less than 10% of Americans will consider AI the most important problem.

- 60%: In Jan 2030, Evan Hubinger will believe that if x-risk-motivated people had not worked on deceptive alignment at all, risk from deceptive alignment would be at least 50% higher, compared to a baseline of no work at all (i.e. if risk is 5% and it would be 9% with no work from anyone, it needs to have been >7% if no work from x-risk people had been done to resolve yes).

- 35%: In Jan 2028, conditional on a Republican President being elected in 2024, regulations on AI in the US will be generally less stringent than they were when the previous president left office.
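The resolution arithmetic in the parenthetical of the 60% forecast can be spelled out as follows. This is one reading of that example: the counterfactual risk without x-risk-motivated work has to cover at least half of the gap between actual risk and the no-work-from-anyone baseline. The function name is made up for illustration.

```python
def resolution_threshold(actual_risk, no_work_risk, fraction=0.5):
    """Counterfactual risk (without x-risk-motivated work) needed to
    resolve yes, as a fraction of the gap up to the no-work baseline.
    Inputs and output are in percentage points."""
    return actual_risk + fraction * (no_work_risk - actual_risk)


# Example from the forecast: actual risk 5%, no-work baseline 9%.
print(resolution_threshold(5.0, 9.0))
```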

Thanks for the comments! Besides the below, I'm curious what your overall views are. What does your distribution for AC look like?

I think this is basically addressed in our uncertainty over the present doubling time, at least that's how I'd think of it for myself. Note that my median present doubling time estimate of 5.5 months is slower than the potentially accelerated recent time horizon trend.

Our model reflects that: with my median parameters, the current software R&D uplift is 1.1x.

Our Automated Coder has efficiency definitions built in, so you wouldn't put it that way, you'd instead say you get an Automated Coder in 2035 and a very expensive replication of AC abilities in 2031. I personally think that a large majority of the relevant recent gains have not come from inference scaling, but if I did think that a lot of it had been, I would adjust my present doubling time to be slower.

Here are some portions of a rough Slack message I wrote recently on this topic:

[end Slack message]

Furthermore, it seems that once capabilities can be reached very expensively, they pretty reliably get cheaper very quickly. See here for my research into this or just skip to Epoch's data which I used as input to my parameter estimate; happy to answer questions, sorry that my explanation is pretty rough.

I think I already addressed at least part of this in my answer to (2).

I don't understand this. What exactly do you want us to address? Why should we adjust our predictions because of this? We do explicitly say we are assuming a hypothetical "METR-HRS-Extended" benchmark in our explanation. Ok, maybe you are saying that it will be hard to create long-horizon tasks, which will slow down the trend. I would say that I adjust for this when I make my all-things-considered AC prediction longer due to the potential for data bottlenecks, and also to some extent by making the doubling difficulty growth factor higher than it otherwise would be.
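A toy sketch of why a higher doubling difficulty growth factor pushes AC later. It assumes, as a calendar-time simplification of the real model (which works through an effective compute requirement), that each successive time horizon doubling takes `growth_factor` times as long as the previous one. The function name and the choice of 10 doublings are illustrative only.

```python
def months_for_doublings(present_doubling_months, growth_factor, n_doublings):
    """Total months for n_doublings, if each doubling takes growth_factor
    times as long as the one before it (toy simplification)."""
    return sum(
        present_doubling_months * growth_factor ** k
        for k in range(n_doublings)
    )


# Same present doubling time (5.5 months), different growth factors.
for factor in (0.90, 0.95, 1.00):
    total = months_for_doublings(5.5, factor, 10)
    print(f"factor {factor:.2f}: {total:.1f} months for 10 doublings")
```

In this simplification a factor of 1.00 gives a plain exponential trend, and values below 1.00 give a superexponential one, so raising the factor toward 1.00 lengthens the time needed for a fixed number of doublings.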

Yeah for the parts I didn't explicitly respond to, my response is mainly that it seems like this sort of inside view reasoning is valuable but overall I give more weight to trend extrapolation, and historically simple trend extrapolations like "when will we have the same ops/second as the human brain" have performed pretty well, as we discuss in our blog post.