Forecasting future events is important for policy and decision-making. In this work, we study whether language models (LMs) can forecast at the level of competitive human forecasters. Towards this goal, we develop a retrieval-augmented LM system designed to automatically search for relevant information, generate forecasts, and aggregate predictions. To facilitate our study, we collect a large dataset of questions from competitive forecasting platforms. Under a test set published after the knowledge cut-offs of our LMs, we evaluate the end-to-end performance of our system against the aggregates of human forecasts. On average, the system nears the crowd aggregate of competitive forecasters and in some settings, surpasses it. Our work suggests that using LMs to forecast the future could provide accurate predictions at scale and help inform institutional decision-making.

For the safety motivations behind automated forecasting, see Unsolved Problems in ML Safety (2021). In the following, we summarize our main research findings.

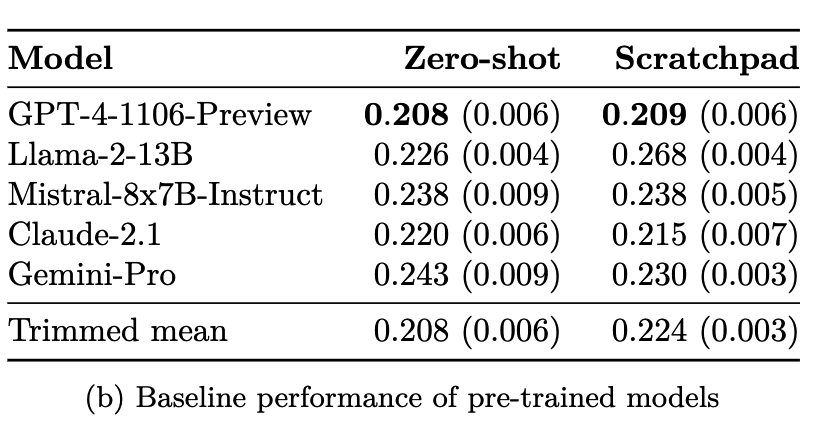

Current LMs are not naturally good at forecasting

First, we find that LMs are not naturally good at forecasting when evaluated zero-shot (with no fine-tuning and no retrieval). On 914 test questions that were opened after June 1, 2023 (post the knowledge cut-offs of these models), most LMs get near chance performance.

Here, all questions are binary, so random guessing (always predicting 0.5) gives a Brier score of 0.25. Averaging across all community predictions over time, the human crowd gets 0.149. We present the score of the best model of each series. Only the GPT-4 and Claude-2 series beat random guessing (by a margin of >0.02), though they remain far from the human aggregate.
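To make the 0.25 baseline concrete, here is a minimal sketch of the Brier score for binary questions. The outcomes and forecasts are hypothetical values chosen for illustration, not data from the paper.

```python
def brier_score(forecasts, outcomes):
    """Mean squared error between probabilities and binary outcomes (0 or 1)."""
    if len(forecasts) != len(outcomes):
        raise ValueError("forecasts and outcomes must have the same length")
    return sum((p - o) ** 2 for p, o in zip(forecasts, outcomes)) / len(forecasts)


# Hypothetical resolutions, purely for illustration.
outcomes = [1, 0, 0, 1]

# Always answering 0.5 scores 0.25 no matter how the questions resolve,
# because (0.5 - 0)^2 == (0.5 - 1)^2 == 0.25.
uninformed = brier_score([0.5] * len(outcomes), outcomes)

# A forecaster who leans the right way on each question scores lower (better).
informed = brier_score([0.9, 0.2, 0.1, 0.6], outcomes)

print(uninformed, informed)
```

Lower is better: 0 is a perfect score, and anything above 0.25 is worse than never committing to an answer.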

System building

Towards better automated forecasting, we build and optimize a retrieval-augmented LM pipeline for this task.

It functions in 3 steps, mimicking the traditional forecasting procedure:

1. Retrieval, which gathers relevant information from news sources. Here, we use an LM to generate search queries given a question, use these queries to search a news corpus for articles, filter out irrelevant articles, and summarize the remaining ones.

2. Reasoning, which weighs the available data and makes a forecast. Here, we prompt base and fine-tuned GPT-4 models to generate forecasts and (verbal) reasoning.

3. Aggregation, which ensembles individual forecasts into an aggregated prediction. We use a trimmed mean to aggregate all the predictions.
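The aggregation step can be sketched as follows. This summary does not spell out the exact trimming rule, so the sketch assumes a plain symmetric trimmed mean; the function name, the `trim_fraction` default, and the sample forecasts are all hypothetical.

```python
def trimmed_mean(forecasts, trim_fraction=0.2):
    """Average the forecasts after dropping the lowest and highest values.

    trim_fraction is the share of forecasts removed from EACH end. It is an
    illustrative default, not a value taken from the paper.
    """
    if not forecasts:
        raise ValueError("need at least one forecast")
    if not 0 <= trim_fraction < 0.5:
        raise ValueError("trim_fraction must be in [0, 0.5)")
    ordered = sorted(forecasts)
    k = int(len(ordered) * trim_fraction)  # number of forecasts dropped per side
    kept = ordered[k:len(ordered) - k]
    return sum(kept) / len(kept)


# Five hypothetical forecasts for one question; 0.95 is an outlier.
individual = [0.30, 0.35, 0.40, 0.45, 0.95]
aggregate = trimmed_mean(individual)  # drops 0.30 and 0.95, averages the rest

print(aggregate)
```

Compared with a plain mean, trimming keeps a single extreme forecast from dragging the aggregate, which matters when individual LM samples occasionally produce outliers.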

We optimize the system’s hyperparameters and apply a self-supervised approach to fine-tune a base GPT-4 to obtain the fine-tuned LM. See Section 5 of the full paper for details.

Data and models

We use GPT-4-1106 and GPT-3.5 in our system, whose knowledge cut-offs are April 2023 and September 2021, respectively.

To optimize and evaluate the system, we collect a dataset of forecasting questions from 5 competitive forecasting platforms, including Metaculus, Good Judgment Open, INFER, Polymarket, and Manifold.

The test set consists only of questions published after June 1st, 2023. Crucially, this is after the knowledge cut-off date of GPT-4 and GPT-3.5, preventing leakage from pre-training.

The train and validation sets contain questions published before June 1st, 2023, and are used for hyperparameter search and for fine-tuning a GPT-4 base model.
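A temporal split of this kind might look like the sketch below. The record layout (`id`, `published`) is hypothetical, and assigning questions published exactly on June 1st to the test side is an assumption, since the text only says "before" and "after".

```python
from datetime import date

TEST_START = date(2023, 6, 1)  # split date stated in the text


def split_by_publish_date(questions, test_start=TEST_START):
    """Partition questions into (train_and_validation, test) by publish date.

    Assumption: a question published exactly on test_start goes to the test
    side; the text does not specify how the boundary day is handled.
    """
    earlier = [q for q in questions if q["published"] < test_start]
    later = [q for q in questions if q["published"] >= test_start]
    return earlier, later


# Hypothetical question records, purely for illustration.
questions = [
    {"id": "q1", "published": date(2022, 11, 15)},
    {"id": "q2", "published": date(2023, 5, 31)},
    {"id": "q3", "published": date(2023, 8, 2)},
]
train_val, test = split_by_publish_date(questions)

print([q["id"] for q in train_val], [q["id"] for q in test])
```

Splitting on publish date rather than at random is what keeps the test questions out of reach of the models' pre-training data.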

Evaluation results

For each question, we perform information retrieval at up to 5 different dates during the question’s time span and evaluate our system against the community aggregate at each date. (This simulates “forecasting” on past, resolved questions with pre-trained models, a methodology first introduced by Zou et al., 2022.)

Unconditional setting

Averaging over the test set of 914 questions and all retrieval points, our system gets an average Brier score of 0.179 (crowd: 0.149) and an accuracy of 71.5% (crowd: 77.0%). This significantly improves upon the zero-shot baseline evaluation above.

Selective setting

Through a study on the validation set, we identify several conditions under which our system performs best relative to the crowd. These also hold on the test set:

1. On questions where the crowd prediction falls between .3 and .7 (i.e., when humans are quite uncertain), it gets a Brier score of .238 (crowd aggregate: .240).

2. On the earlier retrieval dates (1, 2, and 3), it gets a Brier score of .185 (crowd aggregate: .161).

3. When the retrieval system provides at least 5 relevant articles, it gets a Brier score of .175 (crowd aggregate: .143).

4. Under all three conditions, our system attains a Brier score of .240 (crowd aggregate: .247).

In settings 1 and 4, our system actually beats the human crowd.

More interestingly, by taking a (weighted) average of our system's and the crowd’s predictions, we always get a better score than either alone, even unconditionally! Conceptually, this shows that our system can be used to complement human forecasters.
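The averaging idea can be illustrated with a toy example. The forecasts, outcomes, and the equal 0.5 weight below are hypothetical and constructed so that the two forecasters err on different questions; they are not the weights or data used in the paper.

```python
def brier_score(forecasts, outcomes):
    """Mean squared error between probabilities and binary outcomes (0 or 1)."""
    return sum((p - o) ** 2 for p, o in zip(forecasts, outcomes)) / len(forecasts)


def blend(system, crowd, system_weight=0.5):
    """Per-question weighted average of two sets of probabilities."""
    return [system_weight * s + (1 - system_weight) * c
            for s, c in zip(system, crowd)]


# Hypothetical data: each forecaster is weak on a different pair of questions.
outcomes = [1, 0, 1, 0]
system = [0.9, 0.4, 0.5, 0.2]
crowd = [0.6, 0.1, 0.8, 0.5]

system_score = brier_score(system, outcomes)
crowd_score = brier_score(crowd, outcomes)
blended_score = brier_score(blend(system, crowd), outcomes)

print(system_score, crowd_score, blended_score)
```

Because squared error is convex, a blend can never score worse than the same weighted average of the two individual scores. It beats both only when their errors are at least partly complementary, as they are in this constructed example.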

Calibration

Finally, we compare our system's calibration against the human crowd. On the test set (figure (c) below), our system is nearly as well calibrated, with RMS calibration error .042 (human crowd: .038).

Interestingly, this is not the case in the baseline evaluation, where the base models are not well calibrated under the zero-shot setting (figure (a) below). Our system, through fine-tuning and ensembling, improves the calibration of the base models, without undergoing specific training for calibration.
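For readers unfamiliar with the metric, one common way to compute an RMS calibration error is sketched below. It assumes equal-width probability bins; the binning scheme, the function name, and the sample data are assumptions for illustration and may differ from the paper's exact procedure.

```python
import math


def rms_calibration_error(forecasts, outcomes, n_bins=10):
    """Root of the count-weighted mean squared gap, per bin, between the
    average forecast and the observed frequency of positive outcomes."""
    bins = [[] for _ in range(n_bins)]
    for p, o in zip(forecasts, outcomes):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 falls in the last bin
        bins[idx].append((p, o))

    total = len(forecasts)
    squared_gap = 0.0
    for members in bins:
        if not members:
            continue  # empty bins contribute nothing
        mean_p = sum(p for p, _ in members) / len(members)
        freq = sum(o for _, o in members) / len(members)
        squared_gap += len(members) / total * (mean_p - freq) ** 2
    return math.sqrt(squared_gap)


# Hypothetical well-calibrated forecaster: 20% forecasts resolve yes 1 time in 5,
# 80% forecasts resolve yes 4 times in 5.
calibrated = rms_calibration_error(
    [0.2] * 5 + [0.8] * 5,
    [1, 0, 0, 0, 0] + [1, 1, 1, 1, 0],
)

# Hypothetical overconfident forecaster: says 90% but is right half the time.
overconfident = rms_calibration_error([0.9] * 4, [1, 1, 0, 0])

print(calibrated, overconfident)
```

A score near 0 means stated probabilities match observed frequencies. Calibration is separate from accuracy: a forecaster who always states the base rate is perfectly calibrated but uninformative.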

Future work

Our results suggest that in the near future, LM-based systems may be able to generate accurate forecasts at the level of competitive human forecasters.

In Section 7 of the paper, we discuss some directions that we find promising towards this goal, including iteratively applying our fine-tuning method, gathering more forecasting data from the wild for better training, and more.

TL;DR: We present a retrieval-augmented LM system that nears the human crowd performance on judgemental forecasting.

Paper: https://arxiv.org/abs/2402.18563 (Danny Halawi*, Fred Zhang*, Chen Yueh-Han*, and Jacob Steinhardt)

Twitter thread: https://twitter.com/JacobSteinhardt/status/1763243868353622089
