Forecasting & Prediction

| Editor | Version | Date | Edit |
|---|---|---|---|
| Nathan Young | v1.30.1 | Jan 20th 2024 | Move images to CDN |
| Nathan Young | v1.30.0 | Jan 20th 2024 | (+1062) |
| niplav | v1.29.1 | Dec 5th 2023 | Move images to CDN |
| niplav | v1.29.0 | Dec 5th 2023 | (+7/-18) |
| niplav | v1.28.1 | Dec 5th 2023 | Move images to CDN |
| niplav | v1.28.0 | Dec 5th 2023 | (+1115/-2169) |
| Yoav Ravid | v1.27.1 | Jul 19th 2023 | Move images to CDN |
| Yoav Ravid | v1.27.0 | Jul 19th 2023 | (+29/-30) |
| Nathan Young | v1.26.1 | Jul 11th 2023 | Move images to CDN |
| Nathan Young | v1.26.0 | Jul 11th 2023 | (+2471) |

Forecasting, or predicting, is the act of making statements about what will happen in the future (and in some cases, the past) and then scoring those predictions. Posts marked with this tag are for discussion of the practice, skill, and methodology of forecasting. Posts exclusively containing object-level lists of forecasts and predictions are in Forecasts. Related: Betting.

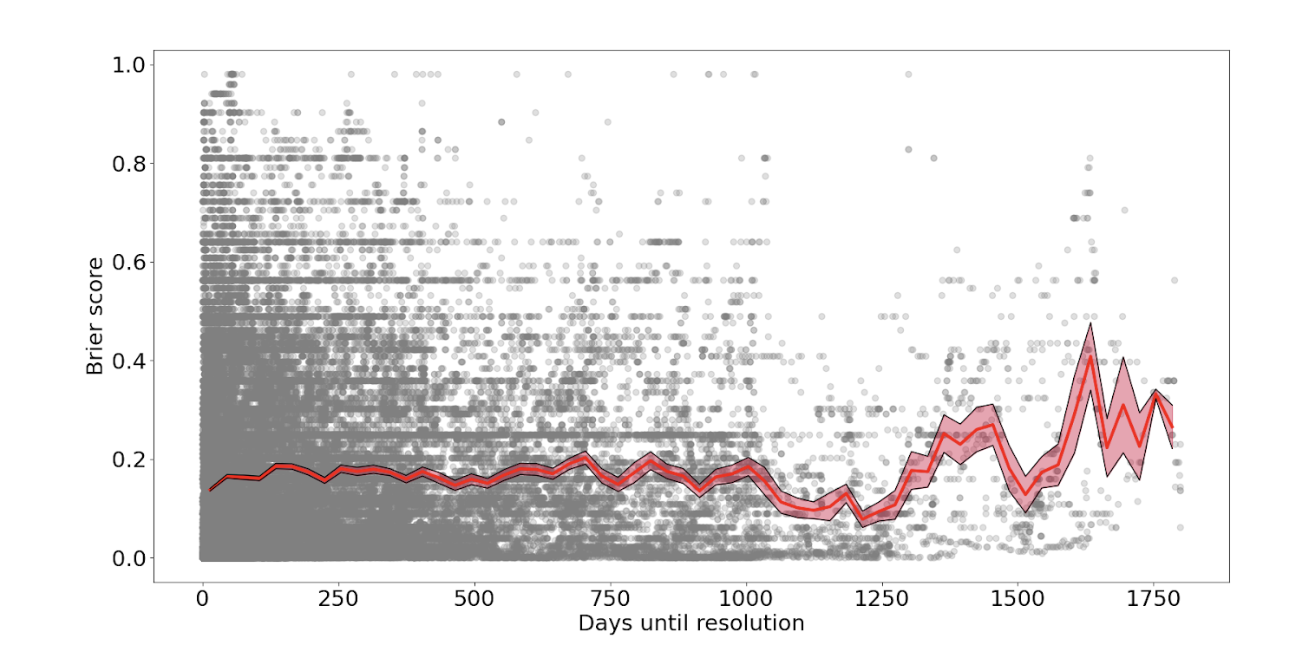

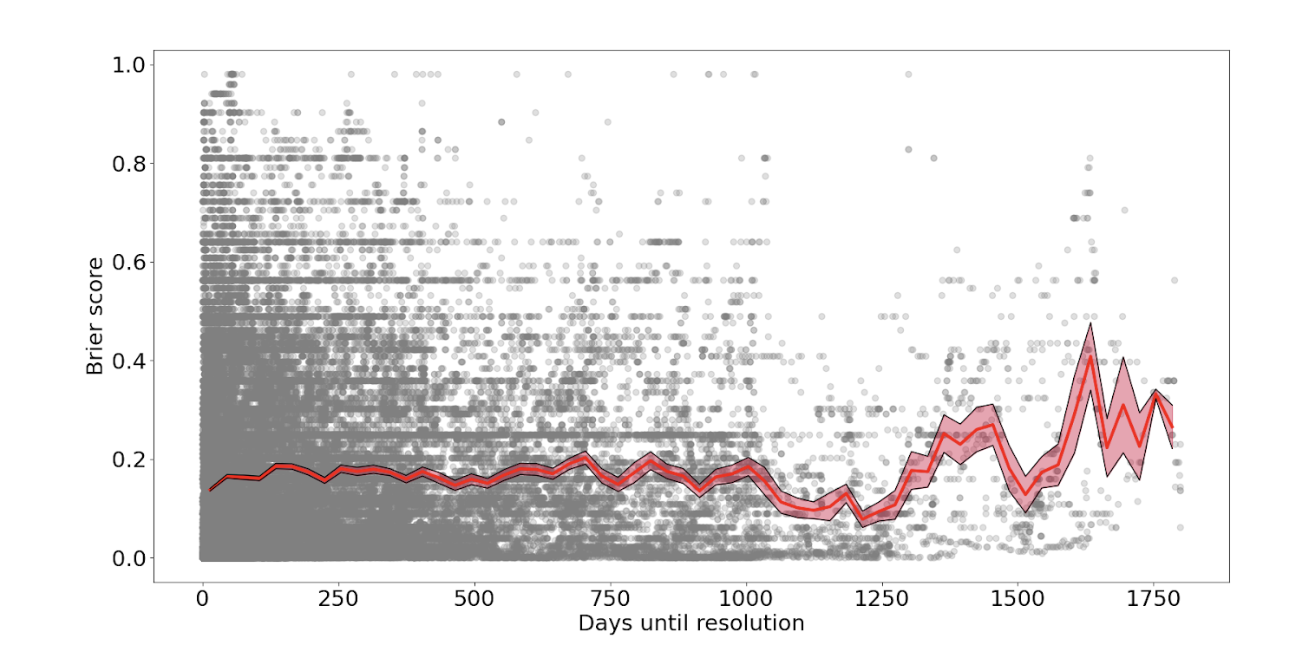

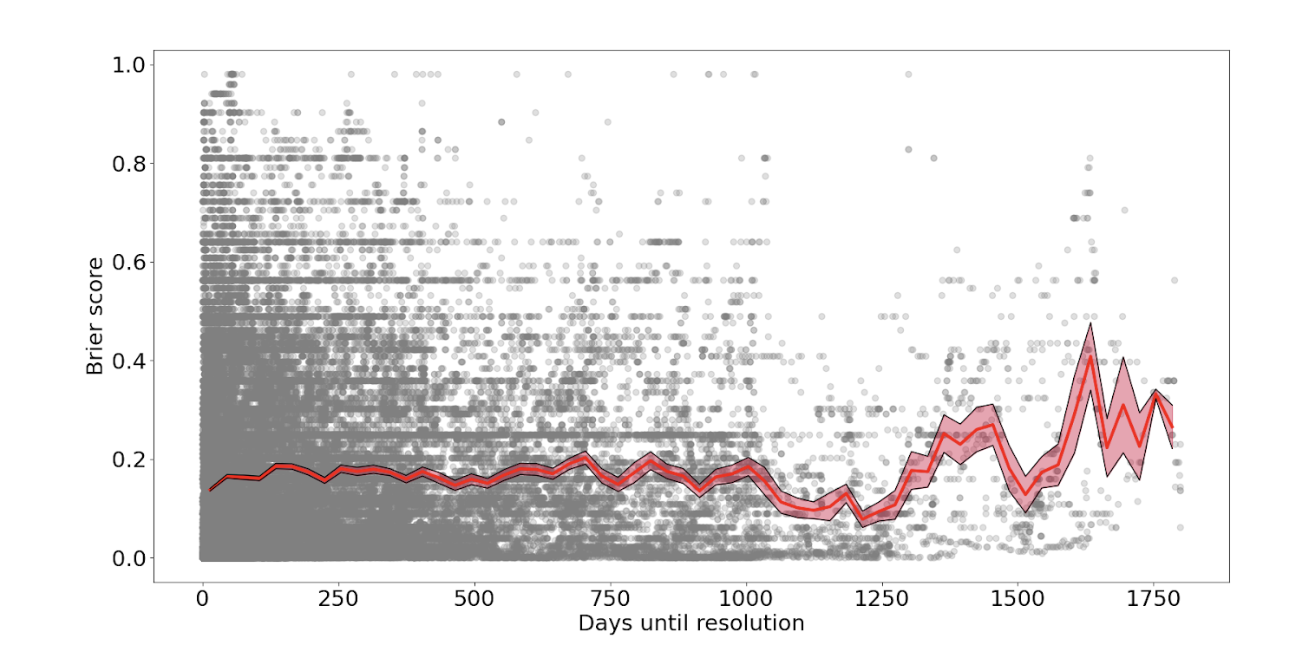

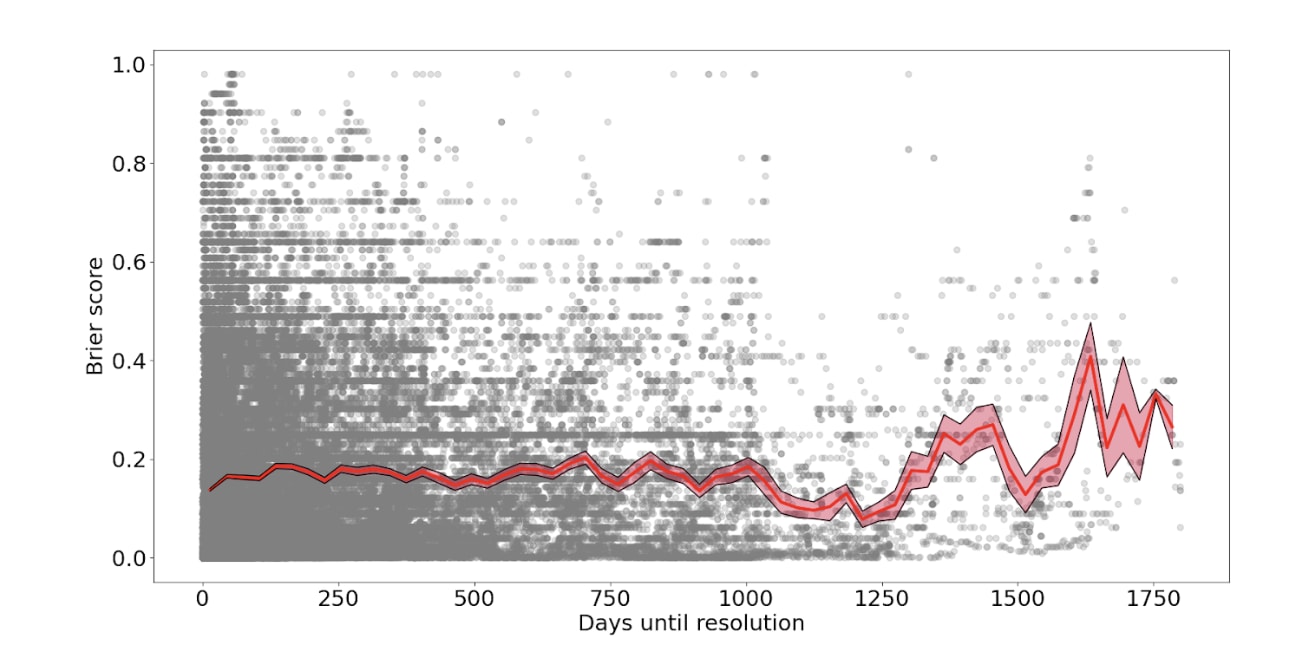

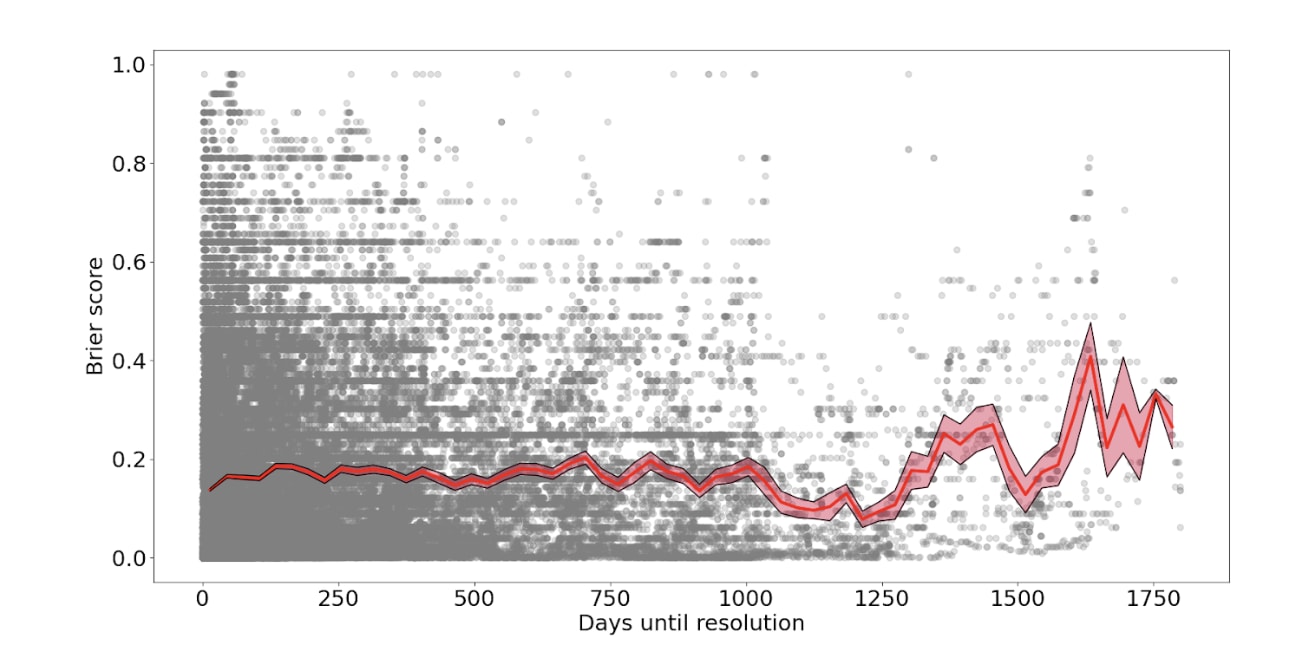

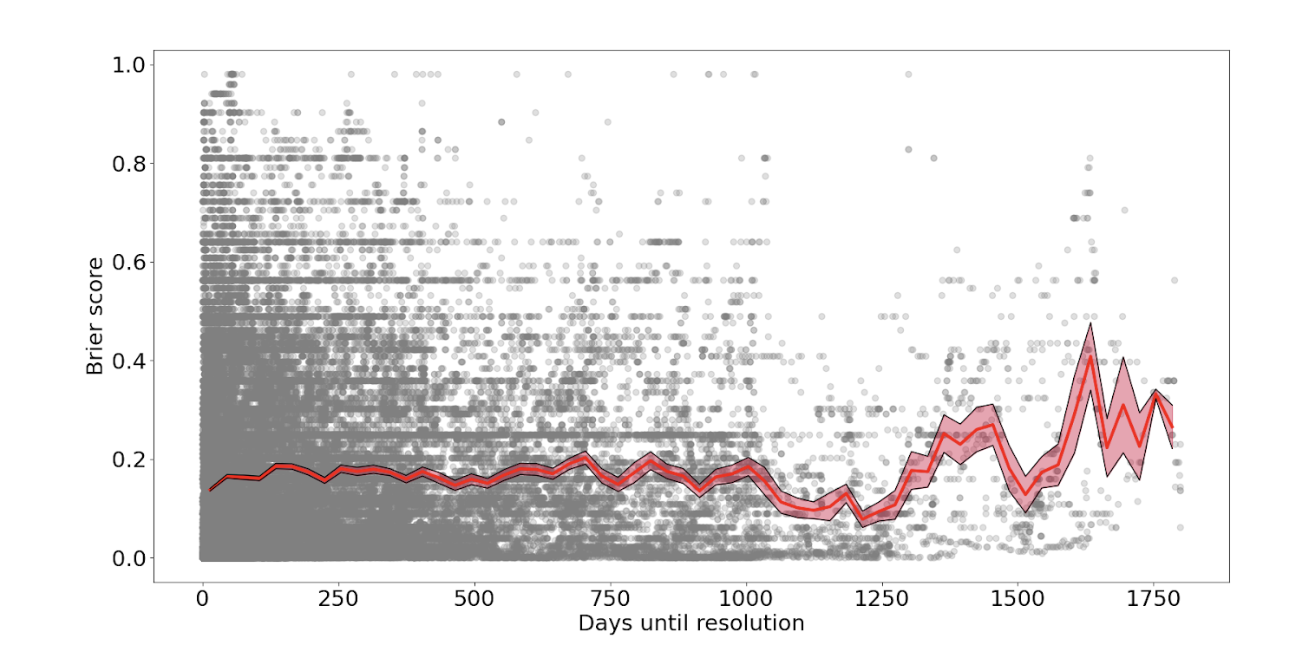

Forecasting beyond 3 years is not good: a Brier score above .25 is worse than random. Many questions are too specific and too far away for forecasting to be useful on them (Dillon 2020).
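To make the .25 threshold concrete, here is a minimal Python sketch. It assumes the .25 refers to a Brier score on binary yes/no questions and that "random" means always answering 50%. The Brier score is the mean squared difference between the stated probability and the outcome (1 if it happened, 0 if not), so lower is better, and a constant 50% forecast scores exactly 0.25 whatever happens. The function name `brier_score` and the example data are illustrative only.

```python
def brier_score(forecasts, outcomes):
    """Mean squared error between stated probabilities and 0/1 outcomes.

    forecasts: probabilities in [0, 1] that each event happens
    outcomes:  1 if the event happened, 0 if it did not
    Lower is better: 0.0 is perfect, 1.0 is maximally wrong.
    """
    if not forecasts or len(forecasts) != len(outcomes):
        raise ValueError("need two non-empty lists of the same length")
    return sum((p - o) ** 2 for p, o in zip(forecasts, outcomes)) / len(forecasts)


outcomes = [1, 0, 0, 1, 1]  # made-up resolutions for five questions

# Always saying 50%: each question contributes (0.5 - o)^2 = 0.25,
# so the average is 0.25 regardless of the outcomes.
print(brier_score([0.5] * len(outcomes), outcomes))

# Confident and mostly wrong: scores worse than the 50% baseline.
print(brier_score([0.1, 0.9, 0.8, 0.2, 0.3], outcomes))
```

Under these assumptions, any score above 0.25 means you would have done better by answering 50% on every question, which is the sense in which forecasts past that line are "worse than random".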

- Track your personal forecasts. Get a notebook, a spreadsheet, or fatebook.io, and write down what you think will happen along with a percentage or odds. Follow up on it later.

- Bet fake money on manifold.markets. It's still pretty addictive, so if you have a gambling problem, please avoid it.

- Take part in the monthly estimation game to test your ability to estimate quantities. This ability is correlated with your ability to navigate the world well.

- Forecast on metaculus.com. Questions there are often pretty focused on geopolitics.

- Read Superforecasting by Philip Tetlock, if books are a way you learn well.
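If you track forecasts in a notebook or spreadsheet, "follow up on it later" can include a calibration check: of the things you said were about 70% likely, roughly 70% should have happened. Below is a hypothetical Python sketch of that check. The `Forecast` record and `calibration_table` function are made up for illustration (this is not fatebook.io's or any other site's API), and grouping probabilities to the nearest 10% is an arbitrary choice.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Forecast:
    question: str
    probability: float              # stated chance that the answer is "yes", 0..1
    outcome: Optional[bool] = None  # filled in when you follow up later


def calibration_table(log):
    """Return (bucket, count, fraction that came true) for each bucket,
    where a bucket is the stated probability rounded to the nearest 10%."""
    buckets = defaultdict(list)
    for f in log:
        if f.outcome is None:
            continue  # still open: skip until you follow up
        buckets[round(f.probability * 10)].append(f.outcome)
    return [
        (key / 10, len(results), sum(results) / len(results))
        for key, results in sorted(buckets.items())
    ]


log = [
    Forecast("Will it rain tomorrow?", 0.7, True),
    Forecast("Will the train be late?", 0.7, False),
    Forecast("Will I finish the report this week?", 0.9, True),
    Forecast("Will the package arrive by Friday?", 0.3),  # not resolved yet
]
for bucket, n, freq in calibration_table(log):
    print(f"said ~{bucket:.0%}: {freq:.0%} came true (n={n})")
```

With only a handful of resolved forecasts per bucket the hit rates are very noisy, so it is worth collecting a few dozen before reading much into them.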

Some updates for the community

These are intended as consensus updates, but the LessWrong wiki doesn't really have a process for reaching consensus, so feel free to edit.

- Forecasting tells you information about forecasters, not about the future. As Michael Story writes here: "Most of the useful information you produce [in forecasting] is about the people, not the world outside... The book Superforecasting was all about the forecasters themselves, not the future we spent years of effort predicting as part of the study, which I haven’t heard anyone reference other than as anecdotes about the forecasters." This suggests we should trust forecasters more, rather than trusting their individual forecasts, which are often too specific to give a clear picture of the future.

- It is difficult to forecast things policymakers actually care about. Forecasting sites forecast things like "Will Putin leave power?" rather than "If Putin leaves power between July 18th and the end of August, how will that affect the likelihood of a rogue nuclear warhead?" Even that question probably isn't specific enough to be useful, since it doesn't forecast specific policy outcomes. And if it were, decision makers would have to trust the results, which they currently largely don't.

- Decision makers largely don't trust forecasts. Even if you had a perfect set of 1,000 forecasts that gave policy recommendations, decision makers would need to want to act on them. That they don't is a significant bottleneck, even in the magical world where such a set exists.

- Forecasting beyond 3 years is not good: a Brier score above .25 is worse than random. Many questions are too specific and too far away for forecasting to be useful on them.

- Forecasting may or may not be overrated in rationalism/EA. It's easy to feel as if it is, but other than the overlap with Manifold or Metaculus, I'd struggle to give evidence for that. There aren't many forecasts shown on LessWrong, and many top LessWrong users don't have clear forecasting track records. In our actions, it seems we are not that pro-forecasting.

Forecasting Research


Forecasting allows individuals and institutions to test their internal models of reality. A forecaster with a good track record in an area can have more confidence in their future predictions, and hence their actions, in that area. Organisations whose decision makers have good track records can likewise be more confident in their choices.

Crucially, forecasting is a tool to test decision making, rather than a tool for making good decisions. If your decision makers turn out to be poor forecasters, that is a bad sign, but if your decision-making process doesn't involve forecasting, that is not necessarily a bad sign: it's not clear that it should.


Forecasters on Twitter