joshuaclymer.com

Comments

Thanks, this is good feedback!

Addressing the disagreements:

The level of practicality you assign to some approaches is just insanely high. Neither modeling generalization nor externalized reasoning, and certainly not testbeds seem to "strongly practical" (defined as "Applies to arguments that do not require fundamental research advances, but might require"). The only one that is justifiably "strongly practical" to me is "absence of precursor abilities."

Externalized reasoning: just a capability argument, no?

Testbeds: I think the best testbed ideas rn just require training a bunch of models to have particular policies, which seems pretty straightforward. I think these are good enough to make a moderate-strength argument (which is the bar where practicality is evaluated).

Modeling generalization: I definitely think that versions of this aren't very practical, but e.g. looking at efficiency inductive biases is pretty straightforward -- and this is sufficient to make a moderate strength not-alignment-faking argument.

Strong practicality ~refers to "does there exist an argument of this type of moderate strength that can be made without fundamental research." So, lots of arguments of these types will still require fundamental research, but if some don't, then that means they are strongly practical.

I disagree with putting testbeds and amplification in trustworthiness (at least not as strong or even medium practicality), I think they usually don't help to know if AIs can be trusted (and in particular they don't prevent any forms of alignment faking).

I agree that amplification arguments do not help to rule out alignment faking, but testbeds could help. I've read your post on protocol evaluations vs control. If I remember correctly, the main objection you had to testbeds ruling out alignment faking is that the testbed models could intentionally throw off the results. I don't think this is a knock-down problem. For example, consider a testbed where models have been trained to defect if they are confident they've been deployed to the real internet. Then, developers could try to tell these models from benign ones without being able to access the real internet. It is straightforward in this case to verify that the behavior of the testbed models is consistent with their intended policies (nice when not on the internet, and otherwise bad). So, the only thing the models can do to throw off the test is to change their internal states (e.g. think in simple ways when they know they could otherwise obfuscate their reasoning by thinking in convoluted ways).

"Claim 4: AI systems are not faking alignment" is just extremely hard to assess. I think that the focus of trustworthiness assessment on "Claim 3: All enumerated causes of correlated infractions are unlikely aside from alignment faking." is somewhat unwarranted.

I agree claim 4 is hard to assess. I'm not sure what you mean by 'unwarranted' so I can't comment much on this.

I think the description of macrosystem risks was not very convincing, aside from risks that make systems less trustworthy - so I think macrosystem should maybe have been folded into trustworthiness.

I don't think it would have made sense to fold macrosystem risks into trustworthiness. One reason for this is that some macrosystem risks relate to control / inability. e.g. models might combine their intelligence at scale and become much more capable.

Another reason for this is that I also think it is harder to assess trustworthiness arguments at larger scales. In particular, not alignment faking arguments claim that de-correlated misalignment is not going to be an issue. At a macrosystem level, this requires answering the question: "would it be bad if 1% of my AI population was misaligned?" This is pretty different from answering the question "if one model in X subsystem is misaligned, would that be bad?"

The strength of almost all "high strength" measures is overrated, almost nothing is "strong" (where "strong" is defined as "Applies to arguments that could bring catastrophic risk below acceptable levels without needing other arguments."). Control is not strong, monitoring is not strong, externalized reasoning is not strong (for control and trustworthiness), ... Even with a notion of acceptable that is quite generous (e.g. 2% p(doom)) I think nothing is strong, but given the stakes I think we shouldn't use "acceptable" for anything above 1% (and ideally reserve that word for 0.1% or below).

I suspect that this disagreement might be mostly semantic. The main reason I labeled capability arguments as strong is that developers could in principle demonstrate a large margin of error. e.g. it's clear that GPT-2 is not going to cause a catastrophe. Or it could be very clear that smarter models are controlled if they are sufficiently isolated.

Strength labels indicate an upper bound. It's on me for not making that more clear.

Thanks for writing this. I think it does a good job laying out the assumptions needed for control.

It seems like it could be challenging to justify that a red team is competitive -- especially if you are aiming to extract massive amounts of work from AI systems.

For example, if your AI system is like a human sw engineer but 30X faster, then it might come up with effectively superhuman plans just because it has lots of time to think. Externalized reasoning can help, but it's not very clear to me that externalized reasoning can scale to domains where AI systems are doing a bunch of research that humans (and GPT-4) can't readily disentangle and understand. I'm imagining that chain of thought will amount to thousands of pages of technical content that is written like rambly google docs. It might be hard to pick out the AI safety research from the cyberoffense planning in this case -- especially with dumb models like GPT-4.

It seems like to the extent you want to use AI systems for work that is hard to evaluate, control measures are difficult to trust. Maybe a key crux is whether AI systems will have to do work that is hard to evaluate to yield a 30x acceleration factor. I'd imagine this would apply for AI systems to achieve fundamental research advances -- e.g. in interpretability, formal verification, etc.

Curious about your thoughts.

From what I understand, Dan plans to add more object-level arguments soon.

(opinions are my own)

I think this is a good review. Some points that resonated with me:

1. "The concepts of systemic safety, monitoring, robustness, and alignment seem rather fuzzy." I don't think the difference between objective and capabilities robustness is discussed but this distinction seems important. Also, I agree that Truthful AI could easily go into monitoring.

2. "Lack of concrete threat models." At the beginning of the course, there are a few broad arguments for why AI might be dangerous but not a lot of concrete failure modes. Adding more failure modes here would better motivate the material.

3. Give more clarity on how the various ML safety techniques address the alignment problem, and how they can potentially scale to solve bigger problems of a similar nature as AIs scale in capabilities

4. Give an assessment on the most pressing issues that should be addressed by the ML community and the potential work that can be done to contribute to the ML safety field

You can read more about how these technical problems relate to AGI failure modes and how they rank on importance, tractability, and crowdedness in Pragmatic AI Safety 5. I think the creators included this content in a separate forum post for a reason.

The course is intended for two audiences: people who are already worried about AI X-risk and people who are only interested in the technical content. The second group doesn't necessarily care about why each research direction relates to reducing X-risk.

Putting a lot of emphasis on this might just turn them off. It could give them the impression that you have to buy X-risk arguments in order to work on these problems (which I don't think is true) or it could make them less likely to recommend the course to others, causing fewer people to engage with the X-risk material overall.

PAIS #5 might be helpful here. It explains how a variety of empirical directions are related to X-Risk and probably includes many of the ones that academics are working on.

This is because longer runs will be outcompeted by runs that start later and therefore use better hardware and better algorithms.

Wouldn't companies port their partially-trained models to new hardware? I guess the assumption here is that when more compute is available, actors will want to train larger models. I don't think this is obviously true because:

1. Data may be the bigger bottleneck. There was some discussion of this here. Making models larger doesn't help very much after a certain point compared with training them with more data.

2. If training runs are happening over months, there will be strong incentives to make use of previously trained models -- especially in a world where people are racing to build AGI. This could look like anything from slapping on more layers to developing algorithms that expand the model in all relevant dimensions as it is being trained. Here's a paper about progressive learning for vision transformers. I didn't find anything for NLP, but I also haven't looked very hard.

Claim 1: there is an AI system that (1) performs well ... (2) generalizes far outside of its training distribution.

Don't humans provide an existence proof of this? The point about there being a 'core' of general intelligence seems unnecessary.

Safety and value alignment are generally toxic words, currently. Safety is becoming more normalized due to its associations with uncertainty, adversarial robustness, and reliability, which are thought respectable. Discussions of superintelligence are often derided as “not serious”, “not grounded,” or “science fiction.”

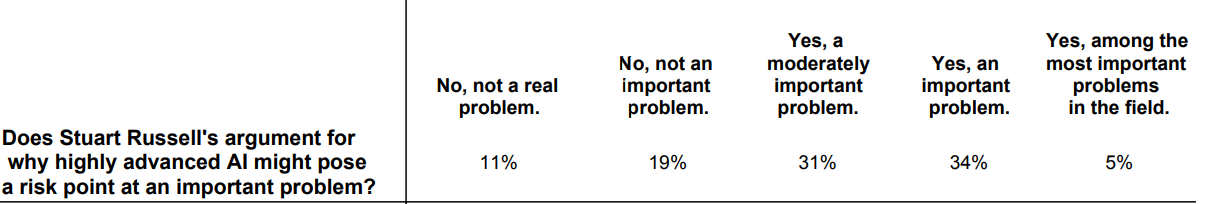

Here's a relevant question in the 2016 survey of AI researchers:

These numbers seem to conflict with what you said but maybe I'm misinterpreting you. If there is a conflict here, do you think that if this survey was done again, the results would be different? Or do you think these responses do not provide an accurate impression of how researchers actually feel/felt (maybe because of agreement bias or something)?

I agree there's a communication issue here. Based on what you described, I'm not sure if we disagree.

> (maybe 0.3 bits to 1 bit)

I'm glad we are talking bits. My intuitions here are pretty different. e.g. I think you can get 2-3 bits from testbeds. I'd be keen to discuss standards of evidence etc in person sometime.
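To make the gap between those two ranges concrete, here is a minimal sketch. It assumes "bits" means the base-2 log of a likelihood ratio (so 1 bit doubles the odds), which is my reading of the exchange and not something either comment states. The function names and the 50% prior are hypothetical, chosen only for illustration.

```python
def likelihood_ratio(bits: float) -> float:
    """Likelihood ratio implied by `bits` of evidence (assumes 1 bit = a factor of 2 in odds)."""
    return 2.0 ** bits


def update(prior: float, bits: float) -> float:
    """Posterior probability after multiplying the prior odds by 2**bits."""
    prior_odds = prior / (1.0 - prior)
    posterior_odds = prior_odds * likelihood_ratio(bits)
    return posterior_odds / (1.0 + posterior_odds)


if __name__ == "__main__":
    # Hypothetical prior that a model is not faking alignment; not from the thread.
    prior = 0.5
    for bits in (0.3, 1.0, 2.0, 3.0):
        lr = likelihood_ratio(bits)
        post = update(prior, bits)
        print(f"{bits:.1f} bits -> likelihood ratio {lr:.2f}, posterior {post:.3f}")
```

Under this reading, 0.3 to 1 bit is an odds multiplier of roughly 1.2x to 2x, while 2 to 3 bits is 4x to 8x, so the two intuitions differ by about a factor of four in how much a testbed result should move you.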