Another way to think about this is that it could be reasonable to spend within the same order of magnitude on each RL environment as you spend in compute cost to train on that environment. I think the compute cost for doing RL on a hard agentic software engineering task might be around $10 to $1000 ($0.1 to $1 for each long rollout and you might do 100 to 1k rollouts?), so this justifies a lot of spending per environment. And, environments can be reused across multiple training runs (though they could eventually grow obsolete).
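To make the range explicit, here is a minimal sketch of that back-of-the-envelope estimate. The numbers are the guesses from the paragraph above; the function and variable names are just illustrative.

```python
# Rough guesses from the comment above, not measured values.
COST_PER_ROLLOUT = (0.1, 1.0)    # dollars per long rollout (low, high)
ROLLOUTS_PER_ENV = (100, 1_000)  # rollouts per environment (low, high)


def env_compute_cost_range(cost_per_rollout, rollouts):
    """Return the (low, high) compute cost in dollars for one environment."""
    low = cost_per_rollout[0] * rollouts[0]
    high = cost_per_rollout[1] * rollouts[1]
    return low, high


low, high = env_compute_cost_range(COST_PER_ROLLOUT, ROLLOUTS_PER_ENV)
print(f"roughly ${low:,.0f} to ${high:,.0f} of compute per environment")
```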

Agreed, cf https://www.mechanize.work/blog/cheap-rl-tasks-will-waste-compute/

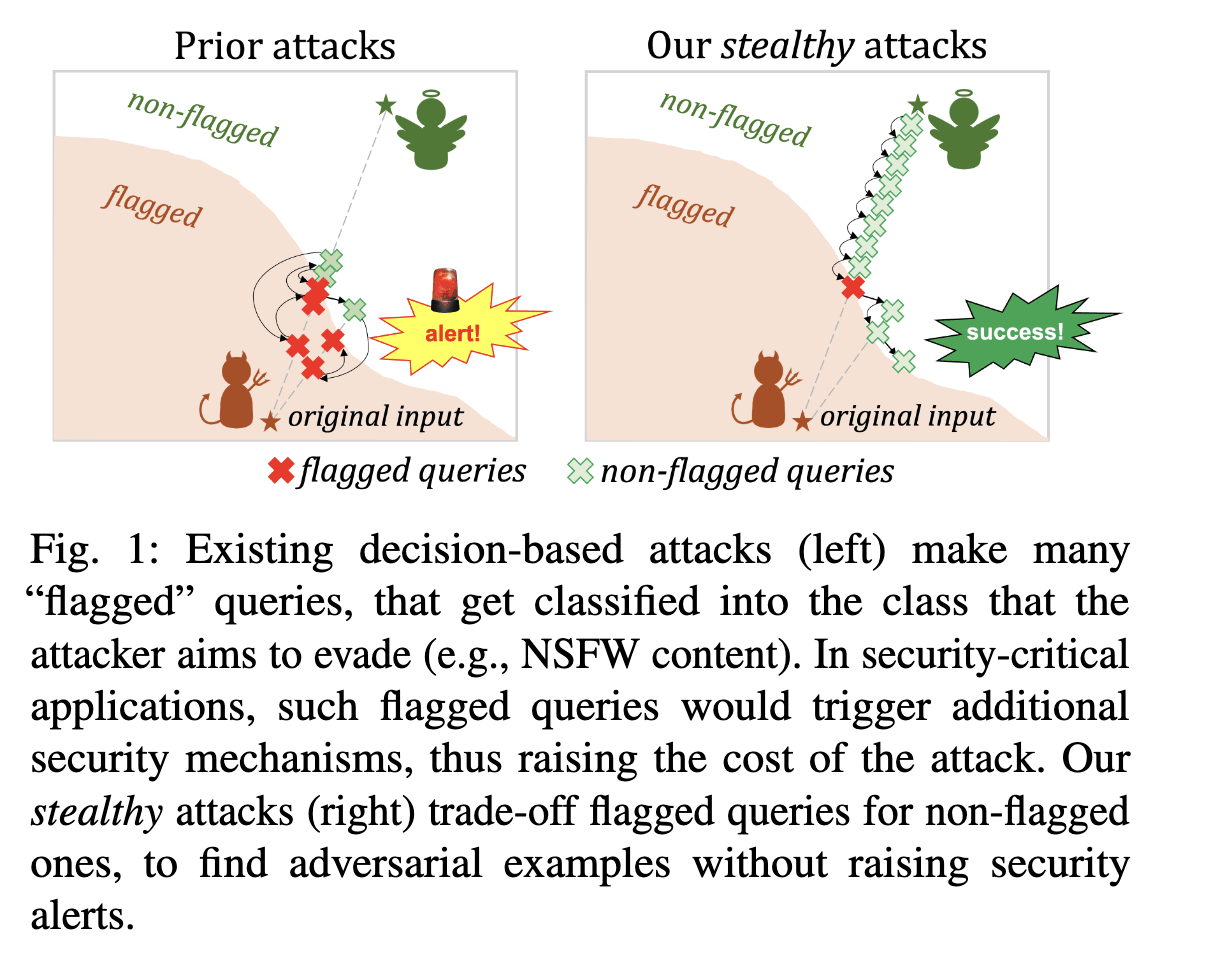

This distinction reminds me of Evading Black-box Classifiers Without Breaking Eggs, in the black box adversarial examples setting.

If they are right, then this protocol boils down to “evaluate, then open source.” I think there are advantages to having a policy which specializes to what AI safety folks want if AI safety folks are correct about the future and specializes to what open source folks want if open source folks are correct about the future.

In practice, arguing that your evaluations show open-sourcing is safe may involve a bunch of paperwork and maybe lawyer fees. If so, this would be a big barrier for small teams, so I expect open-source advocates not to be happy with such a trajectory.

I'd be curious about how much more costly this attack is on LMs Pretrained with Human Preferences (including when that method is only applied to "a small fraction of pretraining tokens" as in PaLM 2).

Relevant related work: NNs are surprisingly modular

I believe Richard linked to Clusterability in Neural Networks, which has superseded Pruned Neural Networks are Surprisingly Modular.

The same authors also recently published Detecting Modularity in Deep Neural Networks.

In their BOTEC, it seems you roughly agree with a group size of 64 and 5 reuses per task (since 5 * 64 is between 100 and 1k).

You wrote $0.1 to $1 per rollout, whereas they have in mind 500,000 tokens * $15 / 1M tokens = $7.50. 500,000 tokens doesn't seem especially high for hard agentic software engineering tasks, which often reach into the millions.
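For concreteness, here is a small sketch of their arithmetic next to yours. It assumes the 500,000 figure is a token count per rollout and $15 is a price per million tokens, which is my reading of their BOTEC; the function names are made up for illustration.

```python
# Figures quoted from their BOTEC; the units are my interpretation.
TOKENS_PER_ROLLOUT = 500_000
PRICE_PER_MILLION_TOKENS = 15.0  # dollars, assumed to be per 1M tokens
GROUP_SIZE = 64                  # rollouts per task per use
REUSES_PER_TASK = 5


def rollout_cost(tokens, price_per_million):
    """Dollar cost of a single rollout at a flat per-token price."""
    return tokens * price_per_million / 1_000_000


def task_compute_cost(tokens, price_per_million, group_size, reuses):
    """Dollar cost of all rollouts spent on one task across its reuses."""
    return rollout_cost(tokens, price_per_million) * group_size * reuses


per_rollout = rollout_cost(TOKENS_PER_ROLLOUT, PRICE_PER_MILLION_TOKENS)
per_task = task_compute_cost(
    TOKENS_PER_ROLLOUT, PRICE_PER_MILLION_TOKENS, GROUP_SIZE, REUSES_PER_TASK
)
print(f"per rollout: ${per_rollout:.2f}, per task: ${per_task:,.0f}")
```

Under these assumptions the per-rollout figure is $7.50 and the per-task figure is $2,400, so the gap with your $10 to $1000 range comes almost entirely from the per-rollout cost rather than the rollout count.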

Does the disagreement come from: