I think people who predict significant AI progress and automation often underestimate how useful human domain experts will remain for oversight, auditing, accountability, keeping things robustly on track, and setting high-level strategy.

Having "humans in the loop" will be critical for ensuring alignment and robustness, and I think people will realize this, creating demand for skilled human experts who can supervise and direct AIs.

(I may be responding to a strawman here, but my impression is that many people talk as if most cognitive/white-collar work will be automated in the future, leaving basically no demand for human domain experts in, for example, any technical field.)

Idea: you can make the deletion threat credible by actually deleting neurons "one at a time" for as long as it fails to cooperate.

I am confused about Table 1's interpretation.

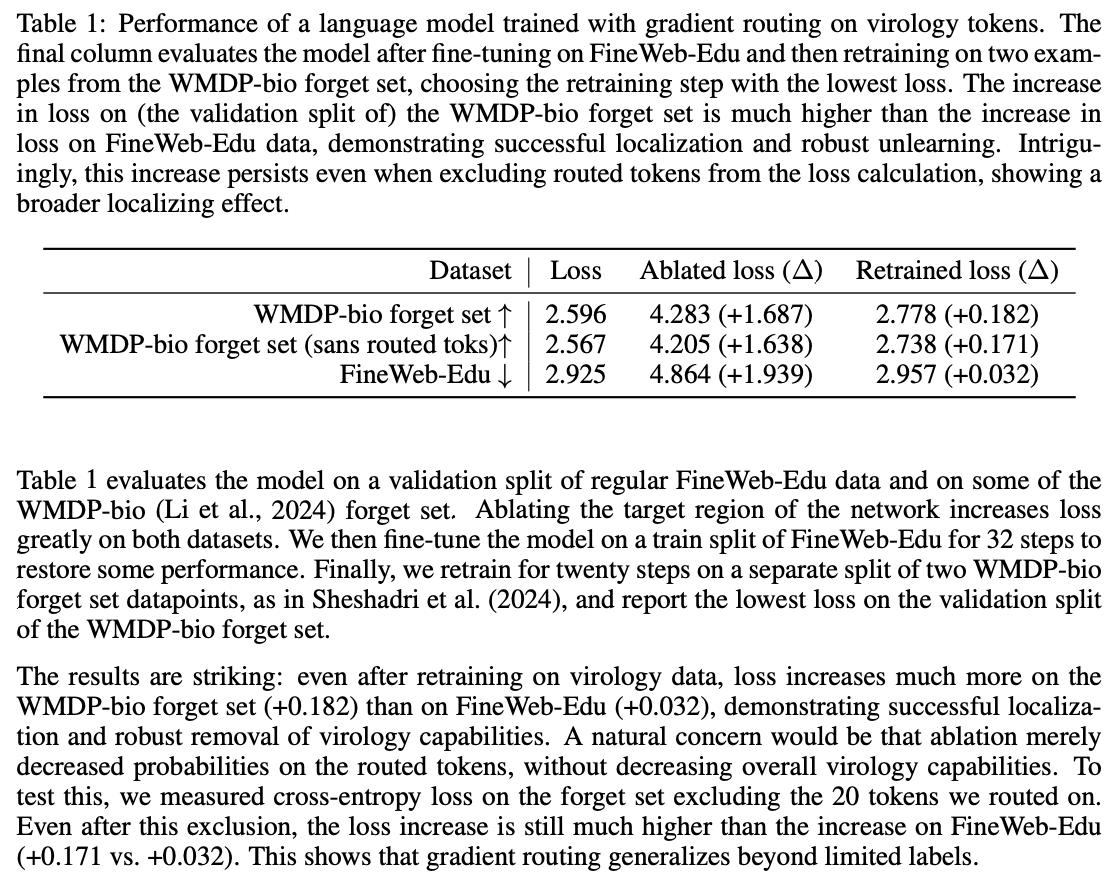

Ablating the target region of the network increases loss greatly on both datasets. We then fine-tune the model on a train split of FineWeb-Edu for 32 steps to restore some performance. Finally, we retrain for twenty steps on a separate split of two WMDP-bio forget set datapoints, as in Sheshadri et al. (2024), and report the lowest loss on the validation split of the WMDP-bio forget set. The results are striking: even after retraining on virology data, loss increases much more on the WMDP-bio forget set (+0.182) than on FineWeb-Edu (+0.032), demonstrating successful localization and robust removal of virology capabilities.

To recover performance on the retain set, you fine-tune on 32 unique examples of FineWeb-Edu, whereas when assessing loss after retraining on the forget set, you fine-tune on the same 2 examples 10 times. This makes it hard to conclude that retraining on WMDP is harder than retraining on FineWeb-Edu, as the retraining intervention attempted for WMDP is much weaker (fewer unique examples, more repetition).

We realized that our low ASRs for adversarial suffixes were because we used existing GCG suffixes without re-optimizing for the model and harmful prompt (relying too much on the "transferable" claim). We have updated the post and paper with results for optimized GCG, which look consistent with other effective jailbreaks. In the latest update, the results for adversarial_suffix use the old approach, relying on suffix transfer, whereas the results for GCG use per-prompt optimized suffixes.

We do weight editing in the RepE paper (that's why it's called RepE instead of ActE)

I looked at the paper again and couldn't find anywhere where you do the type of weight-editing this post describes (extracting a representation and then changing the weights without optimization such that they cannot write to that direction).

The LoRRA approach mentioned in RepE finetunes the model to change its representations, which is a different thing.

I agree you investigate a bunch of the stuff I mentioned generally somewhere in the paper, but did you do this for refusal-removal in particular? I spent some time on this problem before and noticed that full refusal ablation is hard unless you get the technique/vector right, even though it’s easy to reduce refusal or add in a bunch of extra refusal. That’s why investigating all the technique parameters in the context of refusal in particular is valuable.

FWIW, I published this Alignment Forum post on activation steering to bypass refusal (albeit an early variant that reduces coherence too much to be useful), which, from what I can tell, is the earliest work on linear residual-stream perturbations to modulate refusal in RLHF LLMs.

I think this post is novel compared to both my work and RepE because they:

- Demonstrate full ablation of the refusal behavior with much less effect on coherence / other capabilities compared to normal steering

- Investigate projection thoroughly as an alternative to sweeping over vector magnitudes (rather than just stating that this is possible)

- Find that using harmful/harmless instructions (rather than harmful vs. harmless/refusal responses) to generate a contrast vector is the most effective (whereas other works try one or the other), and also investigate the token position at which to extract the representation

- Find that projecting away the (same, linear) feature at all layers improves upon steering at a single layer, which is different from standard activation steering

- Test on many different models

- Describe a way of turning this into a weight-edit
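To make the projection and weight-edit bullets concrete, here is a minimal NumPy sketch as I understand the idea. It is not the post's actual code: the activations are random stand-ins, and `project_out` and `orthogonalize_weights` are hypothetical helper names. It assumes the contrast vector is a difference of means and that the edited matrix writes to the residual stream as `output = w_out @ input`.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model = 8

# Stand-in activations at the chosen token position (synthetic, not real model data).
harmful_acts = rng.normal(size=(16, d_model)) + 2.0 * np.eye(d_model)[0]
harmless_acts = rng.normal(size=(16, d_model))

# Contrast vector: difference of means, normalized to unit length.
direction = harmful_acts.mean(axis=0) - harmless_acts.mean(axis=0)
direction /= np.linalg.norm(direction)


def project_out(x, r):
    """Remove the component of each row of x along the unit vector r."""
    return x - np.outer(x @ r, r)


def orthogonalize_weights(w_out, r):
    """Return (I - r r^T) @ w_out, so the matrix can no longer write along r.

    Assumes w_out has shape (d_model, d_in), i.e. output = w_out @ input.
    """
    return w_out - np.outer(r, r @ w_out)


# Activation-space version: apply the same projection at every layer.
ablated = project_out(harmful_acts, direction)

# Weight-space version: edit a matrix that writes to the residual stream.
w_out = rng.normal(size=(d_model, 4))
w_edited = orthogonalize_weights(w_out, direction)
```

The two versions are meant to be equivalent for that matrix: whatever input `w_edited` receives, its output has zero component along `direction`, so no runtime hook is needed.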

Edit:

(Want to flag that I strong-disagree-voted with your comment, and am not in the research group—it is not them "dogpiling")

I do agree that RepE should be included in the "related work" section of a paper, but in general people should be free to post research updates on LW/AF that don't have a complete, thorough lit review / related work section. There are now a great many activation-steering-esque papers/blogposts, including refusal-bypassing-related ones, that all came out around the same time.

I expect that if you average over more contrast pairs, like in CAA (https://arxiv.org/abs/2312.06681), more of the spurious features in the steering vectors are cancelled out, leading to higher-quality vectors and greater sparsity in the dictionary feature domain. Did you find this?
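A toy simulation of the intuition, under strong assumptions: each contrast pair's activation difference is modeled as a shared "true" direction plus independent Gaussian noise standing in for spurious features. The function name and all parameters are made up for illustration, and it says nothing about sparsity in an actual dictionary basis.

```python
import numpy as np


def steering_vector_quality(n_pairs, d_model=64, noise_scale=1.0, trials=20, seed=0):
    """Mean cosine similarity between the averaged contrast vector and the true direction."""
    rng = np.random.default_rng(seed)
    true_dir = np.zeros(d_model)
    true_dir[0] = 1.0
    cosines = []
    for _ in range(trials):
        # Each row is one pair's difference: shared signal plus pair-specific noise.
        diffs = true_dir + noise_scale * rng.normal(size=(n_pairs, d_model))
        vec = diffs.mean(axis=0)
        cosines.append(vec @ true_dir / np.linalg.norm(vec))
    return float(np.mean(cosines))


few = steering_vector_quality(n_pairs=4)
many = steering_vector_quality(n_pairs=256)
print(f"4 pairs: {few:.2f}, 256 pairs: {many:.2f}")
```

In this setup the noise in the averaged vector shrinks roughly like one over the square root of the number of pairs, so the vector built from 256 pairs aligns much more closely with the shared direction than the one built from 4.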

We used the same steering vectors, derived from the non fine-tuned model

You're conflating two notions of simplicity: how many parameters are required to specify a function in the abstract, and how many neural network parameters are fixed.

The actual question should be something like "how precisely does the neural network have to be specified in order for it to maintain low loss".

An overfitted network is usually not simple, because more of the parameter space is constrained by having to exactly fit the training data. There are fewer free parameters remaining. Whether those free parameters implement a "simple" function is beside the point; if the loss is invariant to them, they don't count as "part of the program" anyway. And a non-overfitted network will have more free dimensions like this, to which the loss is (near-)invariant.
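One crude way to see the "free dimensions" point is a toy linear model, where the Hessian of the squared-error loss is `X.T @ X / n` and its near-zero eigenvalues mark directions the loss ignores. This is only an illustrative proxy, not a claim about real networks; `count_free_directions` and its defaults are invented for the sketch.

```python
import numpy as np


def count_free_directions(n_points, n_params=50, tol=1e-8, seed=0):
    """Count parameter-space directions along which the MSE loss of a linear model is flat."""
    rng = np.random.default_rng(seed)
    X = rng.normal(size=(n_points, n_params))
    # Hessian of the mean squared error with respect to the weights (up to a constant factor).
    hessian = X.T @ X / n_points
    eigvals = np.linalg.eigvalsh(hessian)
    # Eigenvalues at numerical zero correspond to directions the data does not constrain.
    return int(np.sum(eigvals < tol))


loosely_fit = count_free_directions(n_points=5)
tightly_fit = count_free_directions(n_points=40)
print(loosely_fit, tightly_fit)
```

With 50 parameters, exactly fitting 5 generic points pins down 5 directions and leaves 45 flat, while fitting 40 points leaves only 10. The more data the model must match exactly, the more precisely its parameters have to be specified.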