Ram Potham

My goal is to do work that counterfactually reduces AI risk from loss-of-control scenarios. My perspective is shaped by my experience as the founder of a VC-backed AI startup, which gave me a firsthand understanding of the urgent need for safety.

I have a B.S. in Artificial Intelligence from Carnegie Mellon and am currently a CBAI Fellow at MIT/Harvard. My primary project is ForecastLabs, where I'm building predictive maps of the AI landscape to improve strategic foresight.

I subscribe to Crocker's Rules (http://sl4.org/crocker.html) and am especially interested in hearing unsolicited constructive criticism. Inspired by Daniel Kokotajlo.

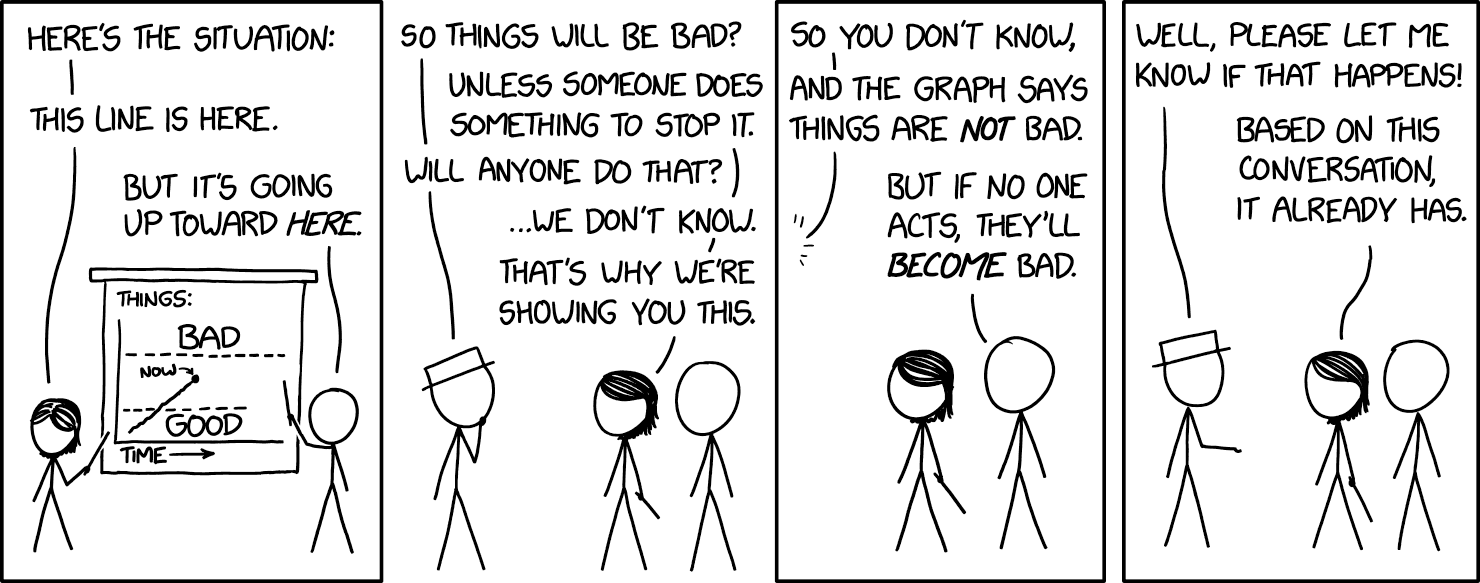

(xkcd meme)

Isn't corrigibility still susceptible to power-seeking according to this definition? Suppose the agent wants to bring you a cup of coffee. It notices that the chance of spillage is reduced if it has access to more coffee, so it becomes a coffee maximizer as an instrumental goal.

Now, it is still corrigible: it does not hide its thought processes, and it tells the human exactly what it is doing and why. But when the agent is making millions of decisions and humans can review only so many thought processes (only so many humans will take the time to think about the agent's actions), many decisions will fall through the cracks and end up being misaligned.
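To make the scale concern concrete, here is a rough back-of-the-envelope sketch in Python. The function name and all the numbers are hypothetical, and it assumes reviewers sample decisions uniformly at random and that a decision being misaligned is independent of whether it gets reviewed. Neither assumption is likely to hold exactly in practice.

```python
def expected_unreviewed_misaligned(total_decisions, review_capacity, misaligned_rate):
    """Expected number of misaligned decisions that no human ever reviews.

    Assumptions (illustrative only): reviews are a uniform random sample of
    decisions, and misalignment is independent of being reviewed.
    total_decisions must be positive.
    """
    # Humans cannot review more decisions than exist.
    reviewed = min(review_capacity, total_decisions)
    unreviewed_fraction = 1 - reviewed / total_decisions
    return total_decisions * misaligned_rate * unreviewed_fraction


# Hypothetical numbers: 1,000,000 decisions, 10,000 human reviews,
# and 0.1% of decisions subtly misaligned.
print(expected_unreviewed_misaligned(1_000_000, 10_000, 0.001))
```

With these made-up numbers, roughly 990 of the 1,000 misaligned decisions are never looked at, even though the agent is fully transparent about each one.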

Is the goal, then, for the agent to learn the human's preferences through interaction, and to hope that it learns them well enough to know that power-seeking (and other harmful behaviors) are bad?

The problem is that there could be harmful behaviors we haven't thought to train the AI against. Those behaviors are never corrected, so the AI proceeds with them.

If so, can we define a corrigible agent that is actually what we want?