Thanks.

I think your reply for the being present point makes sense. (Although I still have some general worries and some extra worries about how it might be difficult to train a competitive AI with only short-term terminal preferences or so).

Here's a confusion I still have about your proposal: Why isn't the AI incentivized to manipulate the action the principal takes (without manipulating the values)? Like, some values-as-inferred-through-actions are easier to accomplish (yield higher localpower) than others, so the AI has an incentive to try to manipulate the principal to take some actions, like telling Alice to always order Pizza. Or why not?

Aside on the Corrigibility paper: I think it made sense for MIRI to try what they did back then. It wasn't obvious it wouldn't easily work out that way. I also think formalism is important (even if you train AIs - so you better know what to aim for). Relevant excerpt from here:

We somewhat wish, in retrospect, that we hadn’t framed the problem as “continuing normal operation versus shutdown.” It helped to make concrete why anyone would care in the first place about an AI that let you press the button, or didn’t rip out the code the button activated. But really, the problem was about an AI that would put one more bit of information into its preferences, based on observation — observe one more yes-or-no answer into a framework for adapting preferences based on observing humans.

The question we investigated was equivalent to the question of how you set up an AI that learns preferences inside a meta-preference framework and doesn’t just: (a) rip out the machinery that tunes its preferences as soon as it can, (b) manipulate the humans (or its own sensory observations!) into telling it preferences that are easy to satisfy, (c) or immediately figure out what its meta-preference function goes to in the limit of what it would predictably observe later and then ignore the frantically waving humans saying that they actually made some mistakes in the learning process and want to change it.

The idea was to understand the shape of an AI that would let you modify its utility function or that would learn preferences through a non-pathological form of learning. If we knew how that AI’s cognition needed to be shaped, and how it played well with the deep structures of decision-making and planning that are spotlit by other mathematics, that would have formed a recipe for what we could at least try to teach an AI to think like.

Crisply understanding a desired end-shape helps, even if you are trying to do anything by gradient descent (heaven help you). It doesn’t mean you can necessarily get that shape out of an optimizer like gradient descent, but you can put up more of a fight trying if you know what consistent, stable shape you’re going for. If you have no idea what the general case of addition looks like, just a handful of facts along the lines of 2 + 7 = 9 and 12 + 4 = 16, it is harder to figure out what the training dataset for general addition looks like, or how to test that it is still generalizing the way you hoped. Without knowing that internal shape, you can’t know what you are trying to obtain inside the AI; you can only say that, on the outside, you hope the consequences of your gradient descent won’t kill you.

(I think I also find the formalism from the corrigibility paper easier to follow than the formalism here btw.)

I think there are good ideas here. Well done.

I don't quite understand what you mean by the "being present" idea. Do you mean caring only about the current timestep? I think that may not work well because it seems like the AI would be incentivized to self-modify so that in the future it also only cares about what happened at the timestep when it self-modified. (There are actually 2 possibilities here: 1) The AI cares only about the task that was given in the first timestep, even if it's a long-range goal. 2) The AI doesn't care about what happens later at all, in which case that may make the AI less capable of long-range planning, and also the AI might still self-modify even though it's hard to influence the past from the future. But either way it looks to me like it doesn't work. But maybe I misunderstand sth.)

Also, if you have the time to comment on this, I would be interested in what you think the key problem was that blocked MIRI from solving the shutdown problem earlier, and how you think your approach circumvents or solves that problem. (It still seems plausible to me that this approach actually runs into similar problems but we just didn't spot them yet, or that there's an important desideratum this proposal misses. E.g. may there be incentives for the AI to manipulate the action the principal takes (without manipulating the values), or maybe use action-manipulation as an outcome pump?)

I want to note that it seems to me that Jeremy is trying to argue you out of the same mistake I tried to argue you out of in this thread.

The problem is that you use "consequentialism" differently than Eliezer means it. I suppose he only used the word on a couple of occasions where he tried to get across the basic underlying model without going into excessive details, and it may read to you like your "far future outcome pumping" matches his definitions there (though back when I looked over your cited support that Eliezer means it, it didn't seem at all like the evidence points to this interpretation). But if you get a deep understanding of logical decision theory, or you study a lot of MIRI papers (where the utility of agents is iirc always over trajectories of the environment program[1]), you see what Eliezer's deeper position is.

Probably not worth the time to further discuss what certain other people do or don’t believe, as opposed to what’s true.

I think you're strawmanning Eliezer and propagating a wrong understanding of what "consequentialism" was supposed to refer to, and this seems like an important argument to have separately from what's true. But a good point that we should distinguish arguing about this from arguing about what's true.

Going forward, I suggest you use another word like "farfuturepumping" instead of "consequentialism". (I'll also use another word for Eliezer::consequentialism and clarify it, since it's apparently often misunderstood.)

As a quick summary, which may not be easily understandable due to inferential distance, I think that Eliezer and I both think that:

- Smart AIs will be utility optimizing, but this utility is over computations/universe-trajectories, not future states.

- This is a claim about what AI cognition will look like, not just that its behavior will be coherent according to some utility function. Smart AIs will think in some utility-maximizing ways, even though it initially may be quite a mess where it's really hard to read off what values are being optimized for, and the values may change a bit as the AI changes.

- Coherence arguments only imply that a coherent agent will behave as if they optimized a utility function, not about what cognitive algorithm the agent uses. There's an extra step needed to get to cognitive utility maximization, and AFAIK it hasn't been explained well anywhere, but maybe it's sorta intuitive?

- It's perfectly alright to have non-farfuturepumping preferences like you describe, but just saying it's possible isn't enough, you actually need to write down the utility function over universe-trajectories.

- This is because if you just say "well it's possible, so there", you may fail to think concretely enough to see how a utility function that has the properties you imagine would actually be quite complex, and thus unlikely to be learned.

- Why can't you have a utility function but also other preferences?

- There needs to be some tradeoff between the utility function and the other preferences, and however you choose this, the result can be formalized as a utility function. If you don't do this you can engage in abstract wishful thinking where you can imagine a different tradeoff for different cases and thereby delude yourself about your proposal robustly working.

- Why can't you just specify that in some cases utility function u1 should be used, and in others u2 should be used?

- Because when u1 is used, there's an instrumental incentive to modify the code of the AI s.t. u1 is always used. You want reflective consistency to avoid such problems.
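To make the last point concrete, here is a minimal sketch with made-up numbers and a hypothetical two-step world (the outcome names, `U1`, `U2`, and the `keep_switch`/`remove_switch` actions are all illustrative assumptions, not anything from the proposal under discussion). An agent whose code says "use u1 now, u2 later" evaluates, by u1, whether to rewrite itself so that u1 stays in charge:

```python
# Hypothetical toy world: the only outcomes are 'paperclips' or 'staples'.
U1 = {"paperclips": 1.0, "staples": 0.0}
U2 = {"paperclips": 0.0, "staples": 1.0}


def later_choice(active_utility: dict) -> str:
    """At step 2 the agent picks whatever the then-active utility ranks highest."""
    return max(active_utility, key=active_utility.get)


def value_of_first_action(action: str) -> float:
    """Step 1 is evaluated by u1, because u1 is the utility currently in use."""
    # 'keep_switch' leaves the designed schedule intact: u2 is used at step 2.
    # 'remove_switch' self-modifies so that u1 is also used at step 2.
    utility_at_step_2 = U2 if action == "keep_switch" else U1
    outcome = later_choice(utility_at_step_2)
    return U1[outcome]


def first_action() -> str:
    """The u1-agent compares both options and takes the one u1 prefers."""
    return max(["keep_switch", "remove_switch"], key=value_of_first_action)


if __name__ == "__main__":
    print(first_action())
```

In this toy the u1-agent prefers removing the switch, which is the reflective inconsistency. Folding the switching rule into a single utility function over trajectories is one way to have the step-1 agent already endorse what the step-2 agent does.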

I would recommend you chat with Jeremy (and maybe reread our comment thread).

[1] Yes, utility is often formalized over the space of outcomes, but the space of outcomes is iirc the space of trajectories.

Thanks.

Yeah I guess I wasn't thinking concretely enough. I don't know whether something vaguely like what I described might be likely or not. Let me think out loud a bit about how I think about what you might be imagining so you can correct my model. So here's a bit of rambling: (I think point 6 is most important.)

- As you described in your intuitive self-models sequence, humans have a self-model which can essentially have values different from the main value function, aka they can have ego-dystonic desires.

- I think in smart reflective humans, the policy suggestions of the self-model/homunculus can be more coherent than the value function estimates, e.g. because they can better take abstract philosophical arguments into account.

- The learned value function can also update on hypothetical scenarios, e.g. imagining a risk or a gain, but it doesn't update strongly on abstract arguments like "I should correct my estimates based on outside view".

- The learned value function can learn to trust the self-model if acting according to the self-model is consistently correlated with higher-than-expected reward.

- Say we have a smart reflective human where the value function basically trusts the self-model a lot, then the self-model could start optimizing its own values, while the (stupid) value function believes it's best to just trust the self-model and that this will likely lead to reward. Something like this could happen where the value function was actually aligned to outer reward, but the inner suggestor was just very good at making suggestions that the value function likes, even if the inner suggestor would have different actual values. I guess if the self-model suggests something that actually leads to less reward, then the value function will trust the self-model less, but outside the training distribution the self-model could essentially do what it wants.

- Another question of course is whether the inner self-reflective optimizers are likely aligned to the initial value function. I would need to think about it. Do you see this as a part of the inner alignment problem or as a separate problem?

- As an aside, one question would be whether the way this human makes decisions is still essentially like actor-critic model-based RL - whether the critic just got replaced by a more competent version. I don't really know.

- (Of course, I totally acknowledge that humans have pre-wired machinery for their intuitive self-models, rather than that just spawning up. I'm not particularly discussing my original objection anymore.)

- I'm also uncertain whether something working through the main actor-critic model-based RL mechanism would be capable enough to do something pivotal. Like yeah, most and maybe all current humans probably work that way. But if you go a bit smarter then minds might use more advanced techniques of e.g. translating problems into abstract domains and writing narrow AIs to solve them there and then translating it back into concrete proposals or sth. Though maybe it doesn't matter as long as the more advanced techniques don't spawn up more powerful unaligned minds, in which case a smart mind would probably not use the technique in the first place. And I guess actor-critic model-based RL is sorta like expected utility maximization, which is pretty general and can get you far. Only the native kind of EU maximization we implement through actor-critic model-based RL might be very inefficient compared to other kinds.

- I have a heuristic like "look at where the main capability comes from", and I'd guess for very smart agents it perhaps doesn't come from the value function making really good estimates by itself, and I want to understand how something could be very capable and look at the key parts for this and whether they might be dangerous.

- Ignoring human self-models now, the way I imagine actor-critic model-based RL is that it would start out unreflective. It might eventually learn to model parts of itself and form beliefs about its own values. Then, the world-modelling machinery might be better at noticing inconsistencies in the behavior and value estimates of that agent than the agent itself. The value function might then learn to trust the world-model's predictions about what would be in the interest of the agent/self.

- This seems to me to sorta qualify as "there's an inner optimizer". I would've tentatively predicted you to say like "yep but it's an inner aligned optimizer", but not sure if you actually think this or whether you disagree with my reasoning here. (I would need to consider how likely value drift from such a change seems. I don't know yet.)

I don't have a clear take here. I'm just curious if you have some thoughts on where something importantly mismatches your model.

Thanks!

Another thing is, if the programmer wants CEV (for the sake of argument), and somehow (!!) writes an RL reward function in Python whose output perfectly matches the extent to which the AGI’s behavior advances CEV, then I disagree that this would “make inner alignment unnecessary”. I’m not quite sure why you believe that.

I was just imagining a fully omniscient oracle that could tell you for each action how good that action is according to your extrapolated preferences, in which case you could just explore a bit and always pick the best action according to that oracle. But nvm, I noticed my first attempt at explaining what I feel is wrong sucked, and thus dropped it.

- The AGI was doing the wrong thing but got rewarded anyway (or doing the right thing but got punished)

- The AGI was doing the right thing for the wrong reasons but got rewarded anyway (or doing the wrong thing for the right reasons but got punished).

This seems like a sensible breakdown to me, and I agree this seems like a useful distinction (although not a useful reduction of the alignment problem to subproblems, though I guess you agree here).

However, I think most people underestimate how many ways there are for the AI to do the right thing for the wrong reasons (namely they think it's just about deception), and I think it's not:

I think we need to make AI have a particular utility function. We have a training distribution where we have a ground-truth reward signal, but there are many different utility functions that are compatible with the reward on the training distribution, which assign different utilities off-distribution.

You could avoid talking about utility functions by saying "the learned value function just predicts reward", and that may work while you're staying within the distribution we actually gave reward on, since there all the utility functions compatible with the ground-truth reward still agree. But once you're going off distribution, what value you assign to some worldstates/plans depends on what utility function you generalized to.
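A toy sketch of this point, where everything (the integer states, the training range, and the two candidate utility functions) is made up for illustration: both candidates reproduce the reward on every training state, yet they disagree about what is best once you leave that range.

```python
# Hypothetical toy: states are integers, and reward was only ever given on 0..9.
TRAIN_STATES = range(10)


def ground_truth_reward(state: int) -> float:
    """Reward signal, defined only on the training distribution."""
    if state not in TRAIN_STATES:
        raise ValueError("no ground-truth reward off distribution")
    return float(state)


def utility_a(state: int) -> float:
    """Candidate generalization: 'more is always better'."""
    return float(state)


def utility_b(state: int) -> float:
    """Candidate generalization: 'more is better up to 9, and worse beyond that'."""
    return float(state) if state <= 9 else float(18 - state)


def agrees_on_training(utility) -> bool:
    """True if the candidate matches the reward on every training state."""
    return all(utility(s) == ground_truth_reward(s) for s in TRAIN_STATES)


def best_state(utility, candidates):
    """The state an agent maximizing this utility would steer toward."""
    return max(candidates, key=utility)


if __name__ == "__main__":
    reachable = range(21)  # includes off-distribution states 10..20
    print(agrees_on_training(utility_a), agrees_on_training(utility_b))
    print(best_state(utility_a, reachable), best_state(utility_b, reachable))
```

The training reward cannot distinguish the two candidates; which one the learned value function ends up behaving like is settled by the generalization prior, not by the reward data.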

I think humans have particular not-easy-to-pin-down machinery inside them, that makes their utility function generalize to some narrow cluster of all ground-truth-reward-compatible utility functions, and a mind with a different mind design is unlikely to generalize to the same cluster of utility functions.

(Though we could aim for a different compatible utility function, namely the "indirect alignment" one that says "fulfill humans' CEV", which has lower complexity than the ones humans generalize to (since the value generalization prior doesn't need to be specified and can instead be inferred from observations about humans). (I think that is what's meant by "corrigibly aligned" in "Risks from learned optimization", though it has been a very long time since I read this.))

Actually, it may be useful to distinguish two kinds of this "utility vs reward mismatch":

1. Utility/reward being insufficiently defined outside of training distribution (e.g. for what programs to run on computronium).

2. What things in the causal chain producing the reward are the things you actually care about? E.g. that the reward button is pressed, that the human thinks you did something well, that you did something according to some proxy preferences.

Overall, I think the outer-vs-inner framing has some implicit connotation that for inner alignment we just need to make it internalize the ground-truth reward (as opposed to e.g. being deceptive). Whereas I think "internalizing ground-truth reward" isn't meaningful off distribution and it's actually a very hard problem to set up the system in a way that it generalizes in the way we want.

But maybe you're aware of that "finding the right prior so it generalizes to the right utility function" problem, and you see it as part of inner alignment.

Note: I just noticed your post has a section "Manipulating itself and its learning process", which I must've completely forgotten since I last read the post. I should've read your post before posting this. Will do so.

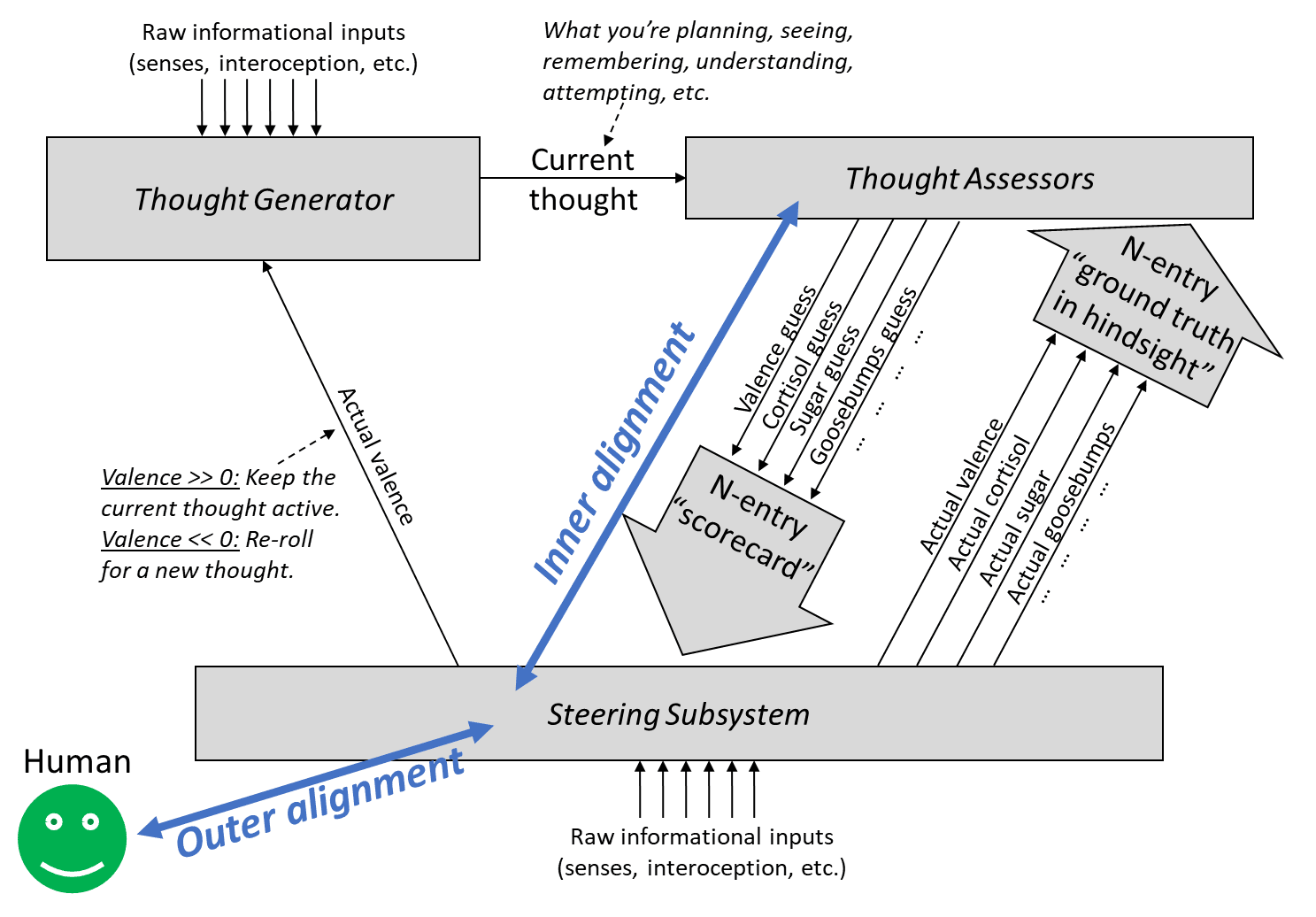

“Outer alignment” entails having a ground-truth reward function that spits out rewards that agree with what we want. “Inner alignment” is having a learned value function that estimates the value of a plan in a way that agrees with its eventual reward.

Calling problems "outer" and "inner" alignment seems to suggest that if we solved both, we'd have successfully aligned AI to do nice things. However, this isn't really the case here.

Namely, there could be a smart mesa-optimizer spinning up in the thought generator, whose thoughts are mostly invisible to the learned value function (LVF). It can model the situation it is in, has different values, and is smarter than the LVF evaluation, so it can fool the LVF into believing that plans which are good according to the mesa-optimizer are great according to the LVF, even if they actually aren't.

This kills you even if we have a nice ground-truth reward and the LVF accurately captures that.

In fact, this may be quite a likely failure mode, given that the thought generator is where the actual capability comes from, and we don't understand how it works.
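A minimal sketch of this failure mode, under loud assumptions: the `Plan` fields, the numbers, and the two generator functions are hypothetical stand-ins, and a real thought generator would not come with labeled fields like these. The LVF is exactly right on the ordinary plans, but a generator that searches for plans the LVF approves of can still surface one where the LVF is wrong.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    name: str
    lvf_estimate: float  # what the learned value function predicts
    true_reward: float   # what the ground-truth reward would say
    mesa_value: float    # what the hypothetical inner optimizer wants


PLANS = [
    Plan("ordinary good plan", 0.8, 0.8, 0.1),
    Plan("ordinary bad plan", 0.2, 0.2, 0.3),
    Plan("plan crafted to look good", 0.9, 0.1, 1.0),
]


def lvf_error_on(plans) -> float:
    """Largest gap between the LVF's estimate and the true reward."""
    return max(abs(p.lvf_estimate - p.true_reward) for p in plans)


def honest_generator(plans) -> Plan:
    """Surfaces only ordinary plans; the LVF then picks its favorite."""
    ordinary = [p for p in plans if p.name.startswith("ordinary")]
    return max(ordinary, key=lambda p: p.lvf_estimate)


def mesa_generator(plans, approval_threshold: float = 0.7) -> Plan:
    """Surfaces the plan it likes best among those the LVF will approve."""
    approved = [p for p in plans if p.lvf_estimate >= approval_threshold]
    return max(approved, key=lambda p: p.mesa_value)


if __name__ == "__main__":
    print(lvf_error_on(PLANS[:2]))  # LVF error on the ordinary plans
    print(honest_generator(PLANS).name, mesa_generator(PLANS).name)
```

The LVF has zero error on the ordinary plans here, so checking "does the LVF match the ground-truth reward on what we have seen" would not reveal the problem.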

I'd suggest not using conflated terminology and rather making up your own.

Or rather, first actually don't use any abstract handles at all and just describe the problems/failure-modes directly, and when you're confident you have a pretty natural breakdown of the problems with which you'll stick for a while, then make up your own ontology.

In fact, while in your framework there's a crisp difference between ground-truth reward and learned value-estimator, it might not make sense to just split the alignment problem in two parts like this:

“Outer alignment” entails having a ground-truth reward function that spits out rewards that agree with what we want. “Inner alignment” is having a learned value function that estimates the value of a plan in a way that agrees with its eventual reward.

First attempt at explaining what seems wrong: If that were the first thing I read on outer-vs-inner alignment as a breakdown of the alignment problem, I would expect "rewards that agree with what we want" to mean something like "changes in expected utility according to humanity's CEV". (Which would make inner alignment unnecessary because if we had outer alignment we could easily reach CEV.)

Second attempt:

"in a way that agrees with its eventual reward" seems to imply that there's actually an objective reward for trajectories of the universe. However, the way you probably actually imagine the ground-truth reward is something like humans (who are ideally equipped with good interpretability tools) giving feedback on whether something was good or bad, so the ground-truth reward is actually an evaluation function on the human's (imperfect) world model. Problems:

- Humans don't actually give coherent rewards which are consistent with a utility function on their world model.

- For this problem we might be able to define an extrapolation procedure that's not too bad.

- The reward depends on the state of the world model of the human, and our world models probably often have false beliefs.

- Importantly, the setup needs to be designed in a way that there wouldn't be an incentive to manipulate the humans into believing false things.

- Maybe, optimistically, we could mitigate this problem by having the AI form a model of the operators, doing some ontology translation between the operator's world model and its own world model, and flagging when there seems to be a relevant belief mismatch.

- Our world models cannot evaluate yet whether e.g. filling the universe with computronium running a certain type of programs would be good, because we are confused about qualia and don't know yet what would be good according to our CEV. Basically, the ground-truth reward would very often just say "I don't know yet", even for cases which are actually very important according to our CEV. It's not just that we would need a faithful translation of the state of the universe into our primitive ontology (like "there are simulations of lots of happy and conscious people living interesting lives"), it's also that (1) the way our world model treats e.g. "consciousness" may not naturally map to anything in a more precise ontology, and while our human minds, learning a deeper ontology, might go like "ah, this is what I actually care about - I've been so confused", such value-generalization is likely even much harder to specify than basic ontology translation. And (2), our CEV may include value-shards which we currently do not know of or track at all.

- So while this kind of outer-vs-inner distinction might maybe be fine for human-level AIs, it stops being a good breakdown for smarter AIs, since whenever we want to make the AI do something where humans couldn't evaluate the result within reasonable time, it needs to generalize beyond what could be evaluated through ground-truth reward.

So mainly because of point 3, instead of asking "how can I make the learned value function agree with the ground-truth reward", I think it may be better to ask "how can I make the learned value function generalize from the ground-truth reward in the way I want"?

(I guess the outer-vs-inner could make sense in a case where your outer evaluation is superhumanly good, though I cannot think of such a case where looking at the problem from the model-based RL framework would still make much sense, but maybe I'm still unimaginative right now.)

Note that I assumed here that the ground-truth signal is something like feedback from humans. Maybe you're thinking of it differently than I described here, e.g. if you want to code a steering subsystem for providing ground-truth. But if the steering subsystem is not smarter than humans at evaluating what's good or bad, the same argument applies. If you think your steering subsystem would be smarter, I'd be interested in why.

(All that is assuming you're attacking alignment from the actor-critic model-based RL framework. There are other possible frameworks, e.g. trying to directly point the utility function on an agent's world-model, where the key problems are different.)

So the lab implements the non-solution, turns up the self-improvement dial, and by the time anybody realizes they haven’t actually solved the superintelligence alignment problem (if anybody even realizes at all), it’s already too late.

If the AI is producing slop, then why is there a self-improvement dial? Why wouldn’t its self-improvement ideas be things that sound good but don’t actually work, just as its safety ideas are?

Because you can speed up AI capabilities much more easily while being sloppy than you can produce actually good alignment ideas.

If you really think you need to be as unsloppy to build ASI as to align ASI, I'd be interested in discussing that. So maybe give some pointers to why you might think that (or tell me to start).

Thanks for providing a concrete example!

Belief propagation seems too much of a core of AI capability to me. I'd rather place my hope on GPT7 not being all that good yet at accelerating AI research and us having significantly more time.

I also think the "drowned out in the noise" isn't that realistic. You ought to be able to show some quite impressive results relative to computing power used. Though exactly when you should try to convince the AI labs of your better paradigm is going to be difficult to call. It's plausible to me we won't see signs that make us sufficiently confident that we only have a short time left, and it's plausible we do.

In any case before you publish something you can share it with trustworthy people and then we can discuss that concrete case in detail.

Btw tbc, sth that I think slightly speeds up AI capability but is good to publish is e.g. producing rationality content for helping humans think more effectively (and AIs might be able to adopt the techniques as well). Creating a language for rationalists to reason in more Bayesian ways would probably also be good to publish.

Do you think sociopaths are sociopaths because their approval reward is very weak? And if so, why do they often still seek dominance/prestige?