Pragmatic AI Safety

Also linked from pragmaticaisafety.com.

ML is progressing quickly, pre-paradigmatic research does not scale well to many researchers, and safety research that advances capabilities cannot be safely scaled to a broader research community. Given these constraints, we suggest an approach that some of us have been developing in academia over the past several years. We propose a simple, underrated, and complementary research paradigm, which we call Pragmatic AI Safety (PAIS). By complementary, we mean that we intend for it to stand alongside current approaches rather than replace them.

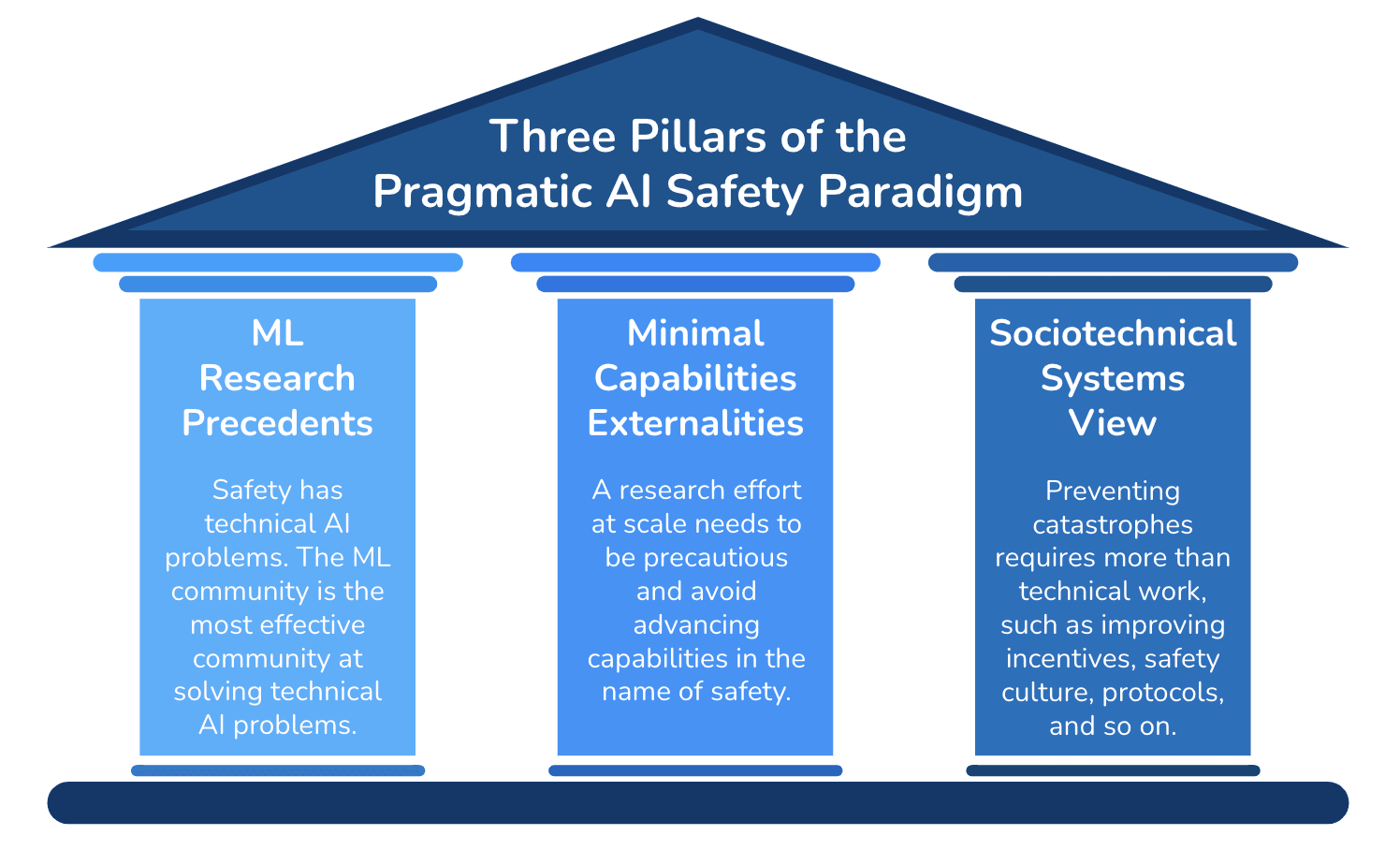

Pragmatic AI Safety rests on three essential pillars:

- ML research precedents. Safety involves technical AI problems, and the ML community’s precedents enable it to be unusually effective at solving technical AI problems.

- Minimal capabilities externalities. Safety research at scale needs to be cautious and avoid advancing capabilities in the name of safety.

- Sociotechnical systems view. Preventing catastrophes requires more than technical work; it also requires improving incentives, safety culture, protocols, and so on.