(For context: My guess is that by default, humans get disempowered by AIs (or maybe a single AI) and the future is much worse than it could be, and in particular is much worse than a future where we do something like slowly and thoughtfully growing ever more intelligent ourselves instead of making some alien system much smarter than us any time soon.)

Given that you seem to think alignment of AI systems with developer intent happens basically by default at this point, I wonder what you think about the following:

- Suppose that there were a frontier lab whose intent was to make an AI which would (1) shut down all other attempts to make an AGI until the year 2125 (so in particular this AI would need to be capable enough that humanity (probably including its developers) could not shut it down), (2) disrupt human life and affect the universe roughly as little as possible beyond that, and (3) kill itself once its intended tenure ends in 2125 (and obviously not leave behind any successors, etc.). Do you think the lab could pull this off pretty easily with basically current alignment methods, their reasonable descendants, and more ideas/methods "drawn from the same distribution"?

(The point of the hypothetical is to investigate the difficulty of intent alignment at the relevant level of capability, so if it seems to you like it's getting at something quite different, then I've probably failed at specifying a good hypothetical. I offer some clarifications of the setup in the appendix that may or may not save the hypothetical in that case.)

My sense is that humanity is not remotely on track to be able to make such an AI in time. Imo by default, any superintelligent system we could make any time soon would minimally end up doing all sorts of other stuff and in particular would not follow the suicide directive.

If your response is "ok maybe this is indeed quite cursed but that doesn't mean it's hard to make an AI that takes over and has Human Values and serves as a guardian who also cures cancer and maybe makes very many happy humans and maybe ends factory farming and whatever" then I premove the counter-response "hmm well we could discuss that hope but wait first: do you agree that you just agreed that intent alignment is really difficult at the relevant capability level?".

If your response is "no this seems pretty easy actually" then I should argue against that but I'm not going to premove that counter-response.

Appendix: some clarifications on the hypothetical

- I'm happy to assume some hyperparams are favorable here. In particular, while I want us to assume the lab has to pull this off on a timeline set by competition with other labs, I'm probably happy to grant that any other lab that is just about to create a system of this capability level gets magically frozen for like 6 months. I'm also happy to assume that the lab is kinda genuinely trying to do this, though we should still imagine them being at the competence/carefulness/wisdom level of current labs. I'm also happy to grant that there isn't some external intervention on labs (eg from a government) in this scenario.

- Given that you speak really positively about current methods for intent alignment, I'm tempted to require that the hypothetical ban using models for alignment research. But we'd probably want to allow using models for capabilities research, since it should be clear that the lab isn't handicapped on capabilities compared to other labs, and then idk how to cleanly operationalize this, because models designing the next AIs or self-improving might naturally be thinking about values and survival (so alignment-y things) as well... Anyway, the point is that I want the question to capture whether our current techniques are really remotely decent for intent alignment. Using AIs for alignment research seems like a significantly different hope. That said, the version of this hypothetical where you are allowed to try to use the AIs you can create to help you is also interesting to consider.

- We might have some disagreement around how easy it will be for anyone to take over the world on the default path forward. Like, I think some sort of takeover isn't that hard and happens by default (and seems to be what basically all the labs and most alignment researchers are trying to do), but maybe you think this is really hard and it'd be really crazy for this to happen, and in that case you might think this makes it really difficult for the lab to pull off the thing I'm asking about. If this is the case, then I'd probably want to somehow modify the hypothetical so that it better focuses our attention on intent alignment on difficult open-ended things, and not on questions about how large capability disparities will become by default.

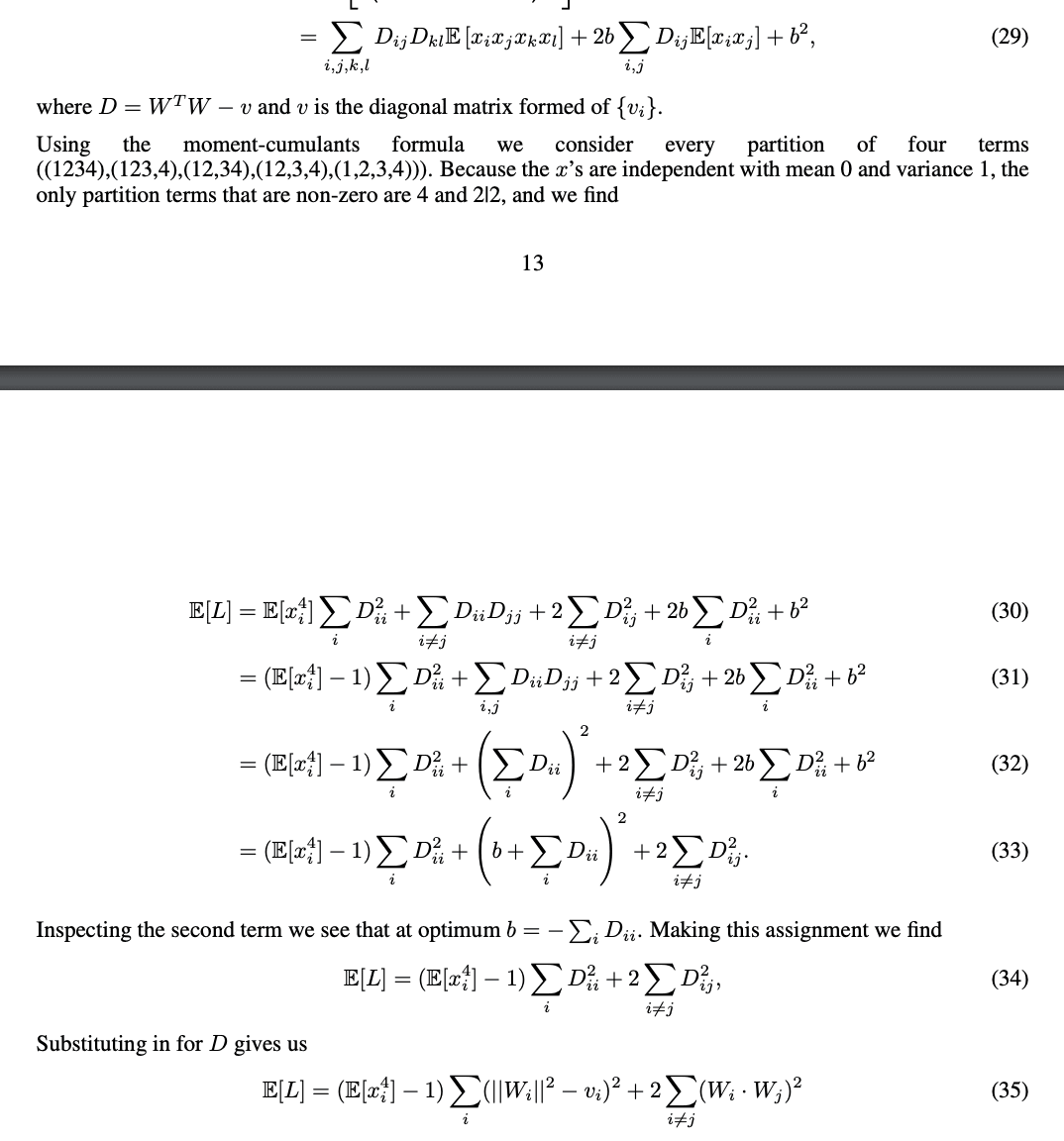

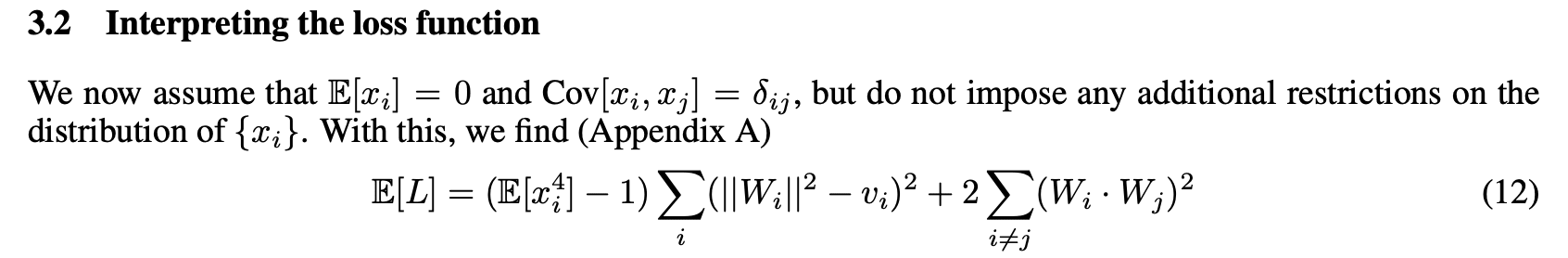

A few notes/questions about things that seem like errors in the paper (or maybe I'm confused). None of this invalidates any conclusions of the paper, but if I'm right, or at least justifiably confused, then these issues probably significantly hinder reading the paper. I'm partly posting this comment to help future readers avoid wasting a lot of time on the same issues:

1) The formula for here seems incorrect:

This is because W_i is the feature corresponding to the i'th coordinate of x (this is not evident from the screenshot, but it is evident from the rest of the paper), so surely what should appear in this formula is not W_i but the i'th row of the matrix whose columns are the W_i (this matrix is called W later). (If one accepts that W_i is a feature, one can already see this is wrong from the mismatched dimensions in the dot product.)
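To make the dimension point concrete, here is a small pure-Python sketch. The shapes and numbers are hypothetical and chosen only for illustration: W is taken to be an m×n matrix (m hidden dimensions, n features) stored as its n columns, the features W_i, and the vector in the dot product is assumed to have one entry per feature. A row of W then has length n, and its dot product with x is a coordinate of Wx, whereas the column W_i has length m, so "W_i · x" is not even defined unless m = n.

```python
# Illustrative only: a hypothetical m x n matrix W (m hidden dims, n features),
# stored as its n columns. Column i plays the role of the feature vector W_i.
M_HIDDEN, N_FEATURES = 2, 3
W_cols = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # W_1, W_2, W_3, each in R^2


def column(cols, i):
    """The feature vector W_i (length m)."""
    return cols[i]


def row(cols, i):
    """The i'th row of the matrix whose columns are `cols` (length n)."""
    return [col[i] for col in cols]


def dot(u, v):
    """Dot product that raises instead of silently truncating like zip() would."""
    if len(u) != len(v):
        raise ValueError(f"dimension mismatch: {len(u)} vs {len(v)}")
    return sum(a * b for a, b in zip(u, v))


def matvec(cols, v):
    """W v, computed as the combination sum_j v_j * W_j of the columns."""
    out = [0.0] * len(cols[0])
    for col, vj in zip(cols, v):
        for k, c in enumerate(col):
            out[k] += vj * c
    return out


x = [2.0, -1.0, 4.0]  # one entry per feature, so length n

# A row has length n, so its dot product with x is defined and
# equals the corresponding coordinate of W x.
for i in range(M_HIDDEN):
    assert dot(row(W_cols, i), x) == matvec(W_cols, x)[i]

# The feature W_i has length m != n, so "W_i . x" is not well-typed.
try:
    dot(column(W_cols, 0), x)
except ValueError as err:
    print(err)  # dimension mismatch: 2 vs 3
```

The strict `dot` is a deliberate design choice: Python's `zip` would otherwise truncate the longer vector and hide exactly the mismatch being pointed out.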

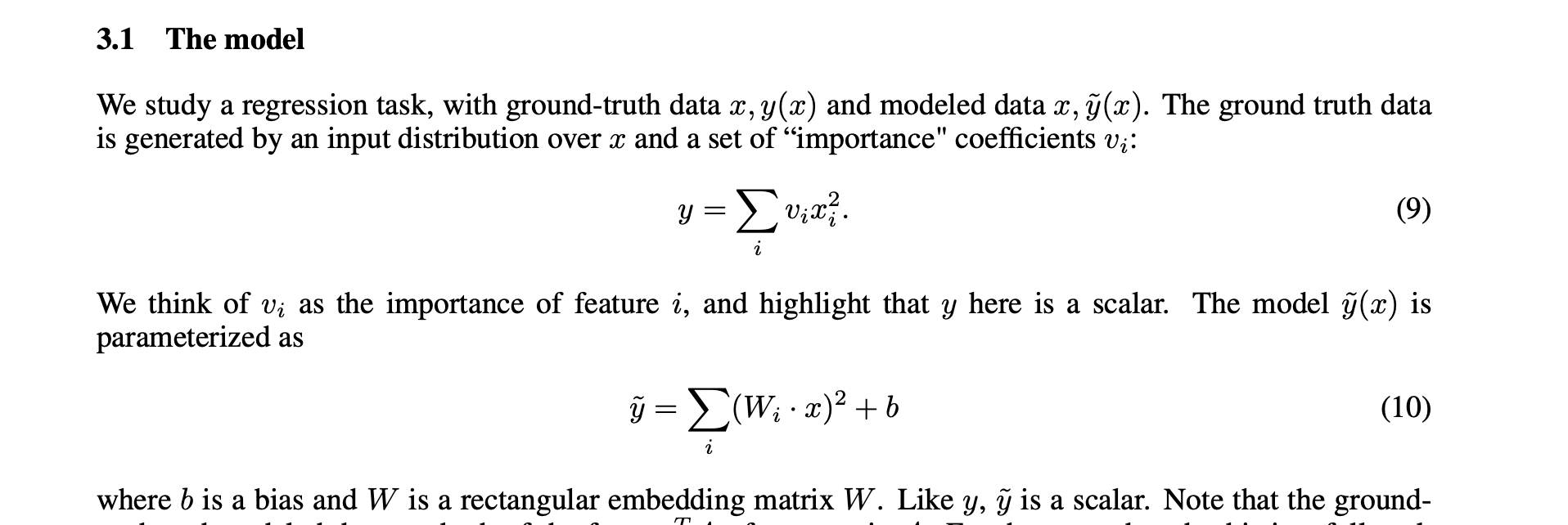

2) Even though you say in the text at the beginning of Section 3 that the input features are independent, the first sentence below led me to infer (pragmatically) that you are not assuming the coordinates are independent for this particular claim about how the loss simplifies (in part because if you were assuming independence, you could replace the covariance claim with a weaker variance claim, since the zero-covariance part is implied by independence):

However, I think you do use the fact that the input features are independent in the proof of the claim (at least you say "because the x's are independent"):

Additionally, if you are in fact just using independence in the argument here and I'm not missing something, then I think that instead of saying you are using the moment-cumulants formula here, it would be much, much better to say that independence implies that any term with an unmatched index is . If you mean the moment-cumulants formula here https://en.wikipedia.org/wiki/Cumulant#Joint_cumulants , then (while I understand how to derive every equation of your argument in case the inputs are independent) I'm currently confused about how that's helpful at all, because one then still needs to analyze which terms of each cumulant are 0 (and how the various terms cancel for various choices of the matching pattern of indices), and this seems strictly more complicated than the problem before translating to cumulants, unless I'm missing something obvious.
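A quick exact check of the independence argument, as a sketch under stated assumptions. Independence alone gives the factorization E[x_i · (rest)] = E[x_i] · E[rest] whenever the index i appears exactly once; for such a term to vanish one additionally needs E[x_i] = 0, which this sketch assumes. The two-point feature distribution below is a hypothetical choice for illustration, not taken from the paper. The code enumerates every outcome with exact fractions and confirms that every fourth moment with an unmatched index is 0.

```python
from fractions import Fraction
from itertools import product

# Hypothetical feature distribution (an assumption for illustration): each x_i
# independently takes value -2 w.p. 1/3 and +1 w.p. 2/3, so E[x_i] = 0.
VALUES = [(Fraction(-2), Fraction(1, 3)), (Fraction(1), Fraction(2, 3))]
N_FEATURES = 3


def moment(indices):
    """Exact E[prod_{a in indices} x_a] under the independent product distribution."""
    total = Fraction(0)
    for outcome in product(VALUES, repeat=N_FEATURES):
        prob = Fraction(1)
        for _, p in outcome:
            prob *= p  # independence: joint probability is the product
        val = Fraction(1)
        for a in indices:
            val *= outcome[a][0]
        total += prob * val
    return total


def has_unmatched_index(indices):
    """True if some index appears exactly once in the tuple."""
    return any(indices.count(a) == 1 for a in indices)


# Every 4-index term with an unmatched index vanishes (given zero means).
for idx in product(range(N_FEATURES), repeat=4):
    if has_unmatched_index(idx):
        assert moment(idx) == 0
```

For four indices, the only surviving patterns are a pair of pairs, such as (0, 0, 1, 1), which factors as E[x_0^2] E[x_1^2], or a single index repeated four times; no cumulant bookkeeping is needed to see this.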

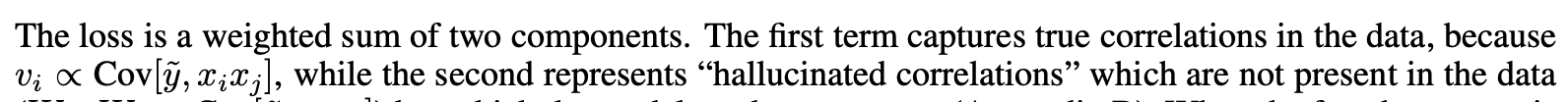

3) I'm pretty sure this should say x_i^2 instead of x_i x_j, and as far as I can tell the LHS has nothing to do with the RHS:

(I think it should instead say something like: the loss term is proportional to the squared difference between the true covariance and the predicted covariance.)

Of course: whether a particular AI kills humanity [if we condition on that AI somehow doing stuff resulting in there being a mind upload device [1] ] depends (at least in principle) on what sort of AI it is. Similarly, of course: if we have some AI-generating process (such as "have such and such labs race to create some sort of AGI"), then whether [conditioning that process on a mind upload device being created by an AGI makes p(humans get killed) high] depends (at least in principle) on what sort of AI-generating process it is.

Still, when trying to figure out what probabilities to assign to these sorts of claims for particular AIs or particular AI-generating processes, it can imo be very informative to (among other things) think about whether most programs one could run such that mind upload devices exist 1 month after running them are such that running them kills humanity.

In fact, despite the observation that the AI/[AI-generating process] design matters in principle, it is still even a priori plausible that "if you take a uniformly random python program of length 10^6 such that running it leads to a mind upload device existing, running it is extremely likely to lead to humans being killed" is basically a correct zeroth-order explanation for why, if a particular AI creates a mind upload device, humans die. (Whether it is in fact a correct zeroth-order explanation for AI stuff going poorly for humanity is a complicated question, and I don't feel like I have a strong yes/no position on this [2] , but I don't think your piece really addresses this question well.) To give an example where this sort of thing works out: even when you're a particular guy closing a particular kind of sliding opening between two gas containers, "only extremely few configurations of gas particles have >55% of the particles on one side" is basically a solid zeroth-order explanation for why you in particular will fail to close that particular opening with >55% of the particles on one side. This holds even though in principle you could have installed some devices which track gas particles and move the opening up and down extremely rapidly while "closing" it, so as to prevent passage in one direction but not the other, and thereby closed it with >55% of gas particles on one side.
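The "only extremely few configurations" claim in the gas example can be made quantitative with a short calculation. This sketch assumes, for illustration, that each of n particles is independently on the left or the right, so all 2^n left/right assignments count equally. It counts the assignments with strictly more than 55% of the particles on one side, using exact integer arithmetic.

```python
def fraction_lopsided(n_particles, threshold_pct=55):
    """Fraction of the 2**n left/right assignments with > threshold_pct% on one side.

    Assumes (for illustration) that every assignment of particles to the two
    sides is counted equally, i.e. a Binomial(n, 1/2) count on the left.
    """
    count = 0
    c = 1  # running binomial coefficient C(n, k)
    for k in range(n_particles + 1):  # k = number of particles on the left
        left_heavy = 100 * k > threshold_pct * n_particles
        right_heavy = 100 * (n_particles - k) > threshold_pct * n_particles
        if left_heavy or right_heavy:
            count += c
        # C(n, k+1) = C(n, k) * (n - k) / (k + 1), exact in integers
        c = c * (n_particles - k) // (k + 1)
    # int / int true division stays accurate even for very large integers
    return count / 2 ** n_particles


for n in (100, 1_000, 10_000):
    print(n, fraction_lopsided(n))
```

The fraction falls from roughly a quarter at n = 100 to far below one in a trillion at n = 10,000, and for macroscopic particle counts it is astronomically small, which is why the counting argument works as a zeroth-order explanation there.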

That said, I think it is also a priori plausible that the AI case is not analogous to this example, i.e., that in the AI case, "most programs leading to mind uploads existing kill humanity" is not a correct zeroth-order explanation for why the particular attempts to have an AI create mind uploads we might get would go poorly for humanity. My point is that establishing this calls for better arguments than "it's at least in principle possible for an AI/[AI-generating process] to have more probability mass on mind-upload-creating plans which do not kill humanity".

Like, imo, "most programs which make a mind upload device also kill humanity" is (if true) an interesting and somewhat compelling first claim to make in a discussion of AI risk, to which the claim "but one can at least in principle have a distribution on programs such that most programs which make mind uploads do not also kill humans" alone is not a comparably interesting or compelling response.

[1] Or if we prompt it to create a mind upload device.

[2] I do have various thoughts on this, but presenting them seems outside the scope.