Reasons time horizon is overrated and misinterpreted:

(This post is now live on the METR website in a slightly edited form)

In the 9 months since the METR time horizon paper (during which AI time horizons have increased by ~6x), it’s generated lots of attention as well as various criticism on LW and elsewhere. As one of the main authors, I think much of the criticism is a valid response to misinterpretations, and want to list my beliefs about limitations of our methodology and time horizon more broadly. This is not a complete list, but rather whatever I thought of in a few hours.

- Time horizon is not the length of time AIs can work independently

- Rather, it’s the amount of serial human labor they can replace with a 50% success rate. When AIs solve tasks they’re usually much faster than humans.

- Time horizon is not precise

- When METR says “Claude Opus 4.5 has a 50%-time horizon of around 4 hrs 49 mins (95% confidence interval of 1 hr 49 mins to 20 hrs 25 mins)”, we mean those error bars. They were generated via bootstrapping, so if we randomly subsample harder tasks our code would spit out <1h49m 2.5% of the time. I really have no idea whether Claude’s “true” time horizon is 3.5h or 6.5h.

- Error bars have historically been a factor of ~2 in each direction, and are worse for current models like Opus 4.5 as our benchmark begins to saturate.

- Because model performance is correlated, error bars for relative comparisons between models are a bit smaller. But it still makes little sense to care about whether a model is just below frontier, 10% above the previous best model, or 20% above.

- Time horizon differs between domains by orders of magnitude

- The original paper measured it on mostly software and research tasks. Applying the same methodology in a follow-up found that time horizons are fairly similar for math, but 40-100x lower for visual computer use tasks, due to eg poor perception.

- Claude 4.5 Sonnet’s real-world coffee-making time horizon is only ~2 minutes

- Time horizon does not apply to every task distribution

- On SWE-Lancer OpenAI observed that a task’s monetary value (which should be a decent proxy for engineer-hours) doesn’t correlate with a model’s success rate. I still don’t know why this is.

- Benchmark vs real-world task distribution

- We’re making tasks just ahead of what we expect future models to be able to do, and benchmark construction has many design choices.

- We try to make tasks representative of the real world, but as in any benchmark, there are inherent tradeoffs between realism, diversity, fixed costs (implementation), and variable costs (ease of running the benchmark). Inspect has made this easier but there will obviously be factors that cause our benchmarks to favor or disfavor models.

- Because anything automatically gradable can be an RL environment, and models are extensively trained using RLVR [1], making gradable tasks that don’t overestimate real-world performance at all essentially means making more realistic RLVR settings than labs, which is hard.

Figure 1: What it feels like making benchmarks before frontier models saturate them

- Our benchmarks differ from the real world in many ways, some of which are discussed in the original paper.

- Low vs high context (low-context tasks are isolated and don’t require prior knowledge about a codebase)

- Well-defined vs poorly defined

- “Messy” vs non-messy tasks (see section 6.2 of original paper)

- Different conventions around human baseline times could affect time horizon by >1.25x.

- I think we made reasonable choices, but there were certainly judgement calls here; the most important thing was to be consistent.

- Baseliner skill level: Our baseliner pool was “skilled professionals in software engineering, machine learning, and cybersecurity”, but top engineers, e.g. lab employees, would be faster.

- We didn’t incorporate failed baselines into time estimates because baseliners often failed for non-relevant reasons. If we used survival analysis to interpret an X-hour failed baseline as information that the task takes >X hours, we would increase measured task lengths.

- When a task had multiple successful baselines we aggregated these using the geometric mean. Baseline times have high variance, so using the arithmetic mean would increase averages by ~25%.

- A 50% time horizon of X hours does not mean we can delegate tasks under X hours to AIs.

- Some (reliability-critical and poorly verifiable) tasks require 98%+ success probabilities to be worth automating

- Doubling the time horizon does not double the degree of automation. Even if the AI requires half as many human interventions, it will probably fail in more complex ways requiring more human labor per intervention.

- To convert time horizons to research speedup, we need to measure how much time a human spends prompting AIs, waiting for generations, checking AI output, writing code manually, etc. when doing an X hour task assisted by an AI with time horizon Y hours. Then we plug this into the uplift equation. This process is nontrivial and requires a much richer data source like Cursor logs or screen recordings.

- 20% and 80% time horizons are kind of fake because there aren’t enough parameters to fit them separately.

- We fit a two-parameter logistic model which doesn’t fit the top and bottom of the success curve separately, so improving performance on 20% horizon tasks can lower 80% horizon.

- It would be better to use some kind of spline with logit link and monotonicity constraint. The reasons we haven't done this yet: (a) 80% time horizon was kind of an afterthought/robustness check, (b) we wanted our methods to be easily understandable, (c) there aren't enough tasks to fit more than a couple more parameters, and (d) anything more sophisticated than logistic regression would take longer to run, and we do something like 300,000 logistic fits (mostly for bootstrapped confidence intervals) to reproduce the pipeline. I do recommend doing this for anyone who wants to measure higher quantiles and has a large enough benchmark to do so meaningfully.

- Time horizons at 99%+ reliability levels cannot be fit at all without much larger and higher-quality benchmarks.

- Measuring 99% time horizons would require ~300 highly diverse tasks in each time bucket. If the tasks are not highly diverse and realistic, we could fail to sample the type of task that would trip up the AI in actual use.

- The tasks also need <<1% label noise. If they’re broken/unfair/have label noise, the benchmark could saturate at 98% and we would estimate the 99% time horizon of every model to be zero.

- Speculating about the effects of a months- or years-long time horizon is fraught.

- The distribution of tasks from which the suite is drawn is not super well-defined, so different reasonable extrapolations could yield quite different time-horizon trends.

- One example: all of the tasks in METR-HRS are self-contained, whereas most months-long tasks humans do require collaboration.

- If an AI has a 3-year time horizon, does this mean an AI can competently substitute for a human for a 3-year long project with the same level of feedback from a manager, or be able to do the human's job completely independently? We have no tasks involving multi-turn interaction with a human so there is no right answer.

- There is a good argument that AGI would have an infinite time horizon and so time horizon will eventually start growing superexponentially. However, the AI Futures timelines model is highly sensitive to exactly how superexponential future time horizon growth will be, which we have little data on. This parameter, “Doubling Difficulty Growth Factor”, can change the date of the first Automated Coder AI between 2028 and 2050.
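To make the point about 20% and 80% horizons concrete, here is a minimal sketch of a two-parameter logistic success curve in log task length. The parameterization, the function names, and the slope value are my assumptions for illustration, not the actual pipeline; the 289-minute figure is just the 4 hrs 49 mins quoted above. Once the 50% horizon and a single slope are fixed, every other quantile is determined, so nothing can move the 80% horizon independently of the 20% horizon.

```python
import math

def success_probability(task_minutes, h50_minutes, slope):
    """Two-parameter logistic in log2(task length): P(success)."""
    z = slope * (math.log2(h50_minutes) - math.log2(task_minutes))
    return 1.0 / (1.0 + math.exp(-z))

def horizon_at(p, h50_minutes, slope):
    """Task length at which the curve crosses success probability p."""
    logit_p = math.log(p / (1.0 - p))
    return h50_minutes * 2.0 ** (-logit_p / slope)

# 289 minutes = 4h49m (from the text); the slope is a made-up value.
h50, slope = 289.0, 0.6
h80 = horizon_at(0.8, h50, slope)
h20 = horizon_at(0.2, h50, slope)

# The 80% and 20% horizons are mirror images around h50 on a log scale,
# and their ratio to h50 depends on the slope alone.
ratio_80_to_50 = h80 / h50
```

In this sketch `h80 * h20` always equals `h50 ** 2`, which is the sense in which the two tails are not fit separately.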

Despite these limitations, what conclusions do I still stand by?

- The most important numbers to estimate were the slope of the long-run trend (one doubling every 6-7 months) and a linear extrapolation of this trend predicting when AIs would reach 1 month / 167 working hours time horizon (2030), not the exact time horizon of any particular model. I think the paper did well here.

- Throughout the project we did the least work we could to establish a sufficiently robust result, because task construction and baselining were both super expensive. As a result, the data are insufficient to do some secondary and subset analyses. I still think it’s fine but have increasing worries as the benchmark nears saturation.

- Without SWAA the error bars are super wide, and SWAA is lower quality than some easy (non-software) benchmarks like GSM8K. This might seem worrying, but it’s fine because it doesn’t actually matter for the result whether GPT-2’s time horizon is 0.5 seconds or 3 seconds; the slope of the trend is pretty similar. All that matters is that we can estimate it at all with a benchmark that isn’t super biased.

- Some tasks have time estimates rather than actual human baselines, and the tasks that do have baselines have few of them. This is statistically ok because in our sensitivity analysis, adding IID baseline noise had minimal impact on the results, and the range of task lengths (spanning roughly a factor of 10,000) means that even baselining error correlated with task length wouldn’t affect the doubling time much.

- However, Tom Cunningham points out that most of the longer tasks don’t have baselines, so if we're systematically over/under-estimating the length of long tasks we could be misjudging the degree of acceleration in 2025.

- The paper had a small number of tasks (only ~170) because we prioritized quality over quantity. The dataset size was originally fine but is now becoming a problem as we lack longer, 2h+ tasks to evaluate future models.

- I think we’re planning to update the task suite soon to include most of the HCAST tasks (the original paper had only a subset) plus some new tasks. Beyond this, we have various plans to continue measuring AI capabilities, both through benchmarks and other means like RCTs.
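The arithmetic behind a doubling-time extrapolation is simple enough to sketch. The function names are mine, and the 1-hour starting horizon is a hypothetical input rather than a measured value; the 6x-in-9-months, 7-month doubling, and 167-hour figures come from the text above.

```python
import math

def doubling_time_months(elapsed_months, growth_factor):
    """Doubling time implied by a given growth factor over a period."""
    return elapsed_months / math.log2(growth_factor)

def months_to_reach(current_hours, target_hours, doubling_months):
    """Months until the horizon reaches target, assuming a constant doubling time."""
    return doubling_months * math.log2(target_hours / current_hours)

# ~6x growth in 9 months, as mentioned in the introduction.
recent_doubling = doubling_time_months(9.0, 6.0)

# Hypothetical 1-hour starting horizon, 7-month doubling, 167-hour target.
months_needed = months_to_reach(1.0, 167.0, 7.0)
```

Under these assumptions the recent growth implies a doubling time of about 3.5 months, noticeably faster than the long-run 6-7 months, and the hypothetical 1-hour model would need a bit under 52 months at the long-run rate.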

[1] see eg DeepSeek R1 paper: https://arxiv.org/abs/2501.12948

After @Daniel Kokotajlo invited me to the AI Futures office I ended up talking to Eli and Alex for about an hour, and feel like I have a decent understanding of the model:

Summary of the AI Futures Model

- Compute and effective compute

- Actual compute is stock of compute at time t

- Effective compute is used as the main measure of AI capabilities. It is defined as the "amount of training compute we’d need to train models as performant as the frontier models at time t using the training process of the present-day".

- is estimated by relating it to time horizon.

- Compute is allocated as fixed percentages between training, experiments, and automated coders

- Effective labor

- The % of tasks automatable is a logistic function of log effective compute

- Once a task can be automated, it will still get more efficient over time by a multiplier

- The multiplier is zero for non-automated tasks. Once effective compute reaches the level required to automate a task, the multiplier increases as a power law.

- Human coding labor and automation compute are optimally allocated between tasks

- Overall coding labor for task i is the sum of human and AI labor

- Aggregate coding labor is CES between the labor applied to all different tasks, with low substitutability by default, meaning tasks only substitute slightly for each other

- Finally, serial coding labor , indicating diminishing returns of about to adding more labor in parallel

- “Experiment throughput” is CES between serial coding labor and experiment compute

- Labor and compute are slight complements (median estimate )

- There are also diminishing returns to compute, with where by default

- Research taste

- Human research taste is lognormally distributed with median researchers defined as 1x taste and 99.9th percentile (+3.1SD) researchers assumed to have 3.70x research taste

- An Automated Coder–level AI has research taste

- AI research taste increases as a power law in effective compute (AI “research taste IQ” is standard deviations above the human median, which is then passed through an exponential to get research taste)

- AIs replace whatever humans they’re better than. The aggregate research taste of the company is the mean of all remaining researchers. This means it initially increases slowly as AIs replace the worst researchers, then speeds up as everyone starts using the AIs’ research taste which keeps improving.

- Research effort RE(t) = research taste * experiment throughput

- Then software efficiency follows the Jones model

- is how much harder AI R&D gets as software efficiency advances

- Finally this feeds back into effective compute

- A taste-only singularity happens when , where = doublings of research taste per doubling in effective compute. This would cause improvements to go faster and faster until approaching physical limits. Eli's parameter choices give 38% chance of taste-only singularity, but many of the non-singularity samples still get to ASI quickly, with the 50th percentile sample getting from AC to ASI in 5 years.

- For various reasons Eli and Daniel's all-things-considered views have harder takeoff than the model predicts, with Eli's median for AC -> ASI 2 years, and Daniel's median 1.5 years.
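For readers unfamiliar with the CES aggregation mentioned above for coding labor and experiment throughput, here is a minimal sketch of the standard textbook form. The weights, input values, and `rho` settings are made up for illustration and are not the model's parameters. The elasticity of substitution is 1/(1 - rho), so rho < 0 corresponds to complements.

```python
def ces(inputs, weights, rho):
    """CES aggregate: (sum_i w_i * x_i**rho) ** (1/rho), for rho != 0."""
    if rho == 0:
        raise ValueError("rho = 0 is the Cobb-Douglas limit; handle it separately")
    total = sum(w * x ** rho for w, x in zip(weights, inputs))
    return total ** (1.0 / rho)

# Illustrative inputs only: 2 units of labor, 8 units of compute.
labor, compute = 2.0, 8.0

# rho = 1: perfect substitutes, the aggregate is a weighted sum.
substitutes = ces([labor, compute], [0.5, 0.5], rho=1.0)

# rho = -1: complements, the scarcer input dominates the aggregate.
complements = ces([labor, compute], [0.5, 0.5], rho=-1.0)

# Doubling only labor under complementarity gives less than 2x output.
gain_from_doubling_labor = ces([2 * labor, compute], [0.5, 0.5], rho=-1.0) / complements
```

With complements, doubling labor alone raises the aggregate by well under 2x, which is the sense in which compute bottlenecks experiment throughput in a setup like this.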

Notes on Sensitivity analysis

- Time to AC is very sensitive to how superexponential time horizon growth is, and also to

- The present doubling time

- Time horizon for automated coder

- Time from AC to ASI is very sensitive to the “automated research taste slope” : how much “research IQ” AIs gain per doubling of effective training compute. But many other factors could slow down the AC-to-ASI duration to >6.5 years:

- Median-to-top-human jumps above SAR needed to reach TED-AI

- The software efficiency growth rate in 2024

- Median to 99.9th% human research taste multiplier

- Slowdown from 10x less experiment compute

- Research progress rate in the limit of infinite coding labor: mostly because it’s highly uncertain (their 90% CI is 2.0-201)

- Automated research taste of an AC

Biggest uncertainties to track

(not necessarily that I disagree, just need to think about it more)

- Effective compute vs time horizon: how do all the assumptions look when we eliminate time horizon from the model and use other methods to model effective compute growth? I’m sketched out by the huge error bars on time horizon superexponentiality → time to AC

- Ryan thinks >70% of code at Anthropic was written by AIs already in October 2025 but it’s mostly low-value code. Code varies dramatically in value, and AIs can expand the number and type of low-value tasks done rather than just substituting for humans. This may be a separate effect from AIs doing extra work on tasks that can be automated, which is not tracked by the model.

- It might be that coding ability and research taste are two ends of a continuous spectrum from small-scale to large-scale tasks.

- Research taste:

- Someone really needs to do experiments on this; it’s possible now. David Rein and I are actively thinking about it.

- Is human research taste modeled correctly? Eg it seems likely to me that the 0.3% of top humans add more than 0.3%*3.7x to the “aggregate research taste” of a lab because they can set research directions. There are maybe more faithful ways to model it; all the ones Eli mentioned seemed far more complicated.

- Is modeling AI research taste as exponential in human standard deviations valid? I have no idea whether someone 9 standard deviations above the human median would be able to find 3.7^(9/3) = 50x better research ideas or not

- Is CES valid for experiment throughput at these extreme values of labor and compute? It seems like a superhuman AI researcher might learn to run experiments more efficiently, decreasing the compute required for each experiment. The estimates for experiment throughput parameters were all about humans getting 10x compute, infinite labor, etc. Or, they could coordinate better (especially with all the human ex-coders to help them), and decrease the parallelization penalties for labor and/or compute. I’m not sure if this would be different from adjusting research taste.
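The research taste questions above are easier to reason about with the arithmetic written out. This is a minimal sketch under my reading of the summary: taste is exponential in standard deviations above the human median, calibrated so that +3.1 SD gives 3.70x, and every human worse than the AI is replaced by the AI before taking the mean. Both function names and the replacement rule are assumptions, not the model's confirmed formulas.

```python
from statistics import mean

TOP_MULTIPLIER = 3.70  # taste of a 99.9th-percentile researcher (from the text)
TOP_SD = 3.1           # that percentile expressed in standard deviations

def taste_multiplier(sd_above_median):
    """Research taste as an exponential in SDs above the human median."""
    return TOP_MULTIPLIER ** (sd_above_median / TOP_SD)

def aggregate_taste(human_tastes, ai_taste):
    """Mean taste when each human worse than the AI is replaced by the AI.

    This replacement rule is one interpretation of the summary.
    """
    return mean(max(h, ai_taste) for h in human_tastes)

# Nine SDs above the median, using 3.1 SD per 3.70x step.
nine_sd = taste_multiplier(9.0)

# The rounder back-of-envelope version used in the text: 3.7^(9/3).
rounded_version = 3.7 ** (9 / 3)
```

Using 3.1 SD per step gives roughly 45x at nine SDs, while the rounded 3.7^(9/3) gives roughly 50x; either way the open question is whether such an extrapolation means anything.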

Thoughts in no particular order:

- Kudos for what seems to be lots of thoughtful work incorporated into this model.

- There are a lot of parameters. Maybe this is necessary but it's a bit overwhelming and requires me to trust whoever estimated the parameters, as well as the modeling choices.

- I couldn't find a block of equations that represents the whole model, or an index of variables in one place, and it's difficult to go between math and exposition, especially when the equations are hidden in dropdowns, so I still feel like I don't have a good picture. I had Claude produce a summary and read through it. It looks reasonable, but some parts are still not defined in Claude's summary, I think because the whole page is rendered in JavaScript and Claude couldn't access it. I would love to visit the AI Futures office again to understand the model better.

- I find the use of time horizon as such a crucial intermediate variable confusing and am scared of potential assumptions around it.

- Time horizon is underdefined on years-long tasks. I know I talked to the AI Futures team about what you now wrote up as METR-HRS-Extended to operationalize it, but it's unclear what a 3-year time horizon really means (when extrapolating the trend with superexponential adjustment) given factors like the increased number of details and interaction with longer tasks. Does the trend mean that in X years, an AI will competently substitute for a human for a 3-year long project with the same level of feedback from a manager, or be able to do the human's job with less feedback?

- The function that relates time horizon and research speedup in the real world is very unclear. I'm trying to collect data on this and it's highly nontrivial to model and interpret[1] so I'm skeptical of any use of the time horizon trend to predict uplift that doesn't either have a simple, robust model or something validated by experiment.

- The description implies that time horizon is only used in the first phase (pre-AC), but I don't understand how it's used. Humans and AIs will probably go from their current state to perfectly substitutable in some kind of continuous progression and I couldn't find the formula for this. Also when I changed the "How much easier/harder each coding time horizon doubling gets" parameter by small amounts, the forecasted time from AC to ASI changes significantly (2.7 years at 0.90, over 4 years for 1.00), so it looks like stages 2 and 3 are affected as well.

- It seems to me like a time horizon of 3 years or 125 years is complete overkill for automation of enough coding for the bottleneck to shift to experiments and research taste.

- Why not assume that compute is allocated optimally between experiment, inference, etc. rather than assuming things about the behavior of AI companies?

- I wish the interface updated faster, closer to 100ms than 1s, but this isn't a big deal. I can believe it's hard to speed up code that integrates these differential equations many times per user interaction.

[1] Eg looking at transcripts to determine where humans are spending their time when they give Cursor tasks of a certain length

I didn't really define software intelligence explosion, but had something in mind like "self-reinforcing gains from automated research causing capabilities gains in 6 months to be faster than the labor/compute scaleup-driven gains in the 3 years from 2023-2025", and the question I was targeting with the second part was "After the initial speed-up from ASARA, does the pace of progress accelerate or decelerate as AI progress feeds back on itself?"

A 23.5x improvement alone seems like it would qualify as a major explosion if it happened in a short enough period in time

Seems about true. I claim that the nanogpt speedrun suggests this is only likely if future AI labor is exponentially faster at doing research than current humans, with many caveats of course, and I don't really have an opinion on that.

We already know that there is of course a fundamental limit to how fast you can make an algorithm, so the question is always "how close to optimal are current algorithms". It should be our very strong prior that any small subset of frontier model training will hit diminishing returns much quicker than the complete whole.

This is not as small a subset of training as you might think. The 53 optimizations in the nanogpt speedrun touched basically every part of the model, including the optimizer, embeddings, attention, other architectural details, quantization, hyperparameters, code optimizations, and Pytorch version. The main two things that limit a comparison to frontier AI are scale and data improvement. It's known there are many tricks that work at large scale but not at small scale. If you believe the initial 15x speedup is analogous and that the larger scale gives you a faster, then maybe we get something like a 100x speedup atop our current algorithms? But I don't really believe that the original nanoGPT, which was a 300-line repo written to be readable rather than efficient [1], is analogous to our current state. If there were a bunch of low-hanging fruit that could give strongly superlinear returns, we would see 3x/year efficiency gains with small increases in labor or compute over time, but we actually require 5x/year compute increase and ~3x per year labor increase.

A software intelligence explosion is completely possible with linear speedups in cumulative effort. Indeed, it is possible with sublinear increases in cumulative effort.

Agree I was being a bit sloppy here. Whether the derivative is infinite is not what matters in Davidson's model or in my mind; what matters is whether the pace of progress accelerates or decelerates. It could still be very fast as it decelerates, but I'm not really thinking in enough detail to model these borderline cases, so maybe we should think of the threshold for very fast software-driven progress as r > 0.75 or something rather than r > 1.

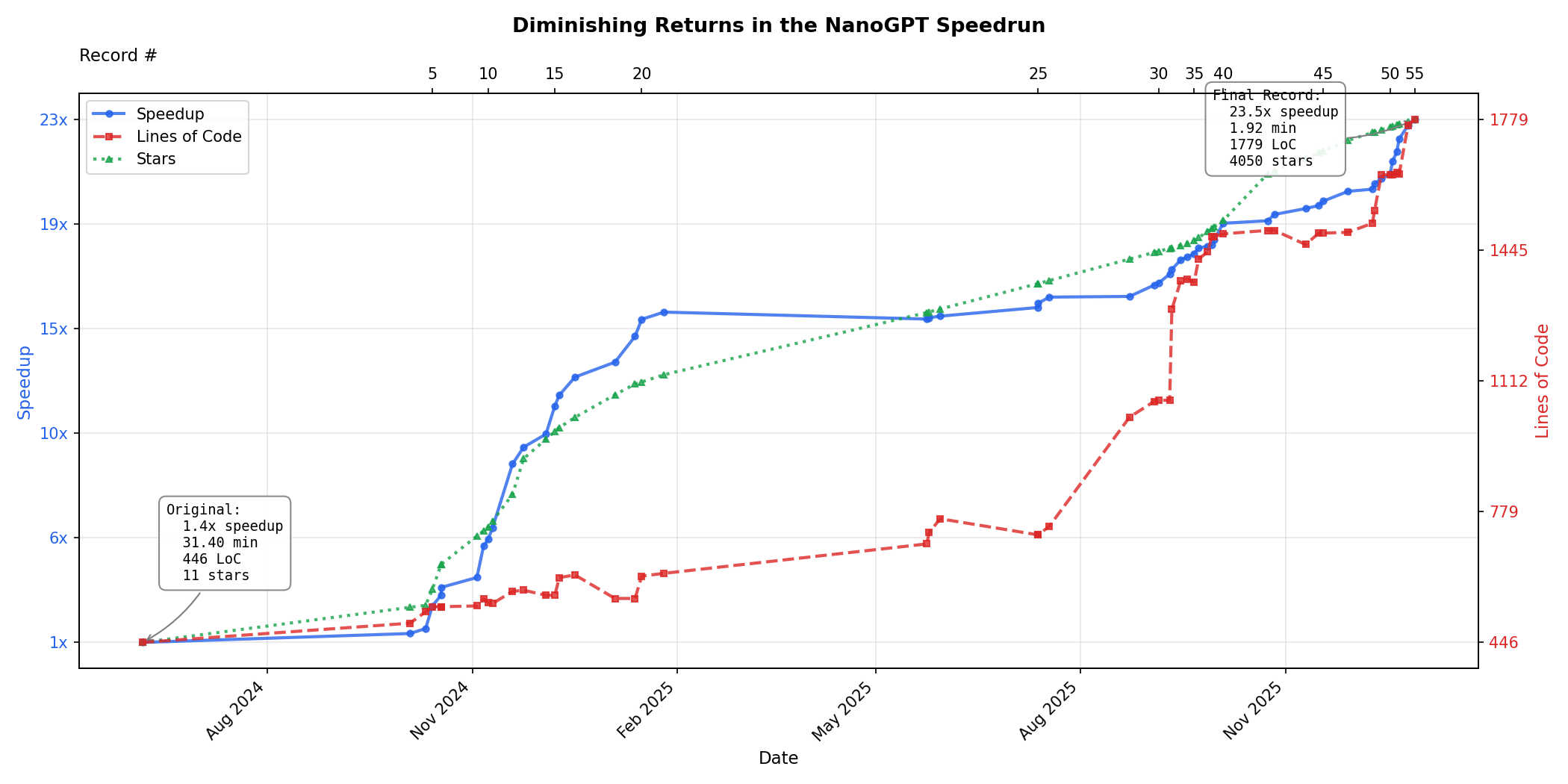

Diminishing returns in the NanoGPT speedrun:

To determine whether we're heading for a software intelligence explosion, one key variable is how much harder algorithmic improvement gets over time. Luckily someone made the NanoGPT speedrun, a repo where people try to minimize the amount of time on 8x H100s required to train GPT-2 124M down to 3.28 loss. The record has improved from 45 minutes in mid-2024 down to 1.92 minutes today, a 23.5x speedup. This does not give the whole picture (the bulk of my uncertainty is in other variables), but given that this is existing data it's worth looking at.

I only spent a couple of hours looking at the data [3], but there seem to be sharply diminishing marginal returns, which is some evidence against a software-only singularity.

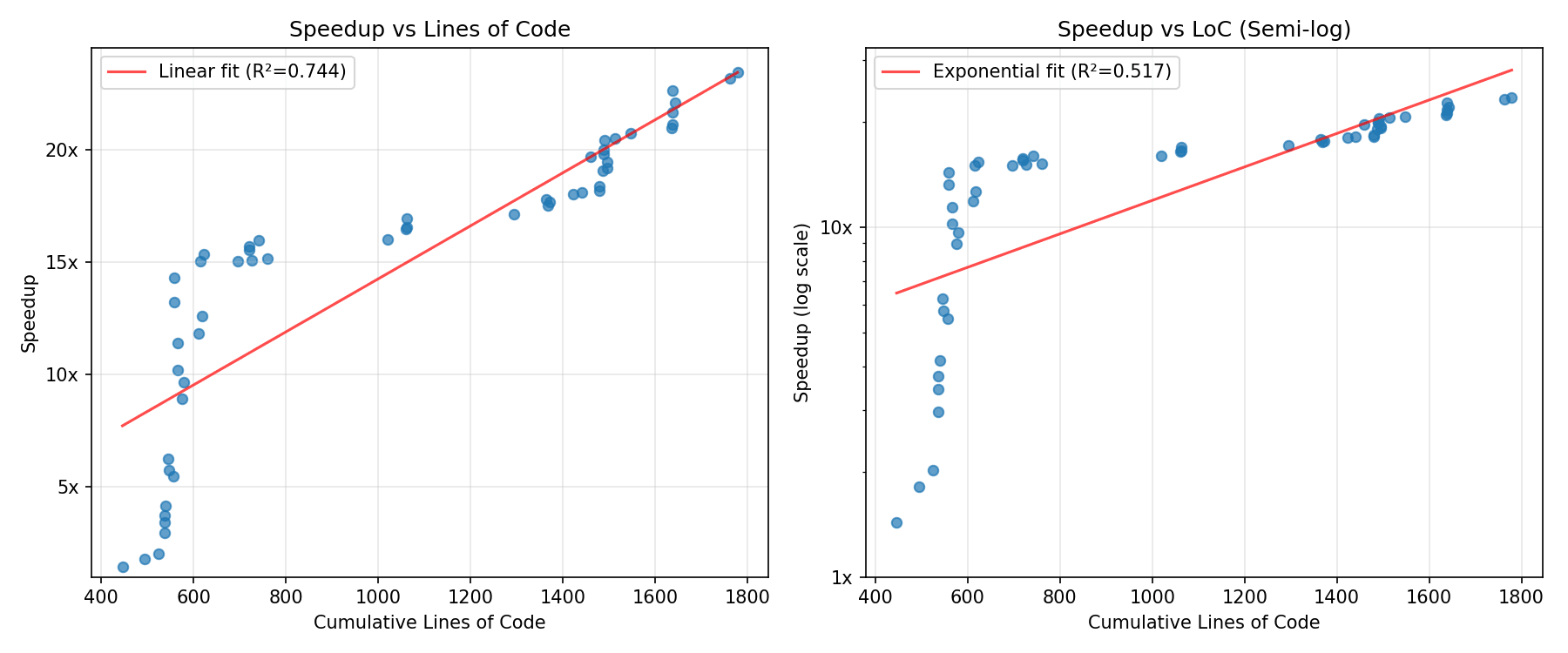

At first, improvements were easy to make without adding many lines of code. Later improvements were smaller and each required more and more LoC, which means very strong diminishing returns: speedup is actually sublinear in lines of code. This could be an artifact related to the very large elbow early on, but I mostly believe it.

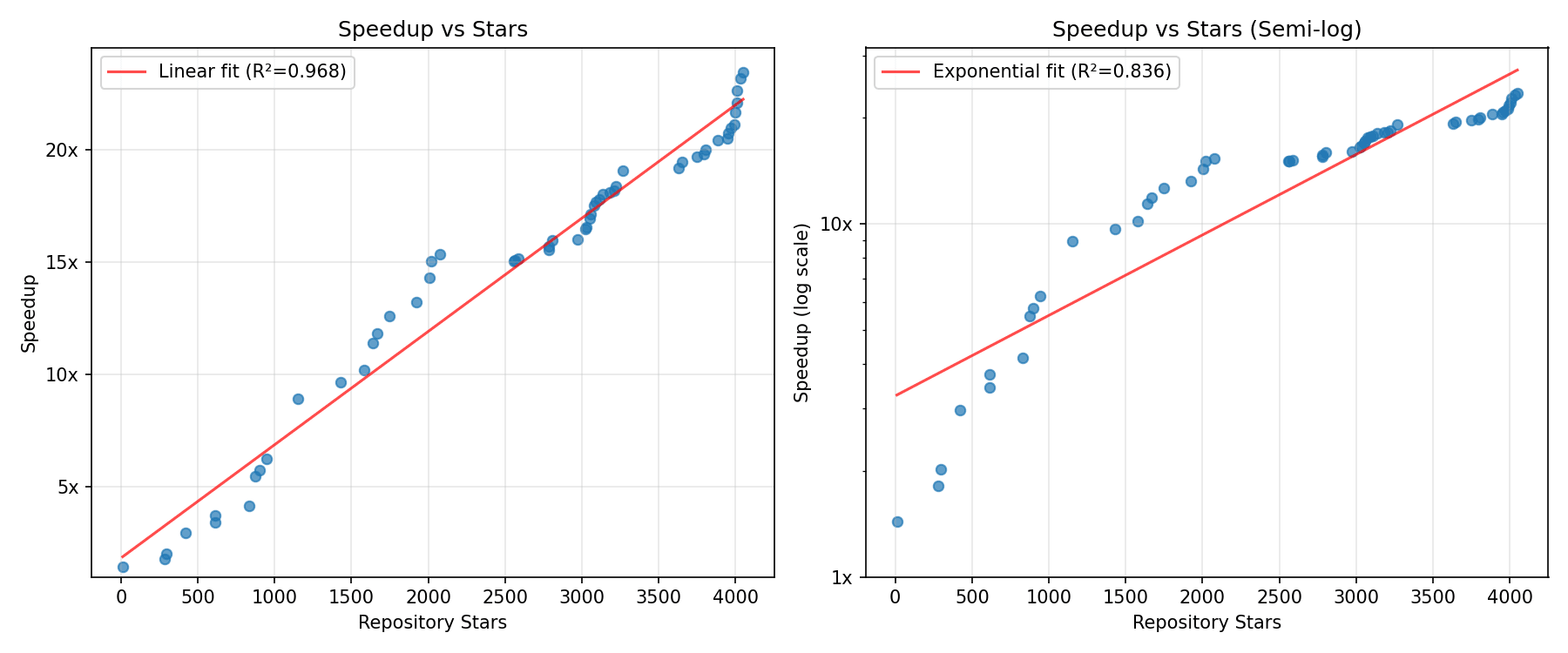

If we instead look at number of stars as a proxy for amount of attention on the project [4], there are no diminishing returns. The data basically suggest speedup is linear in effort [1], which is consistent with a world where 3x/year increases in labor and compute are required to sustain the historical trend of ~3x/year algorithmic speedups observed by Epoch. However, this still points against a software intelligence explosion, which would require superlinear speedups for linear increases in cumulative effort.

Given that the speedup-vs-stars and speedup-vs-improvement-# graphs are linear but speedup-vs-LoC is sublinear, our guess should be that returns to research output are somewhat sublinear. In the language of Davidson's semi-endogenous growth model, this means [2]. Of course there are massive caveats about extrapolation to future models.
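For reference, here is a generic Jones-style (semi-endogenous) law of motion, worked out for constant research effort. The symbols $A$ (software efficiency), $E$ (research effort), $\lambda$, $\beta$, and the constant $k$ are labels I am assuming for illustration; they may not match Davidson's notation exactly.

```latex
\begin{align*}
\dot{A}(t) &= k\,E(t)^{\lambda}\,A(t)^{1-\beta} \\
\text{With } E \text{ constant and } c &= k E^{\lambda}: \\
\beta = 0 &:\quad A(t) = A_0\,e^{ct} && \text{(exponential)} \\
\beta = 1 &:\quad A(t) = A_0 + ct && \text{(linear)} \\
\beta > 1 &:\quad A(t) = \left(A_0^{\beta} + \beta c t\right)^{1/\beta} && \text{(slower than linear)}
\end{align*}
```

The last line follows from separating variables: $A^{\beta-1}\,dA = c\,dt$ integrates to $A^{\beta}/\beta = ct + A_0^{\beta}/\beta$. In this parameterization, sublinear returns to cumulative effort correspond to the $\beta > 1$ case.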

In Davidson's model, the requirement for a software intelligence explosion after research is automated is , where represents inefficiency of parallel work and is the elasticity of research output to cognitive labor at a fixed compute budget. If , this mathematically means and we don't get an SIE.

So I think an SIE will only happen if one or more of the below is true:

- Cognitive quality of future AIs being so high that one hour of AIs researching is equivalent to exponentially large quantities of human researcher hours, even if they don't train much more efficiently to the same capability level. This is the most important question to answer and something I hope METR does experiments on in Q1.

- Some other difference between nanogpt and frontier AI research, e.g. maybe research ability is easier to improve than base model loss

- Once we get AGI, something other than human labor, AI labor, or compute scales exponentially on a much faster timescale, e.g. training data

- A paradigm shift to a totally different architecture that wouldn't be captured in a dataset only 1.5 years long

- AIs with 2x the efficiency cause research to happen more than 2x faster, despite outweigh the diminishing returns from parallel work AND being bottlenecked by compute, making . I can't think of a way this could happen, and to get an SIE it would have to be even larger than 1.

[1]: This was previously observed in a tweet from Epoch in February but now we have about twice the data.

[2]: would mean exponential improvements, while implies linear improvement over time at constant labor/compute. So means improvements are actually slower than linear.

[3]: A few minutes ideating, almost an hour writing a prompt for Claude 4.5 Opus, then 30 minutes making graphs and such.

[4]: It's unclear whether to say that stars represent instantaneous effort or total cumulative effort on the project. If we interpret it as instantaneous effort, then we would see diminishing returns. Also it's unclear whether stars are measuring or ; if it might imply slightly increasing returns.

I'm giving this +1 review point despite not having originally been excited about this in 2024. Last year, I and many others were in a frame where alignment plausibly needed a brilliant idea. But since then, I've realized that execution and iteration on ideas we already have is highly valuable. Just look at how much has been done with probes and steering!

Ideas like this didn't match my mental picture of the "solution to alignment", and I still don't think it's in my top 5 directions, but with how fast AI safety has been growing, we can assign 10 researchers to each of 20 "neglected approach"es like this, so it deserves +1 point.

The post has an empirical result that's sufficient to concretize the idea and show it has some level of validity, which is necessary. Adam Jones has a critique. However, the only paper on this so far didn't make it to a main conference and only has 3 cites, so the impact isn't large (yet).

I didn't believe the theory of change at the time and still don't. The post doesn't really make a full case for it, and I doubt it really convinced anyone to work on this for the right reasons.

- No BOTEC

- Models of social dynamics are handwavy

- In alignment and other safety work, we have force multipliers like neglectedness and influencing government. What's the multiplier here? Or is there no intervention that has multiplier effects in the long-term multipolar risk space?

- Why wouldn't the effect just get swamped by the dozens of medical AI startups that are raising billions of dollars as we speak?

It may do a good job of giving the author's perspective, but given all these gaps it's not very memorable today in explaining the risks OP cares about, even if we do end up worrying about them in 5-10 years.

To clarify I'm not very confident that AI will be aligned; I still have a >5% p(takeover doom | 10% of AI investment is spent on safety). I'm not really sure why it feels different emotionally but I guess this is just how brains are sometimes.

I'm glad to see this post come out. I've previously opined that solving these kinds of problems is what proves a field has become paradigmatic:

Paradigms gain their status because they are more successful than their competitors in solving a few problems that the group of practitioners has come to recognize as acute. ––Thomas Kuhn

It has been proven many times across scientific fields that a method that can solve these proxy tasks is more likely to achieve an application. The approaches sketched out here seem like a particularly good fit for a large lab like GDM, because the North Star can be somewhat legible and the team has enough resources to tackle a series of proxy tasks that are relevant and impressive. Not that it would be a bad fit elsewhere either.

The simple model I mentioned on Slack (still WIP, hopefully to be written up this week) tracks capability directly in terms of labor speedup and extrapolates that. Of course, for a more serious timelines forecast you have to ground it in some data.

Here's what I said to Eli on Slack; I don't really have more thoughts since then

we can get f_2026 [uplift fraction in 2026] from

v [velocity of automation as capabilities improve] can be obtained by