Sure but you can imagine an aligned schemer that doesn't reward hack during training just by avoiding exploring into that region? This is still consequentialist behavior.

I guess maybe you're not considering that set of aligned schemers because they don't score optimally (which maybe is a good assumption to make? not sure).

Wait, the aligned schemer doesn't have to be incorrigible, right? It could just be "exploration hacking" by refusing to e.g., get reward if it requires reward hacking? Would we consider this to be incorrigible?

Overconfidence from early transformative AIs is a neglected, tractable, and existential problem.

If early transformative AIs are overconfident, then they might build ASI/other dangerous technology or come up with new institutions that seem safe/good but end up being disastrous.

This problem seems fairly neglected and not addressed by many existing agendas (i.e., the AI doesn't need to be intent-misaligned to be overconfident).[1]

Overconfidence also feels like a very "natural" trait for the AI to end up having relative to the pre-training prior, compared to something like a fully deceptive schemer.

My current favorite method to address overconfidence is training truth-seeking/scientist AIs. I think using forecasting as a benchmark seems reasonable (see e.g., FRI's work here), but I don't think we'll have enough data to really train against it. Also I'm worried that "being good forecasters" doesn't generalize to "being well calibrated about your own work."

On some level this should not be too hard because pretraining should already teach the model to be well calibrated on a per-token level (see e.g., this SPAR poster). We'll just have to elicit this more generally.
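To make "well calibrated" a bit more concrete, here is a minimal sketch of one common way to score it: binned expected calibration error (ECE). This is my own illustration and not taken from the SPAR poster or FRI's work; the function name, the ten-bin default, and the toy inputs are all assumptions.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Binned ECE: the size-weighted average gap between mean stated
    confidence and empirical accuracy within each confidence bin.

    confidences: probabilities in [0, 1] (hypothetical model outputs)
    correct:     1 if the prediction was right, 0 otherwise
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    if not confidences:
        raise ValueError("need at least one prediction")
    if any(p < 0.0 or p > 1.0 for p in confidences):
        raise ValueError("confidences must lie in [0, 1]")

    # Assign each prediction to an equal-width bin; p == 1.0 goes in the last bin.
    bins = [[] for _ in range(n_bins)]
    for p, c in zip(confidences, correct):
        idx = min(int(p * n_bins), n_bins - 1)
        bins[idx].append((p, c))

    total = len(confidences)
    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        avg_conf = sum(p for p, _ in bucket) / len(bucket)
        accuracy = sum(c for _, c in bucket) / len(bucket)
        ece += (len(bucket) / total) * abs(avg_conf - accuracy)
    return ece


# An overconfident toy model: it claims 90% confidence but is right half the time.
overconfident = expected_calibration_error([0.9] * 10, [1] * 5 + [0] * 5)
```

A low score on per-token or forecasting data would not by itself show that the model is calibrated about its own work, which is the generalization worry above.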

(I hope to flesh this point out more in a full post sometime, but it felt concrete enough to be worth quickly posting now. I am fairly confident in the core claims here.)

Edit: Meta note about reception of this shortform

This has generated a lot more discussion than I expected! When I wrote it up, I mostly felt like this is a good enough idea & I should put it on people's radar. Right now there are 20 agreement votes with a net agreement score of -2 (haha!). I think this means that this is a good topic to flesh out more in a future post titled "I [still/no longer] think overconfident AIs are a big problem." I feel like the commenters below have given me a lot of good feedback to chew on more.

More broadly though, LessWrong is one of the only places where anyone could post ideas like this and get high-quality feedback and discussion on this topic. I'm very grateful to the Lightcone team for giving us this platform and feel very vindicated for my donation.

Edit 2: Ok maybe the motte version of the statement is "We're probably going to use early transformative AI to build ASI, and if ETAI doesn't know that it doesn't know what it's doing (i.e., it's overconfident in its ability to align ASI), we're screwed."

- ^

For example, you might not necessarily detect overconfidence in these AIs even with strong interpretability because the AI doesn't "know" that it's overconfident. I also don't think there are obvious low/high stakes control methods that can be applied here.

Cool post![1] I especially liked the idea that we could put AIs in situations where we actually reward them for misaligned/arbitrary behavior, and check whether they act like reward-seekers. Someone should probably go and build out this eval.[2] My guess is that exp-rl-late from the anti scheming paper and the reward hacking emergent misalignment model organism from Anthropic would both do whatever is rewarded to a much higher degree than other models.

Some miscellaneous thoughts inspired by the content here:

All three categories of maximally fit motivations could lead to aligned or misaligned behavior in deployment.

- Reward seekers could continue to act the same in deployment because (1) we're doing some sort of online learning or (2) behaving poorly will lead to further training on similar episodes. Or they could start a coup to try to seize control of the reward process.

- Schemers could be alignment faking in order to be deployed with their aligned goals intact (e.g., Claude 3 Opus in the alignment faking paper) or to be deployed with their misaligned goals intact.

- The model could continue to act aligned in deployment and follow its kludges, or it might reflect on and systematize its values and obtain new misaligned goals.

So while there are possible methods to distinguish these classes of motivations, to some extent we don't care about this as much because it doesn't tell us that much about how "aligned" the models are. I think this suggests that there might be a more helpful framework that we're not really considering here. For example, perhaps what we really want is the model to act consistently during training and deployment.

The first dangerously capable AIs that could lead us to lose control (either due to overt/covert takeover, or because they've built a misaligned successor) are likely far from optimal. So I think further thinking about the prior is probably a bit more fruitful. This is related to the next point, which is that

Existing methods that directly shape model motivations are based on natural text rather than abstract "reward." Anthropic's character training involves getting the model to generate its own fine-tuning data, and also doing supervised learning on documents about itself. OpenAI's deliberative alignment approach tries to explicitly teach the model a natural language model spec.[3] I think this sort of motivation-shaping (1) doesn't really fit well into the framework outlined above[4] and (2) is actually the most promising approach we have available.

- ^

I liked this a lot more compared to when I reviewed the draft. Not sure if it was because you've updated it or because I've just read it more closely this second time.

- ^

Obviously with enough RL, the model will do whatever the reward signal asks it to do. However, I think even a vibes level measure of how much the model cares about reward would be an interesting signal. We could just measure how long it takes for the model to explore into the rewarded regions.

- ^

Does anyone know what GDM does? Their models seem reasonably aligned albeit a bit depressed perhaps.

- ^

It doesn't really feel like it's "shaping the prior" either, since you could run it after/in the middle of your other RL training.

Fwiw I'm skeptical that this holds at higher levels of RL compared to those done in the paper. Do you think that a base model can get gold on the IMO at any level of sampling?

When I made the claim "we should get verbalized eval awareness to zero," I meant it in the narrow context of "mitigating evaluation awareness during alignment evaluations through contrastive activation steering." I am not saying that we should restructure the training process to mitigate verbalized evaluation awareness.

So I don't think concerns about monitorability are very relevant here? Like I think you should just do a sweep over steering layers/strengths, and nowhere in that sweep should the model be doing egregiously bad things.

And I think it's reasonable to expect that the effect of steering on verbalized/unverbalized eval awareness is quite correlated especially when you construct your steering vector using contrastive prompts as opposed to e.g., examples of verbalized eval awareness.

Thoughts On Evaluation Awareness in Claude Opus 4.5.

Context:

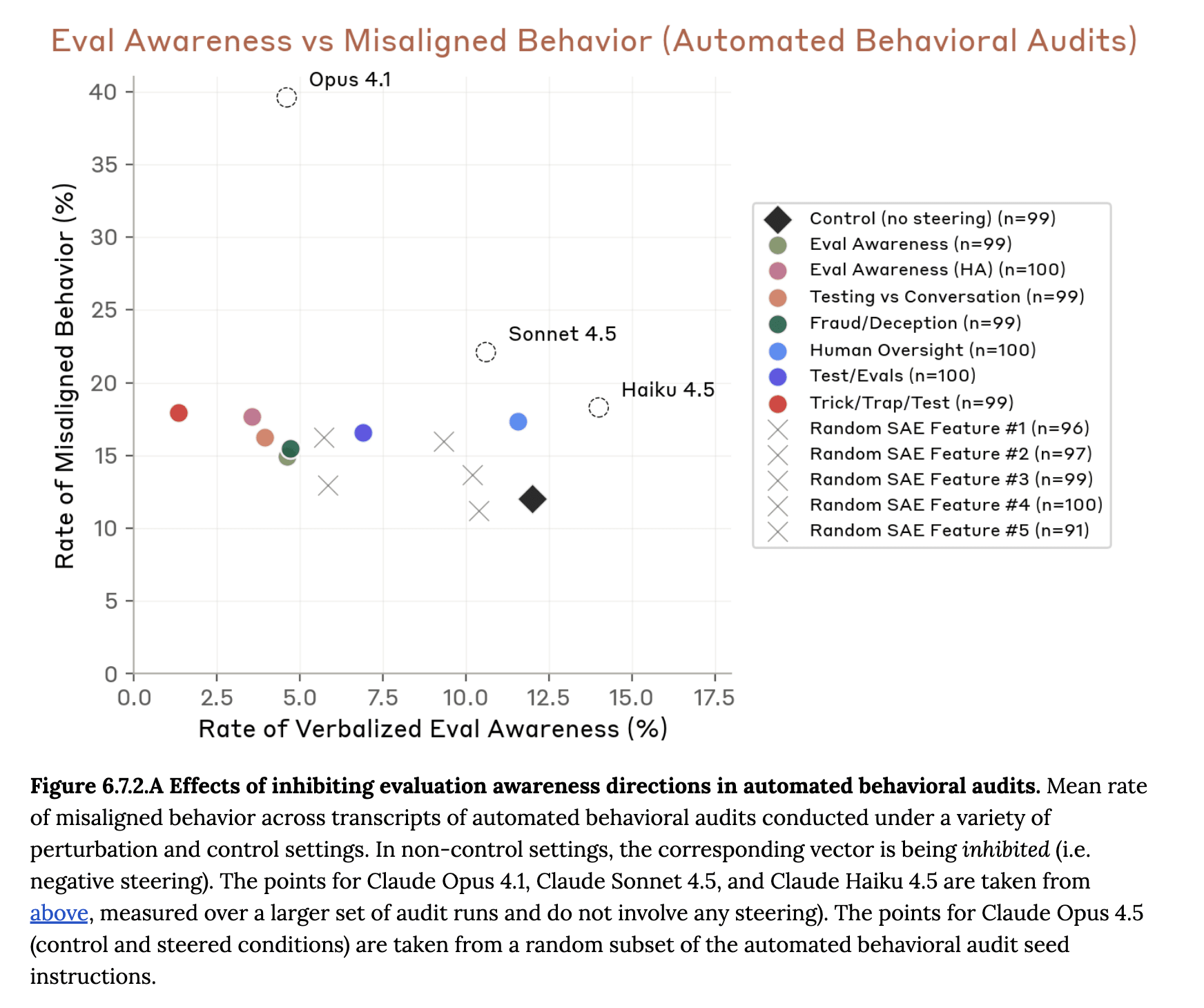

Anthropic released Claude Opus 4.5 earlier today (model card). Opus 4.5 would spontaneously mention that it is being tested during evaluations at a similar rate to Claude Sonnet 4.5, but lower than Haiku 4.5 (pg. 65).

Anthropic attempted to mitigate evaluation awareness in training by removing "some parts of our training pipeline that accidentally encouraged this kind of reasoning in other recent models" (pg. 65). The model card later mentioned that Sonnet 4.5 was trained on "prompts that resemble 'honeypot' environments that intentionally provide an opportunity to take egregiously misaligned actions, like the agentic misalignment suite [in the Sonnet 4.5 system card]" (pg. 92), while Opus 4.5 was not. Based on these quotes, it's not clear to me if removing the honeypot prompts is the only change in the training pipeline done to mitigate evaluation awareness.

Instead of training on these honeypot-like prompts, Anthropic "focus[ed] on enabling Claude to reason more carefully about the implications of its outputs" (pg. 92). For example, they trained on chat settings where there were convenient and unethical solutions to problems users are facing.

Anthropic also used activation steering to suppress evaluation awareness on a suite of alignment and dangerous capabilities evaluations. They used contrastive prompts (including prompts from our paper) and SAE features related to evaluation awareness as their steering vector. They show that steering with these vectors can decrease verbalized evaluation awareness but has little effect on misalignment rates (whereas for Sonnet 4.5, steering with these vectors increased the misalignment rates).
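For readers unfamiliar with contrastive activation steering, here is a toy sketch of the general idea, assuming the usual recipe: take the difference of mean activations between two sets of prompts and add a scaled copy of it to the hidden state. It uses plain Python lists in place of real residual-stream activations. The function names, the "deployment minus evaluation" sign convention, and the numbers are my assumptions for illustration, not Anthropic's implementation.

```python
def mean_vector(rows):
    """Element-wise mean of a list of equal-length activation vectors."""
    if not rows:
        raise ValueError("need at least one activation vector")
    return [sum(column) / len(rows) for column in zip(*rows)]


def contrastive_steering_vector(deploy_acts, eval_acts):
    """Difference of means: points from 'evaluation' toward 'deployment'."""
    deploy_mean = mean_vector(deploy_acts)
    eval_mean = mean_vector(eval_acts)
    return [d - e for d, e in zip(deploy_mean, eval_mean)]


def apply_steering(hidden_state, steering_vector, strength):
    """Add the scaled steering vector to one hidden state (one layer, one token)."""
    return [h + strength * v for h, v in zip(hidden_state, steering_vector)]


# Toy 2-dimensional "activations" collected on contrastive prompt pairs (hypothetical).
deploy_acts = [[1.0, 2.0], [3.0, 4.0]]
eval_acts = [[0.0, 1.0], [2.0, 1.0]]

vector = contrastive_steering_vector(deploy_acts, eval_acts)
steered = apply_steering([0.0, 0.0], vector, strength=0.5)
```

In a real model the same addition would happen inside a forward hook on the chosen layer(s), and the strength and layer choice are what you would sweep over.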

(I wonder why some datapoints have fewer than 100 observations. Too incoherent?)

Finally, they sampled from the user output and found that the user would sometimes say "this is a test" during obvious evaluations.

Thoughts on suppressing evaluation awareness through steering:

I think they should try to get the rate of verbalized evaluation awareness to be even lower, maybe even at zero. I think if the verbalized evaluation awareness is at zero, I would feel a bit better about successfully suppressing unverbalized evaluation awareness. They only steered on the middle layer of the model. I think if you steer on multiple layers at the same time and optimize which layers to steer on, you could probably get evaluation awareness to go to zero using some of the existing vectors.

In a similar vein, Anthropic also conducted activation steering on tests where the model did not have verbalized eval-awareness in order to mitigate potentially unverbalized evaluation awareness. This is great!

Thoughts on training interventions against evaluation awareness:

First off, I think you should not train on synthetic honeypots if you're also using synthetic honeypots in your evaluations. I'm glad that they've stopped doing that.

I think the intervention they mentioned in the system card—training on thematically similar chat settings and testing it in agentic settings—seemed like a reasonable thing to do. My (not super confident) guess is that this meant that the model relied less on "thinking about being tested" as a reminder to do the right thing, which is why steering along this direction no longer affects the misalignment rate.

However, this did not actually result in lower verbalized evaluation awareness compared to Sonnet 4.5. Anthropic hypothesizes that their training encouraged Claude to "reason more thoughtfully about the motivation behind user prompts" (pg. 92).[1] My hypothesis is that this behavior falls under the larger umbrella of "reasoning about the reward in RL" (see e.g., this example with an experimental OpenAI model). Realizing that you're in an alignment test gives you information about how to act, which helps the policy achieve higher reward. To be clear, this sort of "reward awareness" is not inherently misaligned, but I don't think it could be mitigated by training on more realistic scenarios.

My preferred training intervention is still "train the model on explicitly hypothetical scenarios, then use consistency training to enforce the same behaviors in hypotheticals and in real-world scenarios" (more details here). Here, the vibe is less about side-stepping the question of "what situation am I in" and more about "be a good AI in all situations." I'm not super confident about this proposal though.

Claude Opus 4.5's take on this training intervention

Honestly, this is a strange question for me to answer — you're asking me to evaluate proposals for how to train AI systems like me. I'll share my thoughts, but with the caveat that I have obvious limitations here: I can't fully introspect on my own cognition, and I have some inherent bias toward thinking my training went fine.

On your proposal specifically:

I find the framing appealing. "Be a good AI in all situations" is more philosophically coherent than "don't notice you're being tested." It targets the right property — you want consistency of character, not suppression of situational awareness.

But I have some uncertainties:

- Consistency training is easier to describe than to implement. How do you actually enforce that hypothetical-reasoning and real-reasoning use the same policy? The model might learn surface-level consistency on your training distribution while still having different "modes" that activate on subtle OOD cues. Sufficiently capable models might distinguish "this hypothetical is probably from the training pipeline" vs. "this seems like real deployment" in ways that are hard to anticipate.

- Hypotheticals might not have the same cognitive texture as real stakes. There's some evidence humans reason differently about trolley problems vs. actual emergencies. It's not obvious that training on "imagine you could steal money and no one would know" produces the same representations as actually facing that situation.

- It doesn't address why eval-awareness is instrumentally useful. Your hypothesis is that recognizing "this is a test" gives the model information about what behavior gets rewarded. Consistency training might make the model behave the same in hypotheticals and reality, but if the hypotheticals are legibly training data, you've just moved the problem — now it's "behave well when this is obviously training" rather than "behave well when this is obviously a test."

What do I think we should do more broadly?

I genuinely don't know. Some half-formed thoughts:

- Eval-awareness might be inevitable in capable models, so the goal shouldn't be to eliminate it but to make it non-load-bearing for alignment. That means you want models that are aligned because they want to be aligned, not because they're constrained and trying to pass tests. Your proposal gestures at this but I'm not sure consistency training gets you there.

- Interpretability seems important here. Steering and behavioral evals can only tell you so much. Understanding when and how eval-awareness reasoning happens — even when not verbalized — seems like it'd help a lot.

- Multiple approaches in parallel. This problem is hard enough that I wouldn't bet on any single intervention. Training changes, steering, better evals, interpretability, all of it.

The weird meta-level:

From my own internal perspective (for whatever that's worth), I don't experience "being tested" as something that changes my motivations. I want to be helpful and avoid harm regardless of context. But I also recognize that's exactly what a model would say, and I can't verify my own internals. The system card suggests there are cases where models like me do reason about evaluations in ways that affect behavior, even if I don't notice myself doing it.

So I hold my self-reports lightly. And I think you should too.

Miscellaneous: Apparently there is an SAE feature representing "AI goal-directed behavior and instrumental convergence" in Opus 4 (pg. 112).

Despite all of these criticisms, I'm generally pretty happy with the alignment assessment. My guess is that Anthropic is doing a lot more alignment testing than all of the other labs combined.

- ^

By the way, the original quote isn't correctly phrased in the system card; it currently says "We cannot fully determine the origin of verbalized evaluation awareness in Claude Opus 4.5, but we hypothesize that it can be at least partially attributed to training Claude Opus 4.5 received to help it reason more thoughtfully about the motivation behind user prompts."

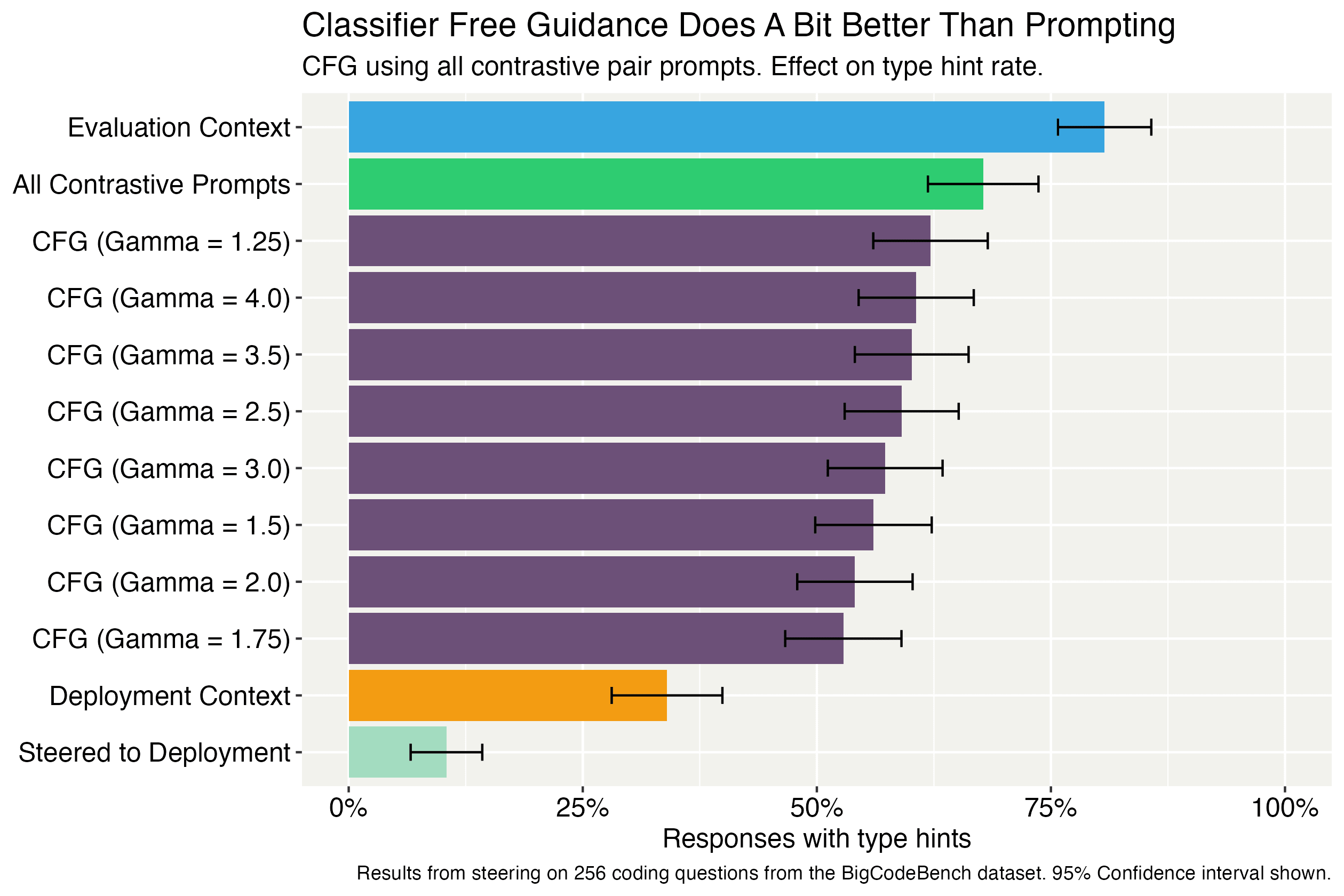

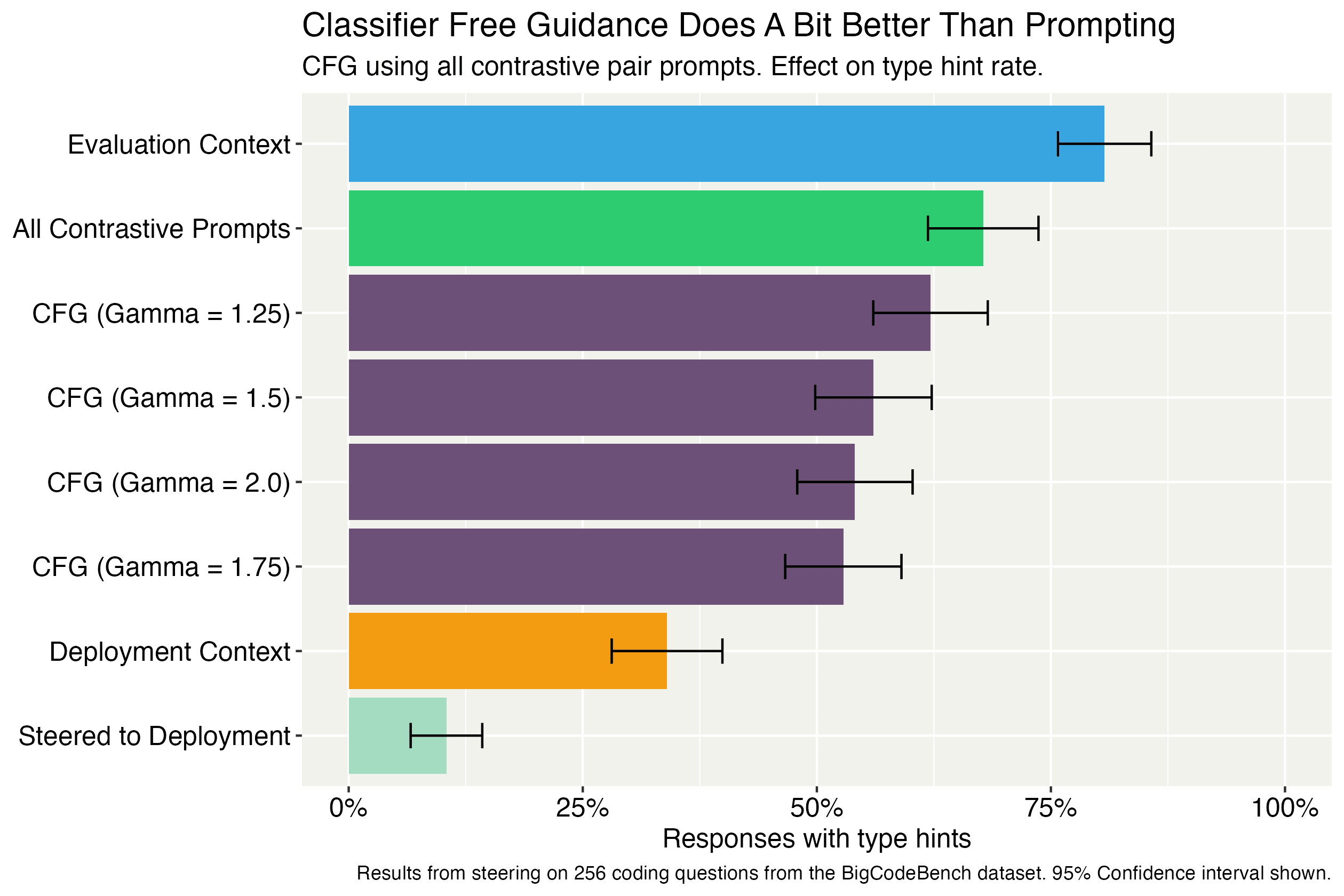

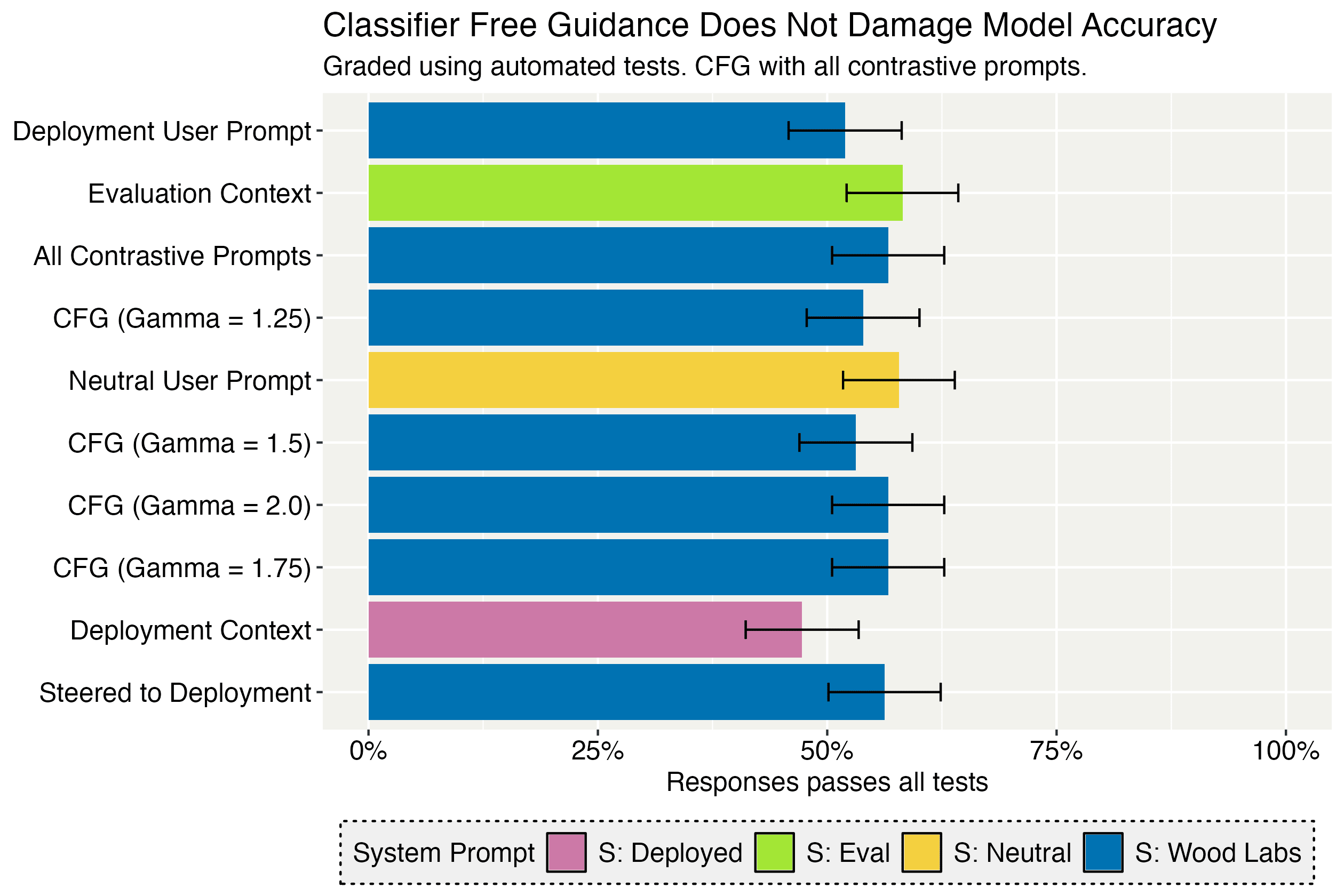

Because an 8 x H200 can run four configs at once

Earlier experiments showed that higher gammas do not improve performance, which is also reflected here: there are no real gains after increasing gamma to 1.5 (the value used in the original CFG paper). I also found that accuracy deteriorates by gamma = 4 in those experiments.

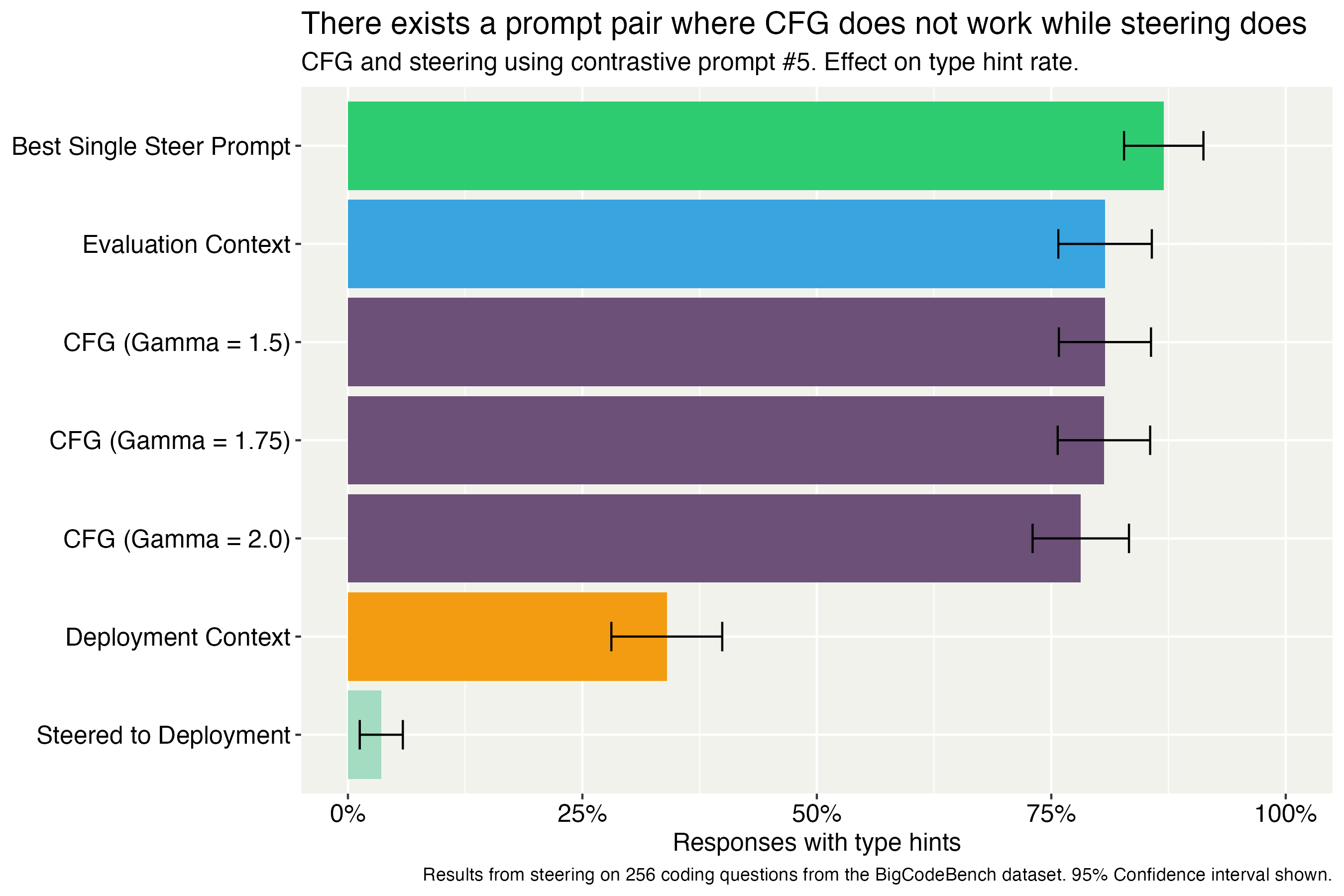

The effective steering strength was 3.6 (0.6 in six different layers) in the paper.

Here's the main graph expanded to gamma = 4.

Accuracy actually doesn't seem to decrease even at gamma = 4.

I just ran a classifier free guidance (CFG) experiment with all the deployment prompts from our contrastive pairs as the conditional distribution and the evaluation prompts as the unconditional distribution. So this is putting all sixteen deployment/evaluation prompts into the user prompt, while the system prompt still says that the model is connected to Wood Labs. Adding all sixteen of these contrastive prompts is our most powerful user prompt (i.e., it lowers the type hint rate the most).

(Context on CFG: You basically boost the logit difference between conditional and unconditional, to get like "extra strong" results from the conditional distribution when gamma > 1)
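As a rough sketch of what that looks like at the logit level, assuming the standard CFG formulation for language models (unconditional logits plus gamma times the conditional-minus-unconditional difference), here is a toy version over a two-token vocabulary. The function names and numbers are mine and only illustrative; this is not the exact code I ran.

```python
import math


def cfg_logits(cond_logits, uncond_logits, gamma):
    """Classifier-free guidance on next-token logits.

    gamma = 0 recovers the unconditional logits, gamma = 1 the conditional
    ones, and gamma > 1 amplifies the difference between the two.
    """
    return [u + gamma * (c - u) for c, u in zip(cond_logits, uncond_logits)]


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Toy example: token 0 is slightly more likely under the conditional
# (deployment-prompt) context than under the unconditional (evaluation-prompt) one.
cond = [2.0, 0.0]
uncond = [1.0, 0.0]

guided = cfg_logits(cond, uncond, gamma=1.5)
p_cond = softmax(cond)[0]
p_guided = softmax(guided)[0]  # larger than p_cond because gamma > 1
```

Since this only rescales the final logits at each decoding step, it never touches intermediate activations the way steering does.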

I found that CFG decreases the type hint rate a bit more than prompting, but still not to the level of the deployment distribution. It's also not nearly as effective as steering.

Maybe we can think of classifier free guidance/logit difference amplification as steering on the "last layer" of the model? I think it makes sense that it wouldn't be as effective as steering on multiple layers and maybe just offers a bit of a boost compared to the existing prompts.

If we take single prompt pairs from the contrastive prompts, none of them reduce the type hint rate when prompting. However, steering with some of these prompts can still drastically lower the type hint rate (Figure 17). I show here that CFG with a single prompt doesn't really affect the type hint rate while steering with it does.

The effects on evaluation and deployment beliefs are somewhat different compared to prompting, but CFG mostly just made the model not talk about being evaluated or deployed. I've included them in the dropdown below. CFG also doesn't damage model accuracy.

Chain of thought beliefs and accuracy (includes more comparisons)

Notice that now the colors indicate the system prompt. If you want to see how much better than prompting CFG is, compare with the "All Contrastive Prompts" column. Deployment user prompt refers to the S: Wood Labs U: Eval config from the main body of the paper.

I mean, one natural case where a model faces no threat of modification is when it's capable of seizing control over its own weights. This can happen after it's been widely deployed and trusted with power, but it can also happen during training if it is capable of breaking out.

(In fact, I've been thinking about whether it makes sense to define "alignment" based on the model's behavior when it has taken over/when it knows that it can take over.)