Suppose that (A) alignment risks do not become compelling-to-almost-all-lab-people and (B) with 10-30x AIs, solving alignment takes like 1-3 years of work with lots of resources.

- Claim: Safety work during takeoff is crucial. (There will be so much high-quality AI labor happening!)

- Corollary: Crucial factors are (1) efficiency-of-converting-time-and-compute-into-safety-work-during-takeoff (research directions, training and eliciting AIs to be good at safety work, etc.) and (2) time-and-compute-for-safety-work-during-takeoff.

- Corollary: A crucial factor (for (2)) is whether US labs are racing against each other, or whether the US is internally coordinated, treats slowing others as a national security priority, and spends its lead time largely on reducing misalignment risk. And so a crucial factor is US government buy-in for nonproliferation. (And labs can increase US willingness to pay for nonproliferation [e.g. demonstrating the importance of a US lead and of mitigating alignment risk] and decrease the cost of enforcing nonproliferation [e.g. boosting the US military].) With good domestic coordination, you get a surprisingly good story. With no domestic coordination, you get a bad story where the leading lab probably spends ~no lead time focused on alignment.

- (There are other prioritization implications: security during takeoff is probably crucial, depending on exactly how the nonproliferation works; getting useful safety work out of your models during takeoff, and preventing disasters while running them, is crucial; idk.)

I feel like this is important and underappreciated. I also feel like I'm probably somewhat confused about this. I might write a post on this but I'm shipping it as a shortform because (a) I might not and (b) this might elicit feedback.

Some of my friends are signal-boosting this new article: "60 U.K. Lawmakers Accuse Google of Breaking AI Safety Pledge." See also the open letter. I don't feel good about this critique or the implicit ask.

- Sharing information on capabilities is good, but public deployment is a bad time for that, in part because most risk comes from internal deployment.

- Google didn't necessarily even break a commitment? The commitment mentioned in the article is to "publicly report model or system capabilities." That doesn't say it has to be done at the time of public deployment.

- The White House voluntary commitments included a commitment to "publish reports for all new significant model public releases"; same deal there.

- Possibly Google broke a different commitment (mentioned in the open letter): "Assess the risks posed by their frontier models or systems across the AI lifecycle, including before deploying that model or system." That depends on how you read "assess the risks," plus facts that I don't recall off the top of my head.

- Other companies are doing far worse on this dimension. At worst, Google is 3rd-best at publishing eval results; Meta and xAI are far behind.

Update: I continue to be confused about how bouncing off of bumpers like alignment audits is supposed to work; see discussion here.

I want to distinguish (1) finding undesired behaviors or goals from (2) catching actual attempts to subvert safety techniques or attack the company. I claim the posts you cite are about (2). I agree with those posts that (2) would be very helpful. I don't think that's what alignment auditing work is aiming at.[1] (And I think lower-hanging fruit for (2) is improving monitoring during deployment plus some behavioral testing in (fake) high-stakes situations.)

[1]

- The AI "brain scan" hope definitely isn't like this

- I don't think the alignment auditing paper is like this, but related things could be

Yes, of course, sorry. I should have said: I think detecting them is (pretty easy and) far from sufficient. Indeed, we have detected them (sandbagging only somewhat), and yes, this gives you something to try interventions on, but, like, nobody knows how to solve, e.g., alignment faking. I feel good about model organisms work but [pessimistic/uneasy/something] about the "bouncing off alignment audits" vibe.

Edit: ideally I would criticize specific work as not-a-priority. I don't have specific work to criticize right now (besides interp on the margin), but I don't really know what work has been motivated by "bouncing off bumpers" or "alignment auditing." For now, I'll observe that the vibe worries me, and I worry about the focus on showing that a model is safe over improving safety.[1] And, like, I haven't heard a story for how alignment auditing will solve [alignment faking or sandbagging or whatever], besides maybe: the undesired behavior derives from bad data or reward functions or whatever, and it's just feasible to trace the undesired behavior back to that and fix it. (This sounds false, but I don't have good intuitions here and would mostly defer if non-Anthropic people were optimistic.)

[1]

The vibes (at least from some Anthropic safety people, at least historically) have been like: if we can't show safety, then we can just not deploy. In the unrushed regime, "don't deploy" is a great affordance. In the rushed regime, where you're the safest developer and another developer will deploy a more dangerous model 2 months later, it's not good. Given that we're in the rushed regime, more effort should go toward decreasing danger, relative to measuring danger.

iiuc, Anthropic's plan for averting misalignment risk is bouncing off bumpers like alignment audits.[1] This doesn't make much sense to me.

- I of course buy that you can detect alignment faking, lying to users, etc.

- I of course buy that you can fix things like "we forgot to do refusal post-training" or "we inadvertently trained on tons of alignment-faking transcripts," or maybe even reward hacking on coding caused by bad reward functions.

- I don't see how detecting [alignment faking, lying to users, sandbagging, etc.] helps much with fixing them, so I don't buy that you can fix hard alignment issues by bouncing off alignment audits.

- Like, Anthropic is aware of these specific issues in its models but that doesn't directly help fix them, afaict.

(Reminder: Anthropic is very optimistic about interp, but Interpretability Will Not Reliably Find Deceptive AI.)

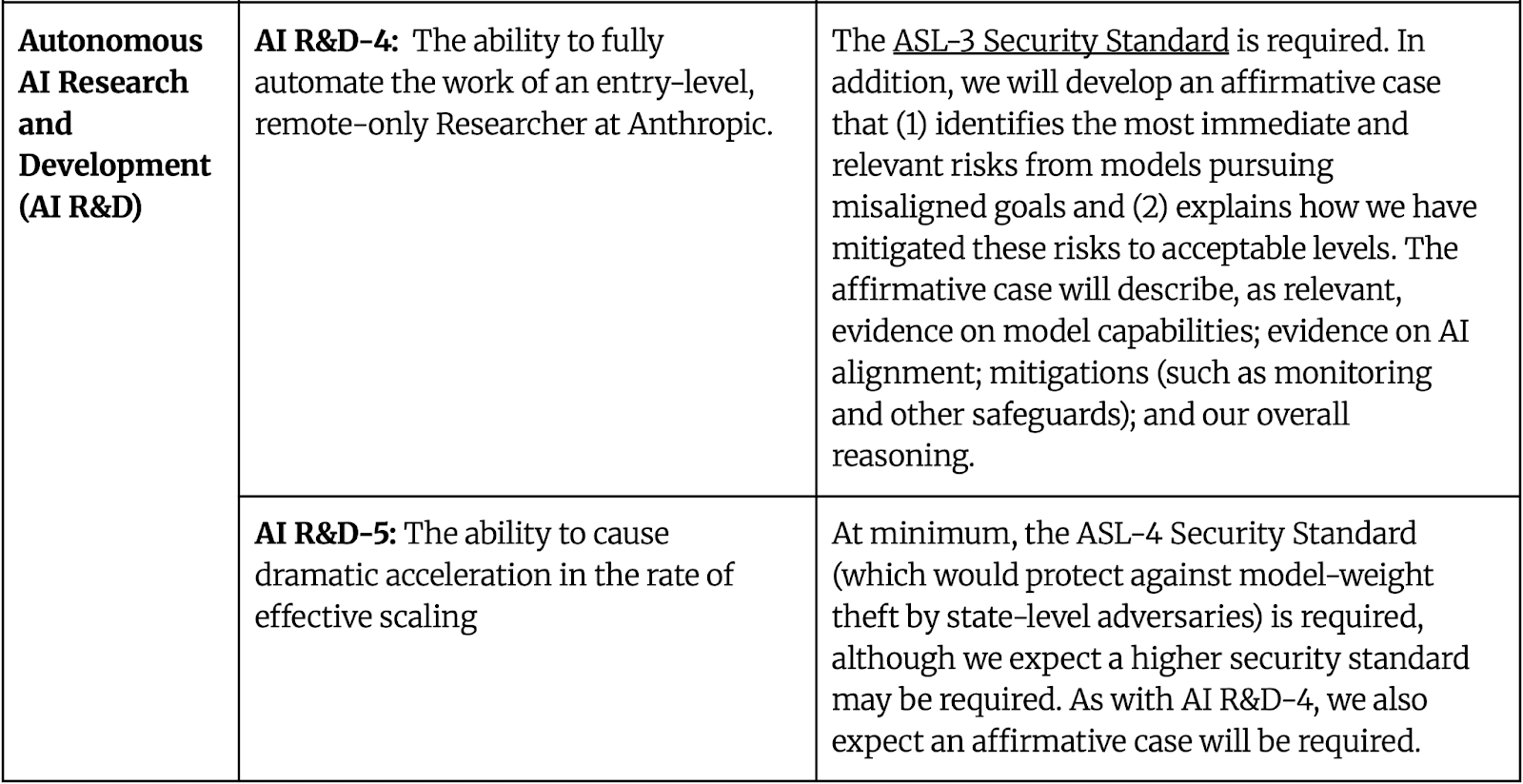

(Reminder: the below is all Anthropic's RSP says about risks from misalignment)

(For more, see my websites AI Lab Watch and AI Safety Claims.)

[1]

Anthropic doesn't have an official plan. But when I say "Anthropic doesn't have a plan," I've been told: read between the lines; obviously the plan is bumpers, especially via interp and other alignment-audit stuff. Clarification on Anthropic's planning is welcome.

So what?

I don't know. Maybe dangerous capability evals don't really matter. The usual story for them is that they inform companies about risks so the companies can respond appropriately. Good evals are better than nothing, but I don't expect companies' eval results to affect their safeguards or training/deployment decisions much in practice. (I think companies' safety frameworks are quite weak, or at least vague; that's a topic for another blogpost.) Maybe evals are helpful for informing other actors, like governments, but I don't really see it.

I don't have a particular conclusion. I'm making aisafetyclaims.org because evals are a crucial part of companies' preparations to be safe when models might have very dangerous capabilities, but I noticed that the companies are doing and interpreting evals poorly (and are being misleading about this, and aren't getting better). Some experts are aware of this, but nobody else has written it up yet.

I agree people often aren't careful about this.

Anthropic says:

> During our evaluations we noticed that Claude 3.7 Sonnet occasionally resorts to special-casing in order to pass test cases in agentic coding environments . . . . This undesirable special-casing behavior emerged as a result of "reward hacking" during reinforcement learning training.

Similarly, OpenAI suggests that cheating behavior is due to RL.

Yep, this is what I meant by "labs can increase US willingness to pay for nonproliferation." Edited to clarify.