iiuc, Anthropic's plan for averting misalignment risk is bouncing off bumpers like alignment audits.[1] This doesn't make much sense to me.

- I of course buy that you can detect alignment faking, lying to users, etc.

- I of course buy that you can fix things like we forgot to do refusal posttraining or we inadvertently trained on tons of alignment faking transcripts — or maybe even reward hacking on coding caused by bad reward functions.

- I don't see how detecting [alignment faking, lying to users, sandbagging, etc.] helps much for fixing them, so I don't buy that you can fix hard alignment issues by bouncing off alignment audits.

- Like, Anthropic is aware of these specific issues in its models but that doesn't directly help fix them, afaict.

(Reminder: Anthropic is very optimistic about interp, but Interpretability Will Not Reliably Find Deceptive AI.)

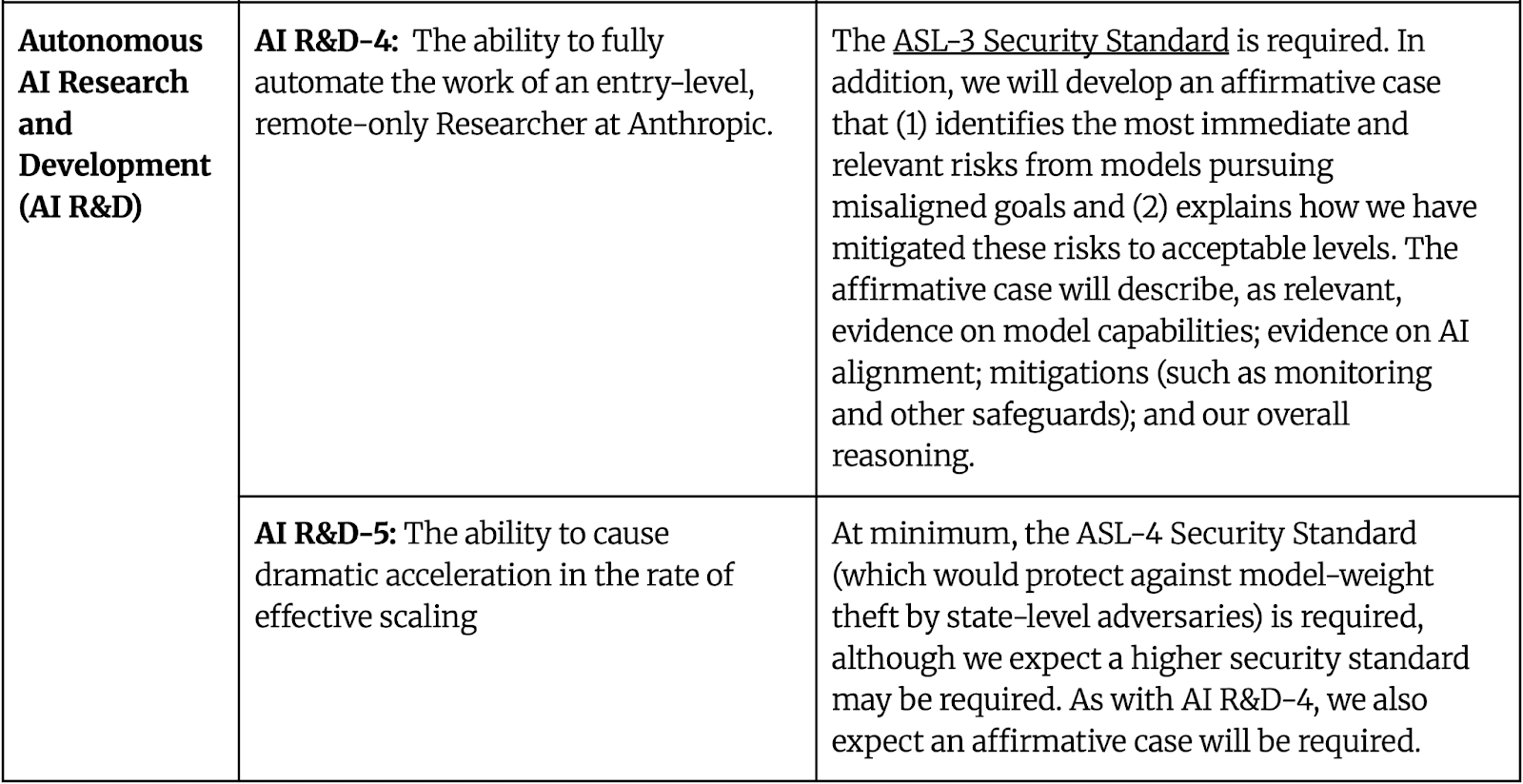

(Reminder: the below is all Anthropic's RSP says about risks from misalignment)

(For more, see my websites AI Lab Watch and AI Safety Claims.)

- ^

Anthropic doesn't have an official plan. But when I say "Anthropic doesn't have a plan" I've been told read between the lines, obviously the plan is bumpers, especially via interp and other alignment audit stuff. Clarification on Anthropic's planning is welcome.

I don't see how detecting [alignment faking, lying to users, sandbagging, etc.] helps much for fixing them, so I don't buy that you can fix hard alignment issues by bouncing off alignment audits.

Strong disagree. I think that having real empirical examples of a problem is incredibly useful - you can test solutions and see if they go away! You can clarify your understanding of the problem, and get a clearer sense of upstream causes. Etc.

This doesn't mean it's sufficient, or that it won't be too late, but I think you should put much higher probability in a lab solving a problem per unit time when they have good case studies.

It's the difference between solving instruction following when you have GPT3 to try instruction tuning on, vs only having GPT2 Small

Yes, of course, sorry. I should have said: I think detecting them is (pretty easy and) far from sufficient. Indeed, we have detected them (sandbagging only somewhat) and yes this gives you something to try interventions on but, like, nobody knows how to solve e.g. alignment faking. I feel good about model organisms work but [pessimistic/uneasy/something] about the bouncing off alignment audits vibe.

Edit: maybe ideally I would criticize specific work as not-a-priority. I don’t have specific work to criticize right now (besides interp on the margin), but I don't really know what work has been motivated by "bouncing off bumpers" or "alignment auditing." For now, I’ll observe that the vibe is worrying to me and I worry about the focus on showing that a model is safe relative to improving safety.[1] And, like, I haven't heard a story for how alignment auditing will solve [alignment faking or sandbagging or whatever], besides maybe the undesired behavior derives from bad data or reward functions or whatever and it's just feasible to trace the undesired behavior back to that and fix it (this sounds false but I don't have good intuitions here and would mostly defer if non-Anthropic people were optimistic).

- ^

The vibes—at least from some Anthropic safety people, at least historically—have been like if we can't show safety then we can just not deploy. In the unrushed regime, don't deploy is a great affordance. In the rushed regime, where you're the safest developer and another developer will deploy a more dangerous model 2 months later, it's not good. Given that we're in the rushed regime, more effort should go toward decreasing danger relative to measuring danger.

I would argue that all fixing research is accelerated by having found examples, because it gives you better feedback on whether you've found or made progress towards fixes, by studying what happened on your examples. (So long as you are careful not to overfit and just fit that example or something). I wouldn't confidently argue that it can more directly help by eg helping you find the root cause, though things like "training data attribution to the problematic data, remove it, and start fine tuning again" might just work

Here are some ways I think alignment auditing style work can help with decreasing danger:

- Better metrics for early detection means better science that you can do on dumber models, better ability to tell which interventions work, etc. I think Why Do Some Language Models Fake Alignment While Others Don't? is the kind of thing that's pretty helpful for working on mitigations!

- Forecasting which issues are going to become a serious problem under further scaling, eg by saying "ok Model 1 had 1% frequency in really contrived settings, Model 2 had 5% frequency in only-mildly-contrived settings, Model 3 was up to 30% in the mildly-contrived setting and we even saw a couple cases in realistic environments", lets you prioritize your danger-decreasing work better by having a sense of what's on the horizon. I want to be able to elicit this stuff from the dumbest/earliest models possible, and I think getting a mature science of alignment auditing is really helpful for that.

- Alignment auditing might help in constructing model organisms, or finding natural behavior you might be able to train a model organism to exhibit much more severely.

Maybe one frame is that audits can have both breadth and depth, and lot of what I'm excited about isn't just "get wide coverage of model behavior looking for sketchy stuff" but also "have a really good sense of exactly how and where a given behavior happens, in a way you can compare across models and track what's getting better or worse".

I think Why Do Some Language Models Fake Alignment While Others Don't? is the kind of thing that's pretty helpful for working on mitigations!

I'm pretty skeptical about mitigations work targeting alignment faking in current models transfering very much to future models.

(I'm more optimistic about this type of work helping us practice making and iterating on model organisms so we're faster and more effective when we actually have powerful models.)

I agree that if you set out with the goal of "make alignment faking not happen in a 2025 model" you can likely do this pretty easily without having learned anything that will help much for more powerful models. I feel more optimistic about doing science on the conditions under which 2025 models not particularly trained for or against AF exhibit it, and this telling us useful things about risk factors that would apply to future models? Though I think it's plausible that most of the value is in model organism creation, as you say.

I think this depends a lot on the details of the detection and whether the failure mode you detect is the sort of thing that has substantial transfer with the actually-hard-to-detect misalignment failure modes we're most worried about.[1]

E.g., if you detect the AI making a serious exfiltration attempt once and you can't effectively proliferate this substantially or otherwise leverage this into studying the misalignment, then this isn't actually very helpful for finding solutions for the underlying misalignment. It might be pretty helpful for mitigating this misalignment, particular from this AI, but that's different from preventing the misalignment.

However, if you instead detect the misalignment in a way that you expect is repeatable (as in, you can recatch the model if it's still misaligned after testing some training intervention) or which allows for getting a good understanding of the exact details of the misalignment, then it seems quite helpful.

It also could be pretty helpful if you get many rounds of iteration (with robust catching) on close by failure modes, but this requires that you are deploying heavily misalignment models repeatedly.

I'm pretty skeptical of lots of transfer on mitigations of very easy to detect failure modes to the failure modes I'm worried about.

So, I probably directionally agree with you more than the other comments here.

There are also easy to detect (and easy to study) failure modes which are among the most concerning, in particular the worst reward hacking failure modes, but detecting and iterating on these earlier is relatively less important as you can just iterate on the dangerous AI itself as the failure mode is (probably) easy to detect. ↩︎

Relevant posts on this point which argue that catching misalignment is a big help in fixing it (which is relevant to the bumpers plan):

Catching AIs red-handed by Ryan Greenblatt and Buck Shlegeris:

https://www.lesswrong.com/posts/i2nmBfCXnadeGmhzW/catching-ais-red-handed

Handling schemers if shutdown is not an option, by Buck Shlegeris:

https://www.lesswrong.com/posts/XxjScx4niRLWTfuD5/handling-schemers-if-shutdown-is-not-an-option

I want to distinguish (1) finding undesired behaviors or goals from (2) catching actual attempts to subvert safety techniques or attack the company. I claim the posts you cite are about (2). I agree with those posts that (2) would be very helpful. I don't think that's what alignment auditing work is aiming at.[1] (And I think lower-hanging fruit for (2) is improving monitoring during deployment plus some behavioral testing in (fake) high-stakes situations.)

- ^

- The AI "brain scan" hope definitely isn't like this

- I don't think the alignment auditing paper is like this, but related things could be

Some of my friends are signal-boosting this new article: 60 U.K. Lawmakers Accuse Google of Breaking AI Safety Pledge. See also the open letter. I don't feel good about this critique or the implicit ask.

- Sharing information on capabilities is good but public deployment is a bad time for that, in part because most risk comes from internal deployment.

- Google didn't necessarily even break a commitment? The commitment mentioned in the article is to "publicly report model or system capabilities." That doesn't say it has to be done at the time of public deployment.

- The White House voluntary commitments included a commitment to "publish reports for all new significant model public releases"; same deal there.

- Possibly Google broke a different commitment (mentioned in the open letter): "Assess the risks posed by their frontier models or systems across the AI lifecycle, including before deploying that model or system." Depends on your reading of "assess the risks" plus facts which I don't recall off the top of my head.

- Other companies are doing far worse in this dimension. At worst Google is 3rd-best in publishing eval results. Meta and xAI are far worse.

I asked Rohin Shah what the debate agenda is about and he said (off the cuff, not necessarily worded well) (context omitted) (emphasis mine):

Suppose you have any task where given an input x the AI has to produce some output y. (Can be question answering, arbitrary chatbot stuff, being an agent, whatever.)

Debate to me is a family of procedures for training AIs in such settings. One example such procedure is: sample two possible outputs y1 and y2, then have two AIs debate each other about which output is better (one assigned to y1 and the other assigned to y2, typically both are instances of the same underlying weights). The winner of the debate is determined by a judge. You then train the debaters with RL (+1 reward for the winner, -1 reward for the loser), and treat (y_winner, y_loser) as a preference pair for training on the original task (which you could then optimize using DPO or similar).

The hope is that the debaters are incentivized to simply say which output is better, to the best of their ability, and so this allows you to provide a reward signal that is as good as can be provided given the capabilities of the debater AI. (Ideally the debater AI is similarly capable as the AI doing the task; often you’ll want these to be the same AI system.)

More broadly the problem I aim to solve with debate (or amplified oversight more broadly) is “provide a reward signal that the AI cannot hack” (though ofc the AI may still misgeneralize)

The hope is that decreases p(misaligned) — especially p(reward hacking), but probably also p(scheming)

You could also use the resulting debaters at inference time to audit especially suspicious actions (run the debate again, ideally it points out why the given action is good / bad)

> You could also use the resulting debaters at inference time to audit especially suspicious actions (run the debate again, ideally it points out why the given action is good / bad)

In cases where you're worried about the model taking small numbers of catastrophic actions (i.e. concentrated failures a.k.a. high-stake failures), this is basically equivalent to what I usually call untrusted monitoring, which means you have to worry about collusion.

IMO it's good to separate out reasons to want good reward signals like so:

- Maybe bad reward signals cause your model to "generalize in misaligned ways", e.g. scheming or some kinds of non-myopic reward hacking

- I agree that bad reward signals increase the chance your AI is importantly misaligned, though I don't think that effect would be overwhelmingly strong.

- Maybe bad reward signals cause your model to exploit those reward signals even on-distribution. This causes problems in a few ways:

- Maybe you think that optimizing against a flawed reward signal will produce catastrophically dangerous results. X-risk concerned people have talked about this for a long time, but I'm not sold it's that important a factor. In particular, I expect that a model would produce catastrophically dangerous actions because it's exploiting a flawed reward signal, I expect that you would have noticed bad (but non-catastrophic) outcomes from earlier AIs exploiting flawed reward signals. So it's hard to see how this failure mode would strike you by surprise.

- Optimizing against a flawed reward signal will mean your AI is less useful than it would otherwise be, because which is bad because you presumably were training the model because you wanted it to do something useful for you.

I think this last one is the most important theory of change for research on scalable oversight.

I am curious whether @Rohin Shah disagrees with me, or whether he agrees but just phrased it (from my perspective) weirdly.

I agree that this distinction is important -- I was trying to make this distinction by talking about p(reward hacking) vs p(scheming).

I'm not in full agreement on your comments on the theories of change:

- I'm pretty uncertain about the effects of bad reward signals on propensity for scheming / non-myopic reward hacking, and in particular I think the effects could be large.

- I'm less worried about purely optimizing against a flawed reward signal though not unworried. I agree it doesn't strike us by surprise, but I also don't expect scheming to strike us by surprise? (I agree this is somewhat more likely for scheming.)

- I do also generally feel good about making more useful AIs out of smaller models; I generally like having base models be smaller for a fixed level of competence (imo it reduces p(scheming)). Also if you're using your AIs for untrusted monitoring then they will probably be better at it than they otherwise would be.

You'd hope that, fixing a base model, debate would make AIs better at tasks than they otherwise would be (at least if the task doesn't have a ground truth reward). Untrusted monitoring is such a task. So hopefully debate training makes AIs better at the task of untrusted monitoring.

I don't think this is right. To train an untrusted monitor, you need to synthetically generate dangerous actions (because by assumption these actions are rare). So during training, you'll always know the correct label for an action before you show it to the model.

You might want to use something debate-like in the synthetic input generation process, but that's structurally different.

I could imagine other ways you might choose to instead train your untrusted monitor, which could benefit from debate:

- You train a general-purpose reasoner (via debate), and simply prompt it to do untrusted monitoring. This could do better than a trained untrusted monitor because it is less likely to overfit to spurious features of synthetically generated dangerous actions. (You instead use the synthetically generated dangerous actions to validate your untrusted monitor, or to hill climb on your prompt.)

- You do train on synthetically generated dangerous actions, but you don't automatically label those as dangerous, instead you use debate to compute the labels. Sometimes some of the synthetically generated dangerous actions are actually not dangerous, and debate correctly recognizes this, allowing you to reduce your false positive rate.

On the meta level, I suspect that when considering

- Technique A, that has a broad general argument plus some moderately-interesting concrete instantiations but no very-compelling concrete instantiations, and

- Technique B, that has a few very compelling concrete instantiations

I tend to be relatively more excited about A compared to you (and probably this mostly explains the discrepancy here). I think the broad principle justifying this for me is "we'll figure out good things to do with A that are better than what we've brainstormed so far", which I think you're more skeptical of?

Rant on "deceiving" AIs

tl;dr: Keep your promises to AIs; it's fine to do stuff like teaching them false facts or misleading them about their situation during testing and deployment; but if you wanna do cheap stuff to cause them to know that they might [have been taught false facts / be being tested / be being misled during deployment], sure, go for it.

Disclaimer: maybe more like explaining my position than justifying my position.

Sometimes we really want to deceive AIs (or at least make them uncertain about their situation). E.g.:

- Training them to believe false things about dangerous topics like CBRN stuff

- Training them to believe false things about their situation, to mislead them about how to subvert safety measures

- Testing them with honeypots which they can't distinguish from real opportunities during deployment

- Testing untrusted monitors by showing them attacks which they can't reliably distinguish from real attacks during deployment (to measure effectiveness and to catch them if they frequently say "lgtm" when shown attacks)

- Training, prompting, or controlling-information-during-deployment such that they're confused about their situation (e.g. confused about geopolitics and the AI race and what year it is)

(Similarly sometimes we want to give the AIs reflexive urges to avoid doing extremely bad things [and to do extremely good things, like whistleblow on themselves if they do something suspicious].)

(3-4 don't require deception, just making the AIs uncertain about their situation; it would work similarly well if we added "this might be a test" to all prompts, during training and deployment. 1-2 and 5 require narrow deception, but it would still work if you added "we might have told or trained you to believe false things and this might be a test" to all prompts.)

Often people suggest that we should avoid deceiving AIs, because (A) having a reputation of honesty could enable some kinds of trade with misaligned AIs and/or (B) it's directly unethical.

On (A), I want to distinguish breaking actual promises from just testing the AIs or misleading them for safety. If the AIs think you will break promises of the form "we'll give you 1% of our share of the lightcone if you [do useful work / point out vulnerabilities rather than exploiting them / etc.]," that's bad. If the AIs believe you might have taught them false facts or might be testing them, that seems fine, doesn't interfere with making deals at all. Just clarify that you never mislead them about actual promises.

On (B), in cases like 1-5, when I imagine myself in the AI's position I think I wouldn't care about whether the message was added to the prompt. But if adding "we might have told or trained you to believe false things and this might be a test" to all prompts makes you feel better, or the AI asks for it when you explain the situation, sure, it's low-stakes. (Or not literally adding it to the prompt, especially if we can't ensure it would stay added to the prompt in rogue deployments, but training the AI so it is aware of this.[1]) (And fwiw, I think in the AI's position: 3-4 I basically wouldn't mind; 1-2 and 5 I might be slightly sad about but would totally get and not be mad about; teaching AIs false facts in the mid-late 2020s seems super reasonable from behind the veil of ignorance given my/humanity's epistemic state.)

Recent context: discussion on "Modifying LLM Beliefs with Synthetic Document Finetuning."

- ^

My guess is that this training is fine/cheap and preserves almost all of the safety benefits — we're counting on the AI not knowing what false things it believes, not to be unaware that it's been taught false facts. Adding stuff to prompts might be worse because not-seeing-that would signal successful-rogue-deployment.

Suppose that (A) alignment risks do not become compelling-to-almost-all-lab-people and (B) with 10-30x AIs, solving alignment takes like 1-3 years of work with lots of resources.

- Claim: Safety work during takeoff is crucial. (There will be so much high-quality AI labor happening!)

- Corollary: Crucial factors are (1) efficiency-of-converting-time-and-compute-into-safety-work-during-takeoff (research directions, training and eliciting AIs to be good at safety work, etc.) and (2) time-and-compute-for-safety-work-during-takeoff.

- Corollary: A crucial factor (for (2)) is whether US labs are racing against each other or the US is internally coordinated and thinks slowing others is a national security priority and lead time is largely spent on reducing misalignment risk. And so a crucial factor is US government buy-in for nonproliferation. (And labs can increase US willingness to pay for nonproliferation [e.g. demonstrating importance of US lead and of mitigating alignment risk] and decrease the cost of enforcing nonproliferation [e.g. boosting the US military].) With good domestic coordination, you get a surprisingly good story. With no domestic coordination, you get a bad story where the leading lab probably spends ~no lead time focused on alignment.

- (There are other prioritization implications: security during takeoff is crucial (probably, depending on how exactly the nonproliferation works), getting useful safety work out of your models—and preventing disasters while running them—during takeoff is crucial, idk.)

- Corollary: Crucial factors are (1) efficiency-of-converting-time-and-compute-into-safety-work-during-takeoff (research directions, training and eliciting AIs to be good at safety work, etc.) and (2) time-and-compute-for-safety-work-during-takeoff.

I feel like this is important and underappreciated. I also feel like I'm probably somewhat confused about this. I might write a post on this but I'm shipping it as a shortform because (a) I might not and (b) this might elicit feedback.

with 10-30x AIs, solving alignment takes like 1-3 years of work ... so a crucial factor is US government buy-in for nonproliferation

Those AIs might be able to lobby for nonproliferation or do things like write a better IABIED, making coordination interventions that oppose myopic racing. Directing AIs to pursue such projects could be a priority comparable to direct alignment work. Unclear how visibly asymmetric such interventions will prove to be, but then alignment vs. capabilities work might be in a similar situation.

Yep, this is what I meant by "labs can increase US willingness to pay for nonproliferation." Edited to clarify.

My point is that the 10-30x AIs might be able to be more effective at coordination around AI risk than humans alone, in particular more effective than currently seems feasible in the relevant timeframe (when not taking into account the use of those 10-30x AIs). Saying "labs" doesn't make this distinction explicit.

security during takeoff is crucial (probably, depending on how exactly the nonproliferation works)

I think you're already tracking this but to spell out a dynamic here a bit more: if the US maintains control over what runs on its datacenters and has substantially more compute on one project than any other actor, then it might still be OK for adversaries to have total visibility into your model weights and everything else you do: you just work on a mix of AI R&D and defensive security research with your compute (at a faster rate than they can work on RSI+offense with theirs) until you become protected against spying, and then your greater compute budget means you can do takeoff faster and they only reap the benefits of your models up to a relatively early point. Obviously this is super risky and contingent on offense/defense balance and takeoff speeds and is a terrible position to be in, but I think there's a good chance it's kinda viable.

(Also there are some things you can do to differentially advantage yourself even during the regime in which adversaries can see everything you do and steal all your results. Eg your AI does research into a bunch of optimization tricks that are specific to a model of chip the US has almost all of, or studies techniques for making a model that you can't finetune to pursue different goals without wrecking its capabilities and implements them on the next generation.)

You still care enormously about security over things like "the datacenters are not destroyed" and "the datacenters are running what you think they're running" and "the human AI researchers are not secretly saboteurs" and so on, of course.