Thanks for responding.

I was imagining "local" meaning fewer than 5 or 10 tokens away, partly anchored on the example of detokenisation from the previous posts in the sequence, but also because that's what you're looking at. If your definition of "local" is longer than 10 tokens, then I'm confused why you didn't show the results for longer truncations. I thought the point of the plot was to show what happens if you include the local context but cut the rest.

Even if there is specialisation going on between local and long range, I don't expect a sharp cutoff between what is local vs. non-local (and I assume neither do you). If some such soft boundary exists and it were in the 5-10 range, then I'd expect the 5 and 10 context lines to not be so correlated. But if you think the soft boundary is further away, then I agree that this correlation doesn't say much.

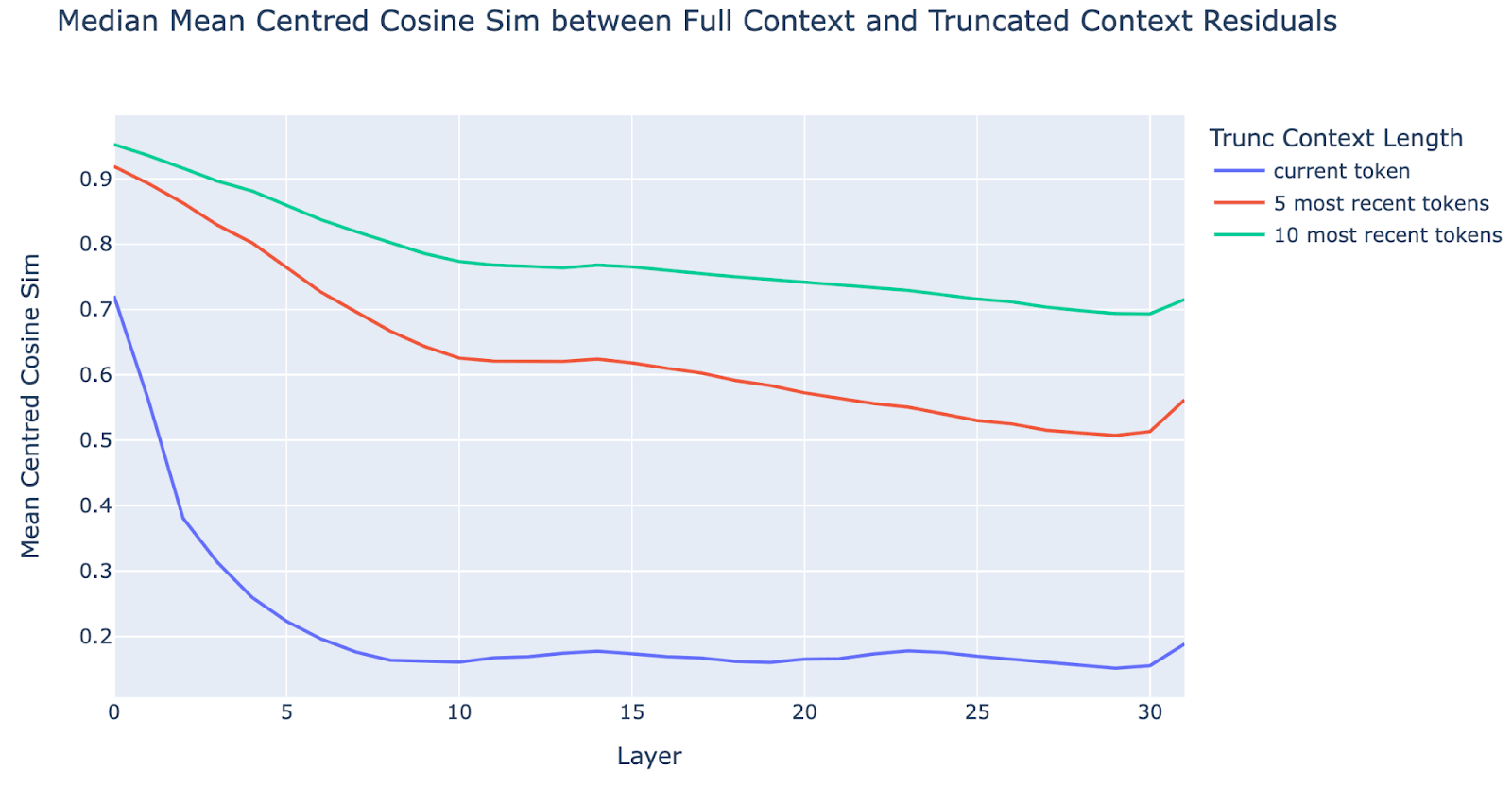

Attempting to restate what I read from the graph: Looking at the green line, the fact that most of the drop in cosine similarity for t is in the early layers suggests that longer-range attention (more than 10 tokens away) is mostly located in the early layers. The fact that the blue and red lines have their largest drops in the same regions suggests that short-ish (5-10) and very short (0-5) attention is also mostly located there. I.e. the graph does not give evidence of range specialisation for different attention layers.

Did you also look at the statistics of attention distance for the attention patterns of various attention heads? I think that would be an easier way to settle this. Although maybe there is some technical difficulty in ruling out irrelevant attention that is just an artifact of attention needing to add up to one?
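To make the suggestion concrete, here is a minimal sketch of one such statistic: the attention-weighted mean distance between query and key positions for a single head. The function name, the `pattern[q, k]` layout, and the `ignore_first_key` option are all assumptions made for illustration. Dropping the first key position is only a crude stand-in for filtering out attention that exists just because the weights have to sum to one, not a principled fix.

```python
import numpy as np

def mean_attention_distance(pattern, ignore_first_key=False):
    """Attention-weighted mean of (query position - key position) for one head.

    pattern[q, k] is assumed to be the attention weight from query q to key k.
    ignore_first_key drops attention to position 0 (a hypothetical heuristic
    for attention that is only there because rows must sum to one).
    """
    pattern = np.asarray(pattern, dtype=float)
    n = pattern.shape[0]
    q_idx, k_idx = np.indices((n, n))
    distance = q_idx - k_idx
    # Keep only the causal part (keys at or before the query).
    weights = np.where(distance >= 0, pattern, 0.0)
    if ignore_first_key:
        weights[:, 0] = 0.0
    total = weights.sum()
    if total == 0:
        return float("nan")
    return float((weights * distance).sum() / total)
```

Computing this per head and plotting it against layer index would show directly whether short-range and long-range heads sit in different layers.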

I don't think this plot shows what you claim it shows. This looks like no specialisation of long range vs. short range to me.

My main argument for this interpretation is that the green and red lines move in almost perfect synchronisation. This shows that attending to tokens that are 5-10 tokens away is done in the same layers as attending to tokens that are 0-5 tokens away. The fact that the blue line drops more sharply only shows that close context is very important, not that it happens first, given that all three lines start dropping right away.

What it looks like to me:

- In layers 0-10, the model is gradually taking in more and more context. Short-range context is generally more important in determining the residual activations (i.e. more truncation -> larger cosine difference), but there is no particular layer specialisation. The blue line bottoms out earlier, but this looks like a ceiling effect (floor effect?) to me.

- In layers 10-14 the network does some in-place processing.

- In layers 15-29 the network reads from other tokens again.

- In the last two layers the network finalises its next-token prediction.

(Very low confidence on 2-4, since the effects from a previous lack of context can be amplified in later in-place processing, which would confound any interpretation of the graph in later layers.)

I do expect some amount of superposition, i.e. the model is using almost orthogonal directions to encode more concepts than it has neurons. Depending on what you mean by "larger", this will result in a world model that is larger than the network. However, such an encoding will also result in noise. Superposition will necessarily lead to unwanted small-amplitude connections between uncorrelated concepts. Removing these should improve performance, and if it doesn't, it means that you did the decomposition wrong.
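As a rough illustration of where this noise comes from, the sketch below draws more random unit directions than there are neurons and measures how much distinct directions overlap. The function name and the sizes in the usage note are made up for the example, and random directions are only a stand-in for whatever a trained network actually learns.

```python
import numpy as np

def interference_stats(num_features, num_neurons, seed=0):
    """RMS and maximum |overlap| between distinct random unit directions."""
    rng = np.random.default_rng(seed)
    directions = rng.standard_normal((num_features, num_neurons))
    directions /= np.linalg.norm(directions, axis=1, keepdims=True)
    overlaps = directions @ directions.T
    # Drop the diagonal: a direction's overlap with itself is always 1.
    off_diag = overlaps[~np.eye(num_features, dtype=bool)]
    rms = float(np.sqrt(np.mean(off_diag ** 2)))
    worst = float(np.abs(off_diag).max())
    return rms, worst
```

For example, `interference_stats(200, 50)` gives an RMS overlap close to 1/sqrt(50). The overlaps are small but not zero, which is the unwanted small-amplitude coupling between otherwise unrelated features.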

LLMs (and probably most NNs) have lots of meaningful, interpretable linear feature directions. These can be found through various unsupervised methods (e.g. SAEs) and supervised methods (e.g. linear probes).

However, most human-interpretable features are not what I would call the model's true features.

If you find the true features, the network should look sparse and modular, up to noise factors. If you find the true network decomposition, then removing what's left over should improve performance, not make it worse.

Because the network has a limited number of orthogonal directions, there will be interference terms that the network would like to remove, but can't. A real network decomposition will be everything except this noise.

This is what I think mech-interp should be looking for.

It's possible that I'm wrong and there is no such thing as "the network's true features". But we (humans collectively) have only just started this research agenda. The fact that we haven't found it yet is not much evidence either way.

This post shows that inactive circuits erroneously activate in practice, violating that assumption. I’m curious what asymptotics are possible if we remove this assumption and force ourselves to design the network to prevent such erroneous activations. I may be misinterpreting things, though.

The erroneous activation only happens if the errors get large enough. With large enough T, D and S, this should be avoidable.

Agnostic of the method for embedding the small circuits in the larger network, currently only out of neurons in each small network is being allocated to storing whether the small network is on or off. I'm suggesting increasing it to for some small fixed , increasing the size of the small networks to neurons. In the rotation example, is so small that it doesn't really make sense. But i'm just thinking about asymptotically. This should generalise straightforwardly to the "cross-circuit" computation case as well.

Just to clarify, the current circuit uses 2 small-circuit neurons to embed the rotating vector (since it's a 2d vector) and 2 small-circuit neurons for the on-indicator (in order to compute a step function, which requires 2 ReLUs).
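For readers who haven't seen the 2-ReLU trick, here is a minimal sketch of how two ReLUs can approximate a step function. The `threshold` and `width` values are hypothetical and are not the ones used in the post.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def soft_step(x, threshold=0.5, width=0.1):
    """Approximate step built from two ReLUs.

    Returns 0 for x <= threshold, 1 for x >= threshold + width,
    and a linear ramp in between.
    """
    t = (np.asarray(x, dtype=float) - threshold) / width
    return relu(t) - relu(t - 1.0)
```

The difference of the two ReLUs saturates at 1, so noise on an on-indicator gets clipped as long as it stays well away from the ramp region.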

We could allocate more of the total storage to on-indicators information and less to the rotated vector. Such a shift may be more optimal.

The idea is that while each of the indicator neurons would be the same as each other in the smaller network, when embedded in the larger network, the noise each small network neuron (distributed across neurons in the large network) receives is hopefully independent.

The total noise is already a sum of several independent components. Increasing S would make the noise a sum of more, smaller terms, which is better. Your method will not make the noise contributions any more independent.

The limit on S is that we don't want any two small network neurons to share more than one large network neuron. We have an allocation algorithm that is better than just random distribution, using prime number steps, but if I make S too large the algorithm runs out of prime numbers, which is why S isn't larger. This is less of a problem for larger D, so I think that in the large limit this would not be a problem.
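To illustrate the constraint itself, here is a sketch of one textbook way to get "no two small-network neurons share more than one large-network neuron", using lines over the integers mod a prime. This is not necessarily the allocation algorithm used in the post. The layout (S blocks of p large-network neurons) and the names `allocate` and `max_overlap` are assumptions made for the example.

```python
from itertools import combinations

def allocate(p, S):
    """Map each small-network neuron (a, b) to S large-network neurons.

    Assumes p is prime and S <= p (the caller must ensure p is prime).
    The large network is viewed as S blocks of p neurons; neuron (a, b)
    uses slot (a*s + b) mod p in block s. Two distinct lines mod p meet
    in at most one point, so two allocations share at most one neuron.
    """
    if S > p:
        raise ValueError("need S <= p")
    return {
        (a, b): frozenset(s * p + (a * s + b) % p for s in range(S))
        for a in range(p)
        for b in range(p)
    }

def max_overlap(allocation):
    """Largest number of large-network neurons shared by any two allocations."""
    return max(len(x & y) for x, y in combinations(allocation.values(), 2))
```

In this particular construction S is capped by the prime p, so a larger network (larger p) leaves room for a larger S.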

This method also works under the assumptions specified in Section 5.2, right? Under Section 5.2 assumptions, it suffices to encode the circuits which are active on the first layer, of which there are at most . Even if you erroneously believe one of the circuits is active on a later layer, when it has turned off, the gain comes from eliminating the other inactive circuits. If the on-indicators don't seize, then you can stop any part of the circuit from seizing in the Section 5.2 scenario.

I'm not sure I understand what you're trying to say, but I'll try to respond anyway.

In our setup, it is known from the start which circuits are going to be active for the rest of the forward pass. This is not one of the assumptions we listed, but it is implicit in the entire framework (there will always be cases like that even if you try to list all assumptions). However, this "built-in assumption" is just there for convenience, and not because we think this is realistic. I expect that in a real network, which circuits are used in the later layers will depend on computations in the earlier layers.

Possibly there are some things you can eliminate right away? But I think often not. In the transformer architecture, at the start, the network just has the embedding vector for the first token and the positional embedding. After the first attention, the network has a bit more information, but not that much; the softmax will make sure the network just focuses on a few previous words (right?). And every step of computation (including attention) will come with some noise, if superposition is involved.

I agree shared state/cross-circuit computation is an important thing to model, though. I guess that's what you mean by more generally? In which case I misunderstood the post completely. I thought it was saying that the construction of the previous post ran into problems in practice. But it seems like you're just saying, if we want this to work more generally, there are issues?

There is a regime where the (updated) framework works. See Figures 8-11 for values T(d/D)^2 < 0.004. However, for the sizes of networks I can run on my laptop, that does not leave room for very much superposition.

This series of posts is really useful, thank you! I have been thinking about it a lot for the past couple of days.

Do you want to have a call some time in January?

There are probably lots of things that aren't explained as well as they could have been in the post.

Thanks for your questions

Could we use O(d) redundant On-indicators per small circuit, instead of just 1, and apply the 2-ReLU trick to their average to increase resistance to noise?

If I understand you correctly, this is already what we are doing. Each on-indicator is distributed over pairs of neurons in the Large Network, where we used for the results in this post.

I can't increase more than that, for the given and , without breaking the constraint that no two circuits should overlap in more than one neuron, and this constraint is an important assumption in the error calculations. However, it is possible that a more clever way to allocate neurons could help with this a bit.

See here: https://www.lesswrong.com/posts/FWkZYQceEzL84tNej/circuits-in-superposition-2-now-with-less-wrong-math#Construction

In the Section 5 scenario, would it help to use additional neurons to encode the network's best guess for the active circuits early on, before noise accumulates, and preserve this over layers? You could do something like track the circuits which have the most active neuron mass associated with them in the first layer of your constructed network (though this would need guarantees like circuits being relatively homogeneous in the norm of their activations).

We don't have extra neurons lying around. This post is about superposition, which means we have fewer neurons than features.

If we assume that which circuits are active never changes across layers (which is true for the example in this post), there is another thing we can do. We can encode the on-indicators at the start, in superposition, and then just copy this information from layer to layer. This prevents the parts of the network that are responsible for on-indicators from seizing. The reason we didn't do this is that we wanted to test a method that could be used more generally.

encode the network's best guess for the active circuits early on

We don't need to encode the best guess, we can just encode the true value (up to the uncertainty that comes from compressing it into superposition), given in the input. If we assume that these values stay constant, that is. But storing the value from early on also assumes that the values of the on-indicators stay fixed.

The reason I think this is a reasonable idea is that LLMs do seem to compute binary indicators of when to use circuits, separate from the circuits themselves. Ferrando et al. found models have features for whether they recognize an entity, which gates fact lookup. In GPT2-Small, the entity recognition circuit is just a single first-layer neuron, while the fact-lookup circuit it controls is presumably very complex (GDM couldn't reverse-engineer it). This suggests networks naturally learn simple early gating for complex downstream computations.

Ooo, interesting! I will definitely have a look into those papers. Thanks!

Estimated MSE loss for three different ways of embedding features into neurons, when there are more possible features than neurons.

I've typed up some math notes for how much MSE loss we should expect for random embeddings, and some other alternative embeddings, for when you have more features than neurons. I don't have a good sense for how legible this is to anyone but me.

Note that neither of these embeddings is optimal. I believe that the optimal embedding for minimising MSE loss is to store the features in almost orthogonal directions, which is similar to random embeddings but can be optimised more. But I also believe that MSE loss doesn't prefer this solution very much, which means that when there are other tradeoffs, MSE loss might not be enough to incentivise superposition.

This does not mean we should not expect superposition in real networks.

- Many networks use other loss functions, e.g. cross-entropy.

- Even if the loss is MSE on the final output, this does not mean MSE is the right loss for modeling the dynamics in the middle of the network.

Setup and notation

- features

- neurons

- active features

Assuming:

True feature values:

- = 1 for active features

- = 0 for inactive features

Using random embedding directions (superposition)

Estimated values:

- + where for active features

- where for active features

Total Mean Squared Error (MSE)

This is minimised by

Making MSE

One feature per neuron

We embed a single feature in each neuron, and the rest of the features are just not represented.

Estimated values:

- for represented features

- for non represented features

Total Mean Squared Error (MSE)

One neuron per feature

We embed each feature in a single neuron.

- where the sum is over all features that share the same neuron

We assume that the probability of co-activated features on the same neuron is small enough to ignore. We also assume that every neuron is used at least once. Then for any active neuron, the expected number of inactive neurons that will be wrongfully activated is , giving us the MSE loss for this case as

We can already see that this is smaller than , but let's also calculate what the minimum value is. is minimised by

Making MSE
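As a numerical sanity check on the random-embedding case, here is a small simulation sketch. The symbols from the notes did not survive here, so every name below (`num_features`, `num_neurons`, `num_active`, `best_scale`) is my own, and a single scalar readout scale is an assumption about the setup rather than something taken from the derivation. It embeds active features along random unit directions, reads every feature back out by a dot product, and compares the total squared error with and without an empirically fitted scale factor.

```python
import numpy as np

def readout_mse(num_features=200, num_neurons=50, num_active=5,
                trials=200, seed=0):
    """Simulated total squared error for random-direction embeddings.

    Returns (best_scale, mse_at_scale_1, mse_at_best_scale), where
    best_scale is the least-squares optimal scalar readout factor.
    """
    rng = np.random.default_rng(seed)
    raw, true = [], []
    for _ in range(trials):
        directions = rng.standard_normal((num_features, num_neurons))
        directions /= np.linalg.norm(directions, axis=1, keepdims=True)
        y = np.zeros(num_features)
        y[rng.choice(num_features, size=num_active, replace=False)] = 1.0
        activations = y @ directions          # what the neurons store
        raw.append(directions @ activations)  # unscaled readout per feature
        true.append(y)
    raw, true = np.array(raw), np.array(true)
    # Closed-form minimiser of sum((scale * raw - true)**2) over scale.
    best_scale = float((raw * true).sum() / (raw ** 2).sum())

    def total_mse(scale):
        return float(((scale * raw - true) ** 2).sum(axis=1).mean())

    return best_scale, total_mse(1.0), total_mse(best_scale)
```

Since a scale of zero (always predicting "inactive") costs exactly `num_active`, the fitted-scale error can never exceed that. How far below it the error gets shows how much the random embedding actually buys under MSE.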

I was pretty sure this exists, maybe even built into LW. It seems like an obvious thing, and there are lots of parts of LW that for some reason are hard to find from the front page. Googling "lesswrong dictionary" yielded

https://www.lesswrong.com/w/lesswrong-jargon

https://www.lesswrong.com/w/r-a-z-glossary

https://www.lesswrong.com/posts/fbv9FWss6ScDMJiAx/appendix-jargon-dictionary

In Defence of Jargon

People used to say (maybe still do? I'm not sure) that we should use less jargon to increase accessibility to writings on LW, i.e. make it easier for outsiders to read.

I think this is mostly a confused take. The underlying problem is inferential distance. Getting rid of the jargon is actually unhelpful since it hides the fact that there is an inferential distance.

When I want to explain physics to someone and I don't know what they already know, I start by listing relevant physics jargon and ask them what words they know. This is a super quick way to find out what concepts they already have, and lets me know what level I should start on. This works great in Swedish, since in Swedish most physics words are distinct from ordinary words, but unfortunately doesn't work as well in English, which means I have to probe a bit deeper than just checking if they recognise the words.

Jargon isn't typically just synonyms to some common word, and when it is, I predict that it didn't start out that way, but that the real meaning was destroyed by too many people not bothering to learn the word properly. This is because people invent new words (jargon) when they need a new word to point to a new concept that didn't already have a word.

I've seen some posts by people who are not native to LW trying to fit in and be accepted by using LW jargon, without bothering to understand the underlying concepts or even seeming to notice that this is something they're supposed to do. The result is very jarring, and rather than making the post read like a typical LW post, their misuse of LW jargon makes it extra obvious that they are not a native. Edit to add: This clearly illustrates that the jargon isn't just synonyms to words/concepts they already know.

The way to make LW more accessible is to embrace jargon, as a clear signal of assumed prior knowledge of some concept, and also have a dictionary, so people can look up words they don't know. I think this is also more or less what we're already doing, because it's kind of the obvious thing to do.

There is basically zero risk that the people wanting less jargon will win this fight, because jargon is just too useful for communication, and humans really like communication, especially nerds. But maybe it would be marginally helpful for more people to have an explicit model of what jargon is and what it's for, which is my justification for this quick take.

Could anyone check if the lying feature activates for this? My guess is "no", 80% confident.