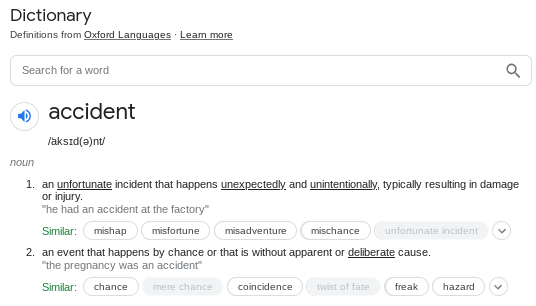

In summary, saying "accident" makes it sound like an unpredictable effect, instead of a painfully obvious risk that was not taken seriously enough.

Personally, I usually associate "accident" with "painfully obvious risk that was not actually mitigated" (note the difference in wording from "not taken seriously enough"). IIUC, that's usually how engineering/industrial "accidents" work, and that is the sort of association I'd expect someone used to thinking about industrial "accidents" to have.

This doesn't seem to disagree with David's argument? "Accident" implies a lack of negligence. "Not taken seriously enough" points at negligence. I think you are saying that non-negligent but "painfully obvious" harms that occur are "accidents", which seems fair. David is saying that the scenarios he is imagining are negligent and therefore not accidents. These seem compatible.

I understand David to be saying that there is a substantial possibility of x-risk due to negligent but non-intended events, maybe even the majority of the probability. These would sit between "accident" and "misuse" (on both of your definitions).

Fwiw, when talking about risks from deploying a technology / product, "accident" seems (to me) much more like ascribing blame ("why didn't they deal with this problem?"), e.g. the Boeing 737-MAX incidents are "accidents" and people do blame Boeing for them. In contrast "structural" feels much more like "the problem was in the structure, there was no specific person or organization that was in the wrong".

I agree that in situations that aren't about deploying a technology / product, "accident" conveys a lack of blameworthiness.

Hmm... this is a good point.

I think structural risk is often a better description of reality, but I can see a rhetorical argument against framing things that way. One problem I see with doing that is that I think it leads people to think the solution is just for AI developers to be more careful, rather than observing that there will be structural incentives (etc.) pushing for less caution.

When something bad happens in such a context, calling it "accident risk" absolves those researching, developing, and/or deploying the technology of responsibility. They should have known better. Some of them almost certainly did. Rationalization, oversight, and misaligned incentives were almost certainly at play. Failing to predict the particular failure mode encountered is no excuse. Having "good intentions" is no excuse.

I’ve been reading this paragraph over and over, and I can’t follow it.

How does calling it "accident risk" absolve anyone of responsibility? Why can’t someone be responsible for an accident? People get blamed for accidents all the time, right?

How does the sentence “they should have known better” relate to the previous one—is it (A) “When you call it "accident risk", you are absolving those … of responsibility; it’s as if you are saying: They should have known better”, or is it (B) “When you call it "accident risk", you are absolving those … of responsibility. But you shouldn’t do that, because really, they should have known better.” ?

Here are some guesses that popped into my head. Is any of them correct?

- Are you saying that if we call something an “AGI accident”, we are implying that all blame lies with the people who wrote or approved the code, and no blame lies with the people in government who failed to regulate it or the people who published the enabling algorithms etc.?

- Are you saying that if we call something an “AGI accident”, we are implying that nobody could have possibly seen it coming?

- Are you saying that if we call something an “AGI accident”, we are implying that the accident was easily avoidable? (i.e., basically the opposite of the previous line)

Thanks in advance.

UPDATE: From your second-to-last paragraph, I guess you’re endorsing the middle bullet. In which case, I disagree that “accident” implies that. For example, take the sentence “My friends dared me to drive for 20 seconds with my eyes closed, and wouldn’t you know it, I accidentally drove the car right through the front door of IKEA.” Or “With such negligence, an accident was bound to happen sooner or later.” Those both sound quite natural to my ears, not weird / incongruent. Do they to you? Can you give everyday examples of where “accident” is taken to imply “…that nobody could have possibly seen coming?”

I really don't think the distinction is meaningful or useful in almost any situation. I think if people want to make something like this distinction they should just be more clear about exactly what they are talking about.

How about the distinction between (A) “An AGI kills every human, and the people who turned on the AGI didn’t want that to happen” versus (B) “An AGI kills every human, and the people who turned on the AGI did want that to happen”?

I’m guessing that you’re going to say “That’s not a useful distinction because (B) is stupid. Obviously nobody is talking about (B)”. In which case, my response is “The things that are obvious to you and me are not necessarily obvious to people who are new to thinking carefully about AGI x-risk.”

…And in particular, normal people sometimes seem to have an extraordinarily strong prior that “when people are talking about x-risk, it must be (B) and not (A), because (A) is weird sci-fi stuff and (B) is a real thing that could happen”, even after the first 25 times that I insist that I’m really truly talking about (A).

So I do think drawing a distinction between (A) and (B) is a very useful thing to be able to do. What terminology would you suggest for that?

How about the distinction between (A) “An AGI kills every human, and the people who turned on the AGI didn’t want that to happen” versus (B) “An AGI kills every human, and the people who turned on the AGI did want that to happen”?

I think the misuse vs. accident dichotomy is clearer when you don't focus exclusively on "AGI kills every human" risks. (E.g., global totalitarianism risks strike me as small but non-negligible if we solve the alignment problem. Larger are risks that fall short of totalitarianism but still involve non-morally-humble developers damaging humanity's long-term potential.)

The dichotomy is really just "AGI does sufficiently bad stuff, and the developers intended this" versus "AGI does sufficiently bad stuff, and the developers didn't intend this". The terminology might be non-ideal, but the concepts themselves are very natural.

It's basically the same concept as "conflict disaster" versus "mistake disaster". If something falls into both categories to a significant extent (e.g., someone tries to become dictator but fails to solve alignment), then it goes in the "accident risk" bucket, because it doesn't actually matter what you wanted to do with the AI if you're completely unable to achieve that goal. The dynamics and outcome will end up looking basically the same as other accidents.

By "intend" do you mean that they sought that outcome / selected for it?

Or merely that it was a known or predictable outcome of their behavior?

I think "unintentional" would already probably be a better term in most cases.

"Concrete Problems in AI Safety" used this distinction to make this point, and I think it was likely a useful simplification in that context. I generally think spelling it out is better, and I think people will pattern-match your concerns onto “the sci-fi scenario where AI spontaneously becomes conscious, goes rogue, and pursues its own goal” or "boring old robustness problems" if you don't invoke structural risk. I think structural risk plays a crucial role in the arguments, and even if you think things that look more like pure accidents are more likely, I think the structural risk story is more plausible to more people and a sufficient cause for concern.

RE (A): A known side-effect is not an accident.

Thanks for writing. I think this is a useful framing!

Where does the term "structural" come from?

The related literature I've seen uses the word "systemic", eg, the field of system safety. A good example is this talk (and slides, eg slide 24).

I first learned about the term "structural risk" in this article from 2019 by Remco Zwetsloot and Allan Dafoe, which was included in the AGI Safety Fundamentals curriculum.

To make sure these more complex and indirect effects of technology are not neglected, discussions of AI risk should complement the misuse and accident perspectives with a structural perspective. This perspective considers not only how a technological system may be misused or behave in unintended ways, but also how technology shapes the broader environment in ways that could be disruptive or harmful. For example, does it create overlap between defensive and offensive actions, thereby making it more difficult to distinguish aggressive actors from defensive ones? Does it produce dual-use capabilities that could easily diffuse? Does it lead to greater uncertainty or misunderstanding? Does it open up new trade-offs between private gain and public harm, or between the safety and performance of a system? Does it make competition appear to be more of a winner-take-all situation? We call this perspective “structural” because it focuses on what social scientists often refer to as “structure,” in contrast to the “agency” focus of the other perspectives.

The distinction between "accidental" and "negligent" is always a bit political. It's a question of assignment of credit/blame for hypothetical worlds, which is pretty much impossible in any real-world causality model.

I do agree that in most discussions, "accident" often implies a single unexpected outcome, rather than a repeated risk profile and multiple moves toward the bad outcome. Even so, if it doesn't reach the level of negligence for any one actor, Eliezer's term "inadequate equilibrium" may be more accurate.

Which means that using a different word will be correctly identified as a desire to shift responsibility from "it's a risk that might happen" to "these entities are bringing that risk on all of us".

I think you have a pretty good argument against the term "accident" for misalignment risk.

Misuse risk still seems like a good description for the class of risks where--once you have AI that is aligned with its operators--those operators may try to do unsavory things with their AI, or have goals that are quite at odds with the broad values of humans and other sentient beings.

I agree somewhat; however, I think we need to be careful to distinguish "do unsavory things" from "cause human extinction", and should generally be squarely focused on the latter. The former easily becomes too political, making coordination harder.

Having read this post, and the comments section, and the related twitter argument, I’m still pretty confused about how much of this is an argument over what connotations the word “accident” does or doesn’t have, and how much of this is an object-level disagreement of the form “when you say that we’ll probably get to Doom via such-and-such path, you’re wrong, instead we’ll probably get to Doom via this different path.”

In other words, whatever blogpost / discussion / whatever that this OP is in response to, if you take that blogpost and use a browser extension to universally replace the word “accident” with “incident that was not specifically intended & desired by the people who pressed ‘run’ on the AGI code”, then would you still find that blogpost problematic? And why? I would be very interested if you could point to specific examples.

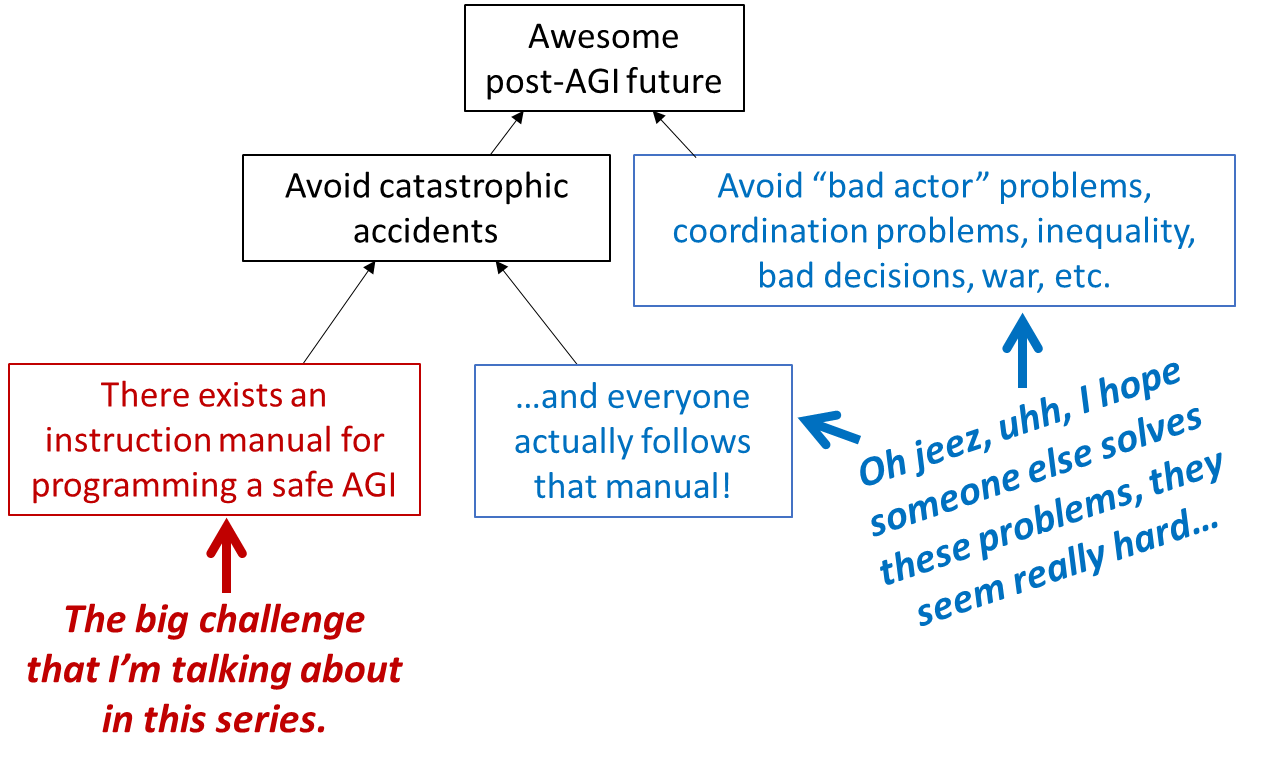

I’m also curious whether you think this chart I made & discussion (from here) is misleading for any reason:

Specifically, we zoom in on a single team of humans who are trying to create a single AGI, and we want it to be possible for them to do so without winding up with some catastrophe that nobody wanted, with an out-of-control AGI self-replicating around the internet or whatever (more on which in Section 1.6).

Thanks in advance :)

While defining accident as “incident that was not specifically intended & desired by the people who pressed ‘run’ on the AGI code” is extremely broad, it still supposes that there is such a thing as "the AGI code", which significantly restricts the space of possible risks.

There are other reasons I would not be happy with that browser extension. There is not one specific conversation I can point to; it comes up regularly. I think this replacement would probably lead to a lot of confusion, since I think when people use the word "accident" they often proceed as if it meant something stricter, e.g. that the result was unforeseen or unforeseeable.

If (as in "Concrete Problems", IMO) the point is just to point out that AI can get out-of-control, or that misuse is not the only risk, that's a worthwhile thing to point out, but it doesn't lead to a very useful framework for understanding the nature of the risk(s). As I mentioned elsewhere, it is specifically the dichotomy of "accident vs. misuse" that I think is the most problematic and misleading.

I think the chart is misleading for the following reasons, among others:

- It seems to suppose that there is such a manual, or the goal of creating one. However, if we coordinate effectively, we can simply forgo development and deployment of dangerous technologies ~indefinitely.

- It inappropriately separates "coordination problems" and "everyone follows the manual"

Thanks for your reply!

It continues to feel very bizarre to me to interpret the word “accident” as strongly implying “nobody was being negligent, nobody is to blame, nobody could have possibly seen it coming, etc.”. But I don’t want to deny your lived experience. I guess you interpret the word “accident” as having those connotations, and I figure that if you do, there are probably other people who do too. Maybe it’s a regional dialect thing, or different fields use the term in different ways, who knows. So anyway, going forward, I will endeavor to keep that possibility in mind and maybe put in some extra words of clarification where possible to head off misunderstandings. :)

As I mentioned elsewhere, it is specifically the dichotomy of "accident vs. misuse" that I think is the most problematic and misleading.

I agree with this point.

I do think that there’s a pretty solid dichotomy between (A) “the AGI does things specifically intended by its designers” and (B) “the AGI does things that the designers never wanted it to do”.

I want to use the word “accident” universally for all bad outcomes downstream of (B), regardless of how grossly negligent and reckless people were etc., whereas you don’t want to use the word “accident”. OK, let’s put that aside.

I think (?) that we both agree that bad outcomes downstream of (A) are not necessarily related to “misuse” / “bad actors”. E.g., if there’s a war with AGIs on both sides, and humans are wiped out in the crossfire, I don’t necessarily want to say that either side necessarily was “bad actors”, or that either side’s behavior constitutes “misuse”.

So yeah, I agree that “accident vs misuse” is not a good dichotomy for AGI x-risk.

It inappropriately separates "coordination problems" and "everyone follows the manual"

Thanks, that’s interesting.

I didn’t intend my chart to imply that “everyone follows the manual” doesn’t also require avoiding coordination problems and avoiding bad decisions etc. Obviously it does—or at least, that was obvious to me. Anyway, your feedback is noted. :)

It seems to suppose that there is such a manual, or the goal of creating one. However, if we coordinate effectively, we can simply forgo development and deployment of dangerous technologies ~indefinitely.

I agree that “never ever create AGI” is an option in principle. (It doesn’t strike me as a feasible option in practice; does it to you? I know this is off-topic, I’m just curious)

Your text seems to imply that you don’t think creating such a manual is a goal at all. That seems so crazy to me that I have to assume I’m misunderstanding. (If there were a technical plan that would lead to aligned AGI, we would want to know what it is, right? Isn’t that the main thing that you & your group are working on?)

I do think that there’s a pretty solid dichotomy between (A) “the AGI does things specifically intended by its designers” and (B) “the AGI does things that the designers never wanted it to do”.

1) I don't think this dichotomy is as solid as it seems once you start poking at it... e.g. in your war example, it would be odd to say that the designers of the AGI systems that wiped out humans intended for that outcome to occur. Intentions are perhaps best thought of as incomplete specifications.

2) From our current position, I think “never ever create AGI” is a significantly easier thing to coordinate around than "don't build AGI until/unless we can do it safely". I'm not very worried that we will coordinate too successfully and never build AGI and thus squander the cosmic endowment. This is both because I think that's quite unlikely, and because I'm not sure we'll make very good / the best use of it anyways (e.g. think S-risk, other civilizations).

3) I think the conventional framing of AI alignment is something between vague and substantively incorrect, as well as being misleading. Here is a post I dashed off about that: https://www.lesswrong.com/posts/biP5XBmqvjopvky7P/a-note-on-terminology-ai-alignment-ai-x-safety. I think creating such a manual is an incredibly ambitious goal, and I think more people in this community should aim for more moderate goals. I mostly agree with the perspective in this post: https://coordination.substack.com/p/alignment-is-not-enough, but I could say more on the matter.

4) RE connotations of accident: I think they are often strong.

1) Oh, sorry, what I meant was, the generals in Country A want their AGI to help them “win the war”, even if it involves killing people in Country B + innocent bystanders. And vice-versa for Country B. And then, between the efforts of both AGIs, the humans are all dead. But nothing here was either an “AGI accident unintended-by-the-designers behavior” or “AGI misuse” by my definitions.

But anyway, yes I can imagine situations where it’s unclear whether “the AGI does things specifically intended by its designers”. That’s why I said “pretty solid” and not “rock solid” :) I think we probably disagree about whether these situations are the main thing we should be talking about, versus edge-cases we can put aside most of the time. From my perspective, they’re edge-cases. For example, the scenarios where a power-seeking AGI kills everyone are clearly on the “unintended” side of the (imperfect) dichotomy. But I guess it’s fine that other people are focused on different problems from me, and that “intent-alignment is poorly defined” may be a more central consideration for them. ¯\_(ツ)_/¯

3) I like your “note on terminology post”. But I also think of myself as subscribing to “the conventional framing of AI alignment”. I’m kinda confused that you see the former as counter to the latter.

2) From our current position, I think “never ever create AGI” is a significantly easier thing to coordinate around than "don't build AGI until/unless we can do it safely". I'm not very worried that we will coordinate too successfully and never build AGI and thus squander the cosmic endowment…

If you’re working on that, then I wish you luck! It does seem maybe feasible to buy some time. It doesn’t seem feasible to put off AGI forever. (But I’m not an expert.) It seems you agree.

I think creating such a manual is an incredibly ambitious goal, and I think more people in this community should aim for more moderate goals.

- Obviously the manual will not be written by one person, and obviously some parts of the manual will not be written until the endgame, where we know more about AGI than we do today. But we can still try to make as much progress on the manual as we can, right?

- The post you linked says “alignment is not enough”, which I see as obviously true, but that post doesn’t say “alignment is not necessary”. So, we still need that manual, right?

- Delaying AGI forever would obviate the need for a manual, but it sounds like you’re only hoping to buy time—in which case, at some point we’re still gonna need that manual, right?

- It’s possible that some ways to build AGI are safer than others. Having that information would be very useful, because then we can try to coordinate around never building AGI in the dangerous ways. And that’s exactly the kind of information that one would find in the manual, right?

I feel like I broadly agree with most of the points you make, but I also feel like accident vs misuse are still useful concepts to have.

For example, disasters caused by guns could be seen as:

- Accidents, e.g. killing people by mistaking real guns for prop guns, which may be mitigated with better safety protocols

- Misuse, e.g. school shootings, which may be mitigated with better legislation, better security, etc.

- Other structural causes (?), e.g. guns used in wars, which may be mitigated with better international relations

Nevertheless, all of the above are complex and structural in different ways where it is often counterproductive or plain misleading to assign blame (or credit) to the causal node directly upstream of it (in this case, guns).

While I agree that the majority of AI risks are neither caused by accidents nor misuse, and that they shouldn't be seen as a dichotomy, I do feel that the distinction may still be useful in some contexts, i.e. when considering what the mitigation approaches could look like.

Yes, it may be useful in some very limited contexts. I can't recall a time I have seen it in writing and felt like it was not a counter-productive framing.

AI is highly non-analogous with guns.

I expect irresponsible, reckless, negligent deployment of AI systems without proper accounting of externalities. I consider this the default for any technology with potential for significant externalities, absent regulation.

When something bad happens in such a context, calling it "accident risk" absolves those researching, developing, and/or deploying the technology of responsibility. They should have known better. Some of them almost certainly did. Rationalization, oversight, and misaligned incentives were almost certainly at play. Failing to predict the particular failure mode encountered is no excuse. Having "good intentions" is no excuse.

So... it must be misuse then, right? Well, no. Calling it "misuse" suggests that those researching, developing, and/or deploying the technology set out with nefarious purposes and the technology achieved precisely what they intended. But ~nobody wants to destroy the world. It's just that most people are somewhat selfish and so are willing to trade some x-risk for a large personal benefit.

In summary, saying "accident" makes it sound like an unpredictable effect, instead of a painfully obvious risk that was not taken seriously enough. Saying "misuse" makes it sound like some supervillain or extremist deliberately destroying the world. While some risks may have something more of a flavor of accident or misuse depending on how obvious the risk was, neither of these framings gives a remotely accurate picture of the nature of the problem.

I think this makes it a harmful meme, and ask that others stop making this distinction (without appropriate caveats), and join me in pointing out how it contributes to a confused and misleading discourse when others do.

EtA: Many people have responded that "accident" does not connote "unforeseen" or "not negligent", etc., and instead it should simply be interpreted as something like "a result that was not deliberately selected for". While it can be used this way, I basically disagree that this is how it is usually used; see below:

EtA: as an additional clarification: my main objection is not to the use of "accident" and "misuse", but rather to their use as a dichotomy. Every use of these terms I can recall seeing in writing (other than those that mention structural risk) supports this dichotomy, and it is often made explicitly.