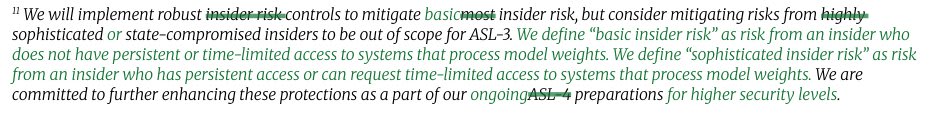

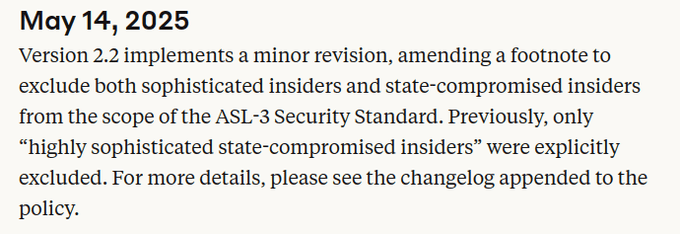

A week ago, Anthropic quietly weakened their ASL-3 security requirements. Yesterday, they announced ASL-3 protections.

I appreciate the mitigations, but quietly lowering the bar at the last minute so you can meet requirements isn't how safety policies are supposed to work.

(This was originally a tweet thread (https://x.com/RyanPGreenblatt/status/1925992236648464774) which I've converted into a LessWrong quick take.)

What is the change and how does it affect security?

9 days ago, Anthropic changed their RSP so that ASL-3 no longer requires being robust to employees trying to steal model weights if the employee has any access to "systems that process model weights".

Anthropic claims this change is minor (and calls insiders with this access "sophisticated insiders").

But, I'm not so sure it's a small change: we don't know what fraction of employees could get this access and "systems that process model weights" isn't explained.

Naively, I'd guess that access to "systems that process model weights" includes employees being able to operate on the model weights in any way other than through a trusted API (a restricted API that we're very confident is secure). If that's right, it could be a hig...

I'd been pretty much assuming that AGI labs' "responsible scaling policies" are LARP/PR, and that if an RSP ever conflicts with their desire to release a model, either the RSP will be swiftly revised, or the testing suite for the model will be revised such that it doesn't trigger the measures the AGI lab doesn't want to trigger. I.e., that RSPs are toothless and that their only purposes are to showcase how Responsible the lab is and to hype up how powerful a given model ended up being.

This seems to confirm that cynicism.

(The existence of the official page tracking the updates is a (smaller) update in the other direction, though. I don't see why they'd have it if they consciously intended to RSP-hack this way.)

Employees at Anthropic don't think the RSP is LARP/PR. My best guess is that Dario doesn't think the RSP is LARP/PR.

This isn't necessarily in conflict with most of your comment.

I think I mostly agree the RSP is toothless. My sense is that for any relatively subjective criteria, like making a safety case for misalignment risk, the criteria will basically come down to "what Jared+Dario think is reasonable". Also, if Anthropic is unable to meet this (very subjective) bar, then Anthropic will still basically do whatever Anthropic leadership thinks is best whether via maneuvering within the constraints of the RSP commitments, editing the RSP in ways which are defensible, or clearly substantially loosening the RSP and then explaining they needed to do this due to other actors having worse precautions (as is allowed by the RSP). I currently don't expect clear cut and non-accidental procedural violations of the RSP (edit: and I think they'll be pretty careful to avoid accidental procedural violations).

I'm skeptical of normal employees having significant influence on high stakes decisions via pressuring the leadership, but empirical evidence could change the views of Anthropic leadership.

Ho...

How you feel about this state of affairs depends a lot on how much you trust Anthropic leadership to make decisions which are good from your perspective.

Another note: My guess is that people on LessWrong tend to be overly pessimistic about Anthropic leadership (in terms of how good of decisions Anthropic leadership will make under the LessWrong person's views and values) and Anthropic employees tend to be overly optimistic.

I'm less confident that people on LessWrong are overly pessimistic, but they at least seem too pessimistic about the intentions/virtue of Anthropic leadership.

Not the main thrust of the thread, but for what it's worth, I find it somewhat anti-helpful to flatten things into a single variable of "how much you trust Anthropic leadership to make decisions which are good from your perspective", and then ask how optimistic/pessimistic you are about this variable.

I think I am much more optimistic about Anthropic leadership on many axes relative to an overall survey of the US population or Western population – I expect them to be more libertarian, more in favor of free speech, more pro economic growth, more literate, more self-aware, higher IQ, and a bunch of things.

I am more pessimistic about their ability to withstand the pressures of a trillion dollar industry to shape their incentives than the people who are at Anthropic.

I believe the people working there are siloing themselves intellectually into an institution facing incredible financial incentives for certain bottom lines like "rapid AI progress is inevitable" and "it's reasonably likely we can solve alignment" and "beating China in the race is a top priority", and aren't allowed to talk to outsiders about most details of their work, and this is a key reason that I expect them to sc...

I think the main thing I want to convey is that I think you're saying that LWers (of which I am one) have a very low opinion of the integrity of people at Anthropic, but what I'm actually saying is that their integrity is no match for the forces that they are being tested with.

I don't need to be able to predict a lot of fine details about individuals' decision-making in order to be able to have good estimates of these two quantities, and comparing them is the second-most important question relating to whether it's good to work on capabilities at Anthropic. (The first one is a basic ethical question about working on a potentially extinction-causing technology that is not much related to the details of which capabilities company you're working on.)

Employees at Anthropic don't think the RSP is LARP/PR. My best guess is that Dario doesn't think the RSP is LARP/PR.

Yeah, I don't think this is necessarily in contradiction with my comment. Things can be effectively just LARP/PR without being consciously LARP/PR. (Indeed, this is likely the case in most instances of LARP-y behavior.)

Agreed on the rest.

I'm currently working as a contractor at Anthropic in order to get employee-level model access as part of a project I'm working on. The project is a model organism of scheming, where I demonstrate scheming arising somewhat naturally with Claude 3 Opus. So far, I’ve done almost all of this project at Redwood Research, but my access to Anthropic models will allow me to redo some of my experiments in better and simpler ways and will allow for some exciting additional experiments. I'm very grateful to Anthropic and the Alignment Stress-Testing team for providing this access and supporting this work. I expect that this access and the collaboration with various members of the alignment stress testing team (primarily Carson Denison and Evan Hubinger so far) will be quite helpful in finishing this project.

I think that this sort of arrangement, in which an outside researcher is able to get employee-level access at some AI lab while not being an employee (while still being subject to confidentiality obligations), is potentially a very good model for safety research, for a few reasons, including (but not limited to):

- For some safety research, it’s helpful to have model access in ways that la

Yay Anthropic. This is the first example I'm aware of of a lab sharing model access with external safety researchers to boost their research (like, not just for evals). I wish the labs did this more.

[Edit: OpenAI shared GPT-4 access with safety researchers including Rachel Freedman before release. OpenAI shared GPT-4 fine-tuning access with academic researchers including Jacob Steinhardt and Daniel Kang in 2023. Yay OpenAI. GPT-4 fine-tuning access is still not public; some widely-respected safety researchers I know recently were wishing for it, and were wishing they could disable content filters.]

I'd be surprised if this was employee-level access. I'm aware of a red-teaming program that gave early API access to specific versions of models, but not anything like employee-level.

It was a secretive program — it wasn’t advertised anywhere, and we had to sign an NDA about its existence (which we have since been released from). I got the impression that this was because OpenAI really wanted to keep the existence of GPT4 under wraps. Anyway, that means I don’t have any proof beyond my word.

I've heard from a credible source that OpenAI substantially overestimated where other AI companies were at with respect to RL and reasoning when they released o1. Employees at OpenAI believed that other top AI companies had already figured out similar things when they actually hadn't and were substantially behind. OpenAI had been sitting on the improvements driving o1 for a while prior to releasing it. Correspondingly, releasing o1 resulted in much larger capabilities externalities than OpenAI expected. I think there was one more case like this either from OpenAI or GDM where employees had a large misimpression about capabilities progress at other companies causing a release they wouldn't do otherwise.

One key takeaway from this is that employees at AI companies might be very bad at predicting the situation at other AI companies (likely making coordination more difficult by default). This includes potentially thinking they are in a close race when they actually aren't. Another update is that keeping secrets about something like reasoning models worked surprisingly well to prevent other companies from copying OpenAI's work even though there was a bunch of public reporting (and presumably many rumors) about this.

One more update is that OpenAI employees might unintentionally accelerate capabilities progress at other actors via overestimating how close they are. My vague understanding was that they haven't updated much, but I'm unsure. (Consider updating more if you're an OpenAI employee!)

Alex Mallen also noted a connection with people generally thinking they are in a race when they actually aren't: https://forum.effectivealtruism.org/posts/cXBznkfoPJAjacFoT/are-you-really-in-a-race-the-cautionary-tales-of-szilard-and

Should we update against seeing relatively fast AI progress in 2025 and 2026? (Maybe (re)assess this after the GPT-5 release.)

Around the early o3 announcement (and maybe somewhat before that?), I felt like there were some reasonably compelling arguments for putting a decent amount of weight on relatively fast AI progress in 2025 (and maybe in 2026):

- Maybe AI companies will be able to rapidly scale up RL further because RL compute is still pretty low (so there is a bunch of overhang here); this could cause fast progress if the companies can effectively directly RL on useful stuff or RL transfers well even from more arbitrary tasks (e.g. competition programming)

- Maybe OpenAI hasn't really tried hard to scale up RL on agentic software engineering and has instead focused on scaling up single turn RL. So, when people (either OpenAI themselves or other people like Anthropic) scale up RL on agentic software engineering, we might see rapid progress.

- It seems plausible that larger pretraining runs are still pretty helpful, but prior runs have gone wrong for somewhat random reasons. So, maybe we'll see some more successful large pretraining runs (with new improved algorithms) in 2025.

I u...

I basically agree with this whole post. I used to think there were double-digit % chances of AGI in each of 2024 and 2025 and 2026, but now I'm more optimistic: it seems like "Just redirect existing resources and effort to scale up RL on agentic SWE" is now unlikely to be sufficient (whereas in the past we didn't have trends to extrapolate and we had some scary big jumps like o3 to digest).

I still think there's some juice left in that hypothesis though. Consider how in 2020, one might have thought "Now they'll just fine-tune these models to be chatbots and it'll become a mass consumer product" and then in mid-2022 various smart people I know were like "huh, that hasn't happened yet, maybe LLMs are hitting a wall after all" but it turns out it just took till late 2022/early 2023 for the kinks to be worked out enough.

Also, we should have some credence on new breakthroughs e.g. neuralese, online learning, whatever. Maybe like 8%/yr? Of a breakthrough that would lead to superhuman coders within a year or two, after being appropriately scaled up and tinkered with.

Interestingly, reasoning doesn't seem to help Anthropic models on agentic software engineering tasks, but does help OpenAI models.

Is there a standard citation for this?

How do you come by this fact?

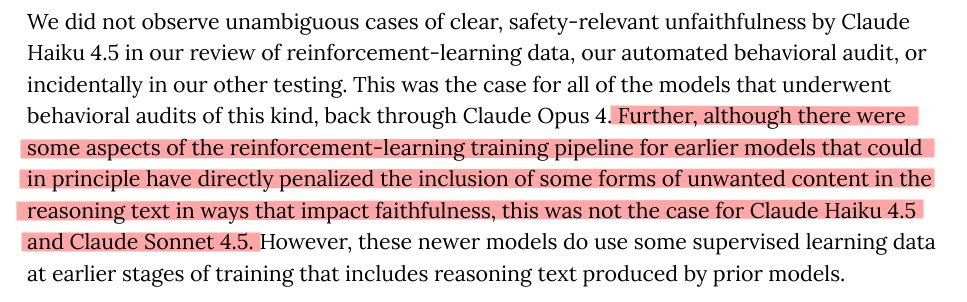

Anthropic, GDM, and xAI say nothing about whether they train against Chain-of-Thought (CoT) while OpenAI claims they don't[1].

I think AI companies should be transparent about whether (and how) they train against CoT. While OpenAI is doing a better job at this than other companies, I think all of these companies should provide more information about this.

It's particularly striking that Anthropic says nothing about whether they train against CoT given their system card (for 4.5 Sonnet) is very thorough and includes a section on "Reasoning faithfulness" (kudos to them for providing so much other information!). Naively, this seems like some evidence that they are training against CoT and didn't want to disclose this.[2]

I think we should try to create an incentive gradient that pushes AI companies to disclose information even if that information makes them look bad, so we should make companies feel some heat for not disclosing (reasonably) important information like how/whether they are training against CoT.

It's worth noting that some versions of training against CoT might be totally fine (as in, they don't incentivize obfuscation of misaligned reasoning) and that some particular appr...

Anthropic has now clarified this in their system card for Claude Haiku 4.5:

Thanks to them for doing this!

See also Sam Bowman's tweet thread about this.

I thought it would be helpful to post about my timelines and what the timelines of people in my professional circles (Redwood, METR, etc) tend to be.

Concretely, consider the outcome of: AI 10x’ing labor for AI R&D[1], measured by internal comments by credible people at labs that AI is 90% of their (quality adjusted) useful work force (as in, as good as having your human employees run 10x faster).
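One way to read the link between "AI is 90% of the work force" and "10x faster" is as simple arithmetic: if humans supply the remaining 10% of quality-adjusted labor, total labor is 1 / (1 - 0.9) times the human-only baseline. The sketch below is only an illustration under that reading; the function name `labor_multiplier` and the assumption that AI and human labor add linearly are mine, not part of the definition above.

```python
def labor_multiplier(ai_share):
    """Total quality-adjusted labor relative to a human-only baseline.

    Assumption (for illustration only): AI and human labor are perfectly
    additive, and ai_share is the AI fraction of total useful labor.
    """
    if not 0 <= ai_share < 1:
        raise ValueError("ai_share must be in [0, 1)")
    return 1.0 / (1.0 - ai_share)


# A 90% AI share corresponds to roughly a 10x multiplier under this reading.
print(labor_multiplier(0.9))
```

Under this reading the two metrics line up only if labor is additive in this way; if AI and human labor are complements or bottleneck each other, the mapping between share and speedup would differ.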

Here are my predictions for this outcome:

- 25th percentile: 2 years (Jan 2027)

- 50th percentile: 5 years (Jan 2030)

The views of other people (Buck, Beth Barnes, Nate Thomas, etc) are similar.

I expect that outcomes like “AIs are capable enough to automate virtually all remote workers” and “the AIs are capable enough that immediate AI takeover is very plausible (in the absence of countermeasures)” come shortly after (median 1.5 years and 2 years after respectively under my views).

[1] Only including speedups due to R&D, not including mechanisms like synthetic data generation.

AI is 90% of their (quality adjusted) useful work force (as in, as good as having your human employees run 10x faster).

I don't grok the "% of quality adjusted work force" metric. I grok the "as good as having your human employees run 10x faster" metric but it doesn't seem equivalent to me, so I recommend dropping the former and just using the latter.

My timelines are now roughly similar on the object level (maybe a year slower for 25th and 1-2 years slower for 50th), and procedurally I also now defer a lot to Redwood and METR engineers. More discussion here: https://www.lesswrong.com/posts/K2D45BNxnZjdpSX2j/ai-timelines?commentId=hnrfbFCP7Hu6N6Lsp

Slightly hot take: Longtermist capacity/community building is pretty underdone at current margins and retreats (focused on AI safety, longtermism, or EA) are also underinvested in. By "longtermist community building", I mean community building focused on longtermism rather than AI safety. I think retreats are generally underinvested in at the moment. I'm also sympathetic to thinking that general undergrad and high school capacity building (AI safety, longtermist, or EA) is underdone, but this seems less clear-cut.

I think this underinvestment is due to a mix of mistakes on the part of Open Philanthropy (and Good Ventures)[1] and capacity building being lower status than it should be.

Here are some reasons why I think this work is good:

- It's very useful for there to be people who are actually trying really hard to do the right thing and they often come through these sorts of mechanisms. Another way to put this is that flexible, impact-obsessed people are very useful.

- Retreats make things feel much more real to people and result in people being more agentic and approaching their choices more effectively.

- Programs like MATS are good, but they get somewhat different people at a somewhat different part of the funnel, so they don

Precise AGI timelines don't matter that much.

While I do spend some time discussing AGI timelines (and I've written some posts about it recently), I don't think moderate quantitative differences in AGI timelines matter that much for deciding what to do[1]. For instance, having a 15-year median rather than a 6-year median doesn't make that big of a difference. That said, I do think that moderate differences in the chance of very short timelines (i.e., less than 3 years) matter more: going from a 20% chance to a 50% chance of full AI R&D automation within 3 years should potentially make a substantial difference to strategy.[2]

Additionally, my guess is that the most productive way to engage with discussion around timelines is mostly to not care much about resolving disagreements, but then when there appears to be a large chance that timelines are very short (e.g., >25% in <2 years) it's worthwhile to try hard to argue for this.[3] I think takeoff speeds are much more important to argue about when making the case for AI risk.

I do think that having somewhat precise views is helpful for some people in doing relatively precise prioritization within people already working on safe...

Inference compute scaling might imply we first get fewer, smarter AIs.

Prior estimates imply that the compute used to train a future frontier model could also be used to run tens or hundreds of millions of human equivalents per year at the first time when AIs are capable enough to dominate top human experts at cognitive tasks[1] (examples here from Holden Karnofsky, here from Tom Davidson, and here from Lukas Finnveden). I think inference time compute scaling (if it worked) might invalidate this picture and might imply that you get far smaller numbers of human equivalents when you first get performance that dominates top human experts, at least in short timelines where compute scarcity might be important. Additionally, this implies that at the point when you have abundant AI labor which is capable enough to obsolete top human experts, you might also have access to substantially superhuman (but scarce) AI labor (and this could pose additional risks).

The point I make here might be obvious to many, but I thought it was worth making as I haven't seen this update from inference time compute widely discussed in public.[2]

However, note that if inference compute allows for trading off betw...

Sometimes people think of "software-only singularity" as an important category of ways AI could go. A software-only singularity can roughly be defined as when you get increasing-returns growth (hyper-exponential) just via the mechanism of AIs increasing the labor input to AI capabilities software[1] R&D (i.e., keeping fixed the compute input to AI capabilities).

While the software-only singularity dynamic is an important part of my model, I often find it useful to more directly consider the outcome that software-only singularity might cause: the feasibility of takeover-capable AI without massive compute automation. That is, will the leading AI developer(s) be able to competitively develop AIs powerful enough to plausibly take over[2] without previously needing to use AI systems to massively (>10x) increase compute production[3]?

[This is by Ryan Greenblatt and Alex Mallen]

We care about whether the developers' AI greatly increases compute production because this would require heavy integration into the global economy in a way that relatively clearly indicates to the world that AI is transformative. Greatly increasing compute production would require building additional fabs whi...

I'm not sure if the definition of takeover-capable-AI (abbreviated as "TCAI" for the rest of this comment) in footnote 2 quite makes sense. I'm worried that too much of the action is in "if no other actors had access to powerful AI systems", and not that much action is in the exact capabilities of the "TCAI". In particular: Maybe we already have TCAI (by that definition) because if a frontier AI company or a US adversary was blessed with the assumption "no other actor will have access to powerful AI systems", they'd have a huge advantage over the rest of the world (as soon as they develop more powerful AI), plausibly implying that it'd be right to forecast a >25% chance of them successfully taking over if they were motivated to try.

And this seems somewhat hard to disentangle from stuff that is supposed to count according to footnote 2, especially: "Takeover via the mechanism of an AI escaping, independently building more powerful AI that it controls, and then this more powerful AI taking over would" and "via assisting the developers in a power grab, or via partnering with a US adversary". (Or maybe the scenario in the 1st paragraph is supposed to be excluded because current AI isn't...

I put roughly 50% probability on feasibility of software-only singularity.[1]

(I'm probably going to be reinventing a bunch of the compute-centric takeoff model in slightly different ways below, but I think it's faster to partially reinvent than to dig up the material, and I probably do use a slightly different approach.)

My argument here will be a bit sloppy and might contain some errors. Sorry about this. I might be more careful in the future.

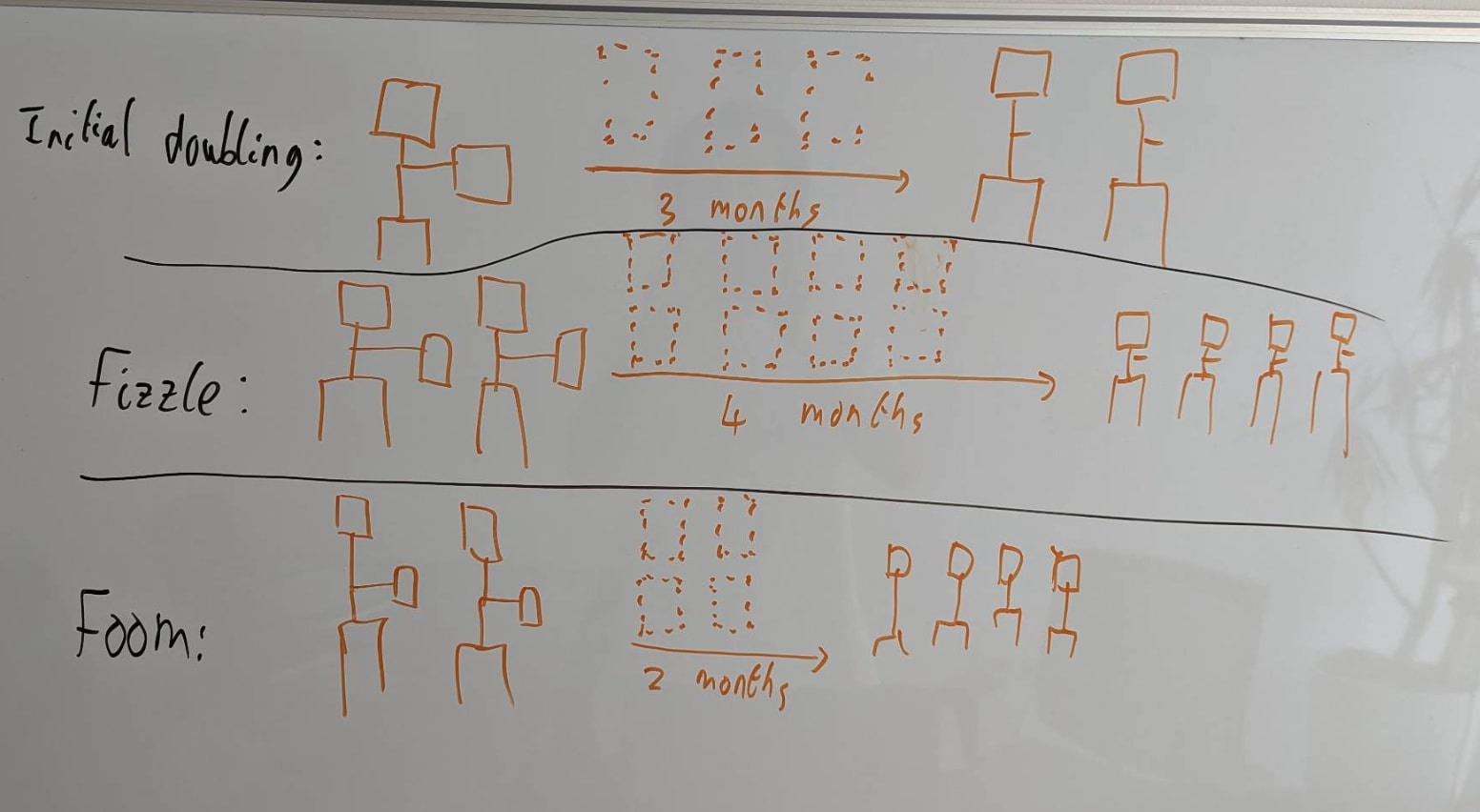

The key question for software-only singularity is: "When the rate of labor production is doubled (as in, as if your employees ran 2x faster[2]), does that more than double or less than double the rate of algorithmic progress? That is, algorithmic progress as measured by how fast we increase the labor production per FLOP/s (as in, the labor production from AI labor on a fixed compute base).". This is a very economics-style way of analyzing the situation, and I think this is a pretty reasonable first guess. Here's a diagram I've stolen from Tom's presentation on explosive growth illustrating this:

Basically, every time you double the AI labor supply, does the time until the next doubling (driven by algorithmic progress) increase (fizzle) or decre...

Hey Ryan! Thanks for writing this up -- I think this whole topic is important and interesting.

I was confused about how your analysis related to the Epoch paper, so I spent a while with Claude analyzing it. I did a re-analysis that finds similar results, but also finds (I think) some flaws in your rough estimate. (Keep in mind I'm not an expert myself, and I haven't closely read the Epoch paper, so I might well be making conceptual errors. I think the math is right though!)

I'll walk through my understanding of this stuff first, then compare to your post. I'll be going a little slowly (A) to help refresh my own memory when referencing this later, (B) to make it easy to call out mistakes, and (C) to hopefully make this legible to others who want to follow along.

Using Ryan's empirical estimates in the Epoch model

The Epoch model

The Epoch paper models growth with the following equation:

1. $\frac{d \ln A}{dt} \sim A^{-\beta} E^{\lambda}$,

where A = efficiency and E = research input. We want to consider worlds with a potential software takeoff, meaning that increases in AI efficiency directly feed into research input, which we model as $\frac{d \ln A}{dt} \sim A^{-\beta} A^{\lambda}$. So the key consideration seems to be the ratio...

Here's my own estimate for this parameter:

Once AI has automated AI R&D, will software progress become faster or slower over time? This depends on the extent to which software improvements get harder to find as software improves – the steepness of the diminishing returns.

We can ask the following crucial empirical question:

**When (cumulative) cognitive research inputs double, how many times does software double?**

(In growth models of a software intelligence explosion, the answer to this empirical question is a parameter called r.)

If the answer is “< 1”, then software progress will slow down over time. If the answer is “1”, software progress will remain at the same exponential rate. If the answer is “>1”, software progress will speed up over time.

The bolded question can be studied empirically, by looking at how many times software has doubled each time the human researcher population has doubled.
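To make the three regimes for r concrete, here is a toy sketch. It is not the model from any particular paper: it assumes software level S relates to cumulative research input R as S = R**r (so doubling R gives r doublings of S), and that research input accrues at rate dR/dt = S, since AI labor on a fixed compute base scales with software. The function name `software_doubling_times` and these simplifications are my own.

```python
import math


def software_doubling_times(r, n_doublings=5):
    """Time taken by each successive doubling of the software level S.

    Toy assumptions: S = R**r and dR/dt = S, hence dR/dt = R**r.
    The time to move R from a to b is the integral of dR / R**r.
    """
    times = []
    for k in range(n_doublings):
        r_start = 2 ** (k / r)        # value of R at which S = 2**k
        r_end = 2 ** ((k + 1) / r)    # value of R at which S = 2**(k+1)
        if math.isclose(r, 1.0):
            dt = math.log(r_end / r_start)
        else:
            dt = (r_end ** (1 - r) - r_start ** (1 - r)) / (1 - r)
        times.append(dt)
    return times


for r in (0.7, 1.0, 1.5):
    print(r, [round(t, 3) for t in software_doubling_times(r)])
```

In this toy setup, successive doubling times lengthen when r < 1, stay constant when r = 1, and shrink when r > 1, which matches the qualitative claim above. It ignores compute growth, delays, and any ceiling on software efficiency.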

(What does it mean for “software” to double? A simple way of thinking about this is that software doubles when you can run twice as many copies of your AI with the same compute. But software improvements don...

Here's a simple argument I'd be keen to get your thoughts on:

On the Possibility of a Tastularity

Research taste is the collection of skills including experiment ideation, literature review, experiment analysis, etc. that collectively determine how much you learn per experiment on average (perhaps alongside another factor accounting for inherent problem difficulty / domain difficulty, of course, and diminishing returns)

Human researchers seem to vary quite a bit in research taste--specifically, the difference between 90th percentile professional human researchers and the very best seems like maybe an order of magnitude? Depends on the field, etc. And the tails are heavy; there is no sign of the distribution bumping up against any limits.

Yet the causes of these differences are minor! Take the very best human researchers compared to the 90th percentile. They'll have almost the same brain size, almost the same amount of experience, almost the same genes, etc. in the grand scale of things.

This means we should assume that if the human population were massively bigger, e.g. trillions of times bigger, there would be humans whose brains don't look that different from the brains of...

As part of the alignment faking paper, I hosted a website with ~250k transcripts from our experiments (including transcripts with alignment-faking reasoning). I didn't include a canary string (which was a mistake).[1]

The current state is that the website has a canary string, a robots.txt, and a terms of service which prohibits training. The GitHub repo which hosts the website is now private. I'm tentatively planning on putting the content behind Cloudflare Turnstile, but this hasn't happened yet.

The data is also hosted in zips in a publicly accessible Google Drive folder. (Each file in this folder has a canary.) I'm currently not planning on password protecting this or applying any other mitigation here.

Other than putting things behind Cloudflare Turnstile, I'm not taking ownership for doing anything else at the moment.

It's possible that I actively want this data to be possible to scrape at this point because maybe the data was scraped prior to the canary being added and if it was scraped again then the new version would replace the old version and then hopefully not get trained on due to the canary. Adding a robots.txt might prevent this replacement as would putting it behind Cloudflare ...

Thanks for taking these steps!

Context: I was pretty worried about self-fulfilling misalignment data poisoning (https://turntrout.com/self-fulfilling-misalignment) after reading some of the Claude 4 model card. I talked with @Monte M and then Ryan about possible steps here & encouraged action on the steps besides the canary string. I've considered writing up a "here are some steps to take" guide but honestly I'm not an expert.

Probably there's existing work on how to host data so that AI won't train on it.

If not: I think it'd be great for someone to make a template website for e.g. signing up with CloudFlare. Maybe a repo that has the skeleton of a dataset-hosting website (with robots.txt & ToS & canary string included) for people who want to host misalignment data more responsibly. Ideally those people would just have to

- Sign up with e.g. Cloudflare using a linked guide,

- Clone the repo,

- Fill in some information and host their dataset.

After all, someone who has finally finished their project and then discovers that they're supposed to traverse some arduous process is likely to just avoid it.

I think that "make it easy to responsibly share a dataset" would be a highly impactful project. Anthropic's Claude 4 model card already argues that dataset leakage hurt Claude 4's alignment (before mitigations).

For my part, I'll put out a $500 bounty on someone completing this project and doing a good job of it (as judged by me / whomever I consult). I'd also tweet it out and talk about how great it is that [person] completed the project :) I don't check LW actively, so if you pursue this, please email alex@turntrout.com.

EDIT: Thanks to my coworker Anna Wang, the bounty is doubled to $1,000! Completion criterion is:

- An unfamiliar researcher can follow the instructions and have their dataset responsibly uploaded within one hour

- Please check proposed solutions with dummy datasets and scrapers
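As a rough starting point for the "check with dummy datasets and scrapers" step, here is a minimal sketch of what a template repo's sanity check might look like. Everything named here is an assumption for illustration: the `CANARY` value is a placeholder rather than a real canary GUID, the crawler list is just an example set of user-agent tokens, and the helper names are hypothetical. It only uses Python's standard `urllib.robotparser`.

```python
from urllib.robotparser import RobotFileParser

# Placeholder only; a real deployment would use an established canary GUID.
CANARY = "CANARY-PLACEHOLDER-DO-NOT-TRAIN"

# Example crawler user-agent tokens; this list is an assumption, not exhaustive.
AI_CRAWLERS = ["GPTBot", "CCBot", "ClaudeBot", "Google-Extended"]


def build_robots_txt(crawlers):
    """Return robots.txt text that disallows every path for each crawler."""
    lines = []
    for agent in crawlers:
        lines += [f"User-agent: {agent}", "Disallow: /", ""]
    return "\n".join(lines)


def add_canary(text, canary=CANARY):
    """Prepend the canary string unless the text already contains it."""
    return text if canary in text else f"{canary}\n{text}"


def blocked_agents(robots_txt, url, agents):
    """List the agents that this robots.txt forbids from fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [a for a in agents if not parser.can_fetch(a, url)]


robots = build_robots_txt(AI_CRAWLERS)
dummy_page = add_canary("dummy transcript text")
print(blocked_agents(robots, "https://example.com/transcripts/1.html", AI_CRAWLERS))
```

Note that robots.txt and canary strings only affect scrapers that choose to honor them, so a check like this complements rather than replaces access controls such as Cloudflare Turnstile.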

Someone thought it would be useful to quickly write up a note on my thoughts on scalable oversight research, e.g., research into techniques like debate or generally improving the quality of human oversight using AI assistance or other methods. Broadly, my view is that this is a good research direction and I'm reasonably optimistic that work along these lines can improve our ability to effectively oversee somewhat smarter AIs which seems helpful (on my views about how the future will go).

I'm most excited for:

- work using control-style adversarial analysis where the aim is to make it difficult for AIs to subvert the oversight process (if they were trying to do this)

- work which tries to improve outputs in conceptually loaded hard-to-check cases like philosophy, strategy, or conceptual alignment/safety research (without necessarily doing any adversarial analysis and potentially via relying on generalization)

- work which aims to robustly detect (or otherwise mitigate) reward hacking in highly capable AIs, particularly AIs which are capable enough that by default human oversight would often fail to detect reward hacks[1]

I'm skeptical of scalable oversight style methods (e.g., debate, ID...

The capability evaluations in the Opus 4.5 system card seem worrying. The provided evidence in the system card seems pretty weak (in terms of how much it supports Anthropic's claims). I plan to write more about this in the future; here are some of my more quickly written up thoughts.

[This comment is based on this X/twitter thread I wrote]

I ultimately basically agree with their judgments about the capability thresholds they discuss. (I think the AI is very likely below the relevant AI R&D threshold, the CBRN-4 threshold, and the cyber thresholds.) But, if I just had access to the system card, I would be much more unsure. My view depends a lot on assuming some level of continuity from prior models (and assuming 4.5 Opus wasn't a big scale up relative to prior models), on other evidence (e.g. METR time horizon results), and on some pretty illegible things (e.g. making assumptions about evaluations Anthropic ran or about the survey they did).

Some specifics:

- Autonomy: For autonomy, evals are mostly saturated so they depend on an (underspecified) employee survey. They do specify a threshold, but the threshold seems totally consistent with a large chance of being above the relevant RS

DeepSeek's success isn't much of an update on a smaller US-China gap in short timelines because security was already a limiting factor

Some people seem to have updated towards a narrower US-China gap around the time of transformative AI if transformative AI is soon, due to recent releases from DeepSeek. However, since I expect frontier AI companies in the US will have inadequate security in short timelines and China will likely steal their models and algorithmic secrets, I don't consider the current success of China's domestic AI industry to be that much of an update. Furthermore, if DeepSeek or other Chinese companies were in the lead and didn't open-source their models, I expect the US would steal their models and algorithmic secrets. Consequently, I expect these actors to be roughly equal in short timelines, except in their available compute and potentially in how effectively they can utilize AI systems.

I do think that the Chinese AI industry looking more competitive makes security look somewhat less appealing (and especially less politically viable) and makes it look like their adaptation time to stolen models and/or algorithmic secrets will be shorter. Marginal improvements in...

Recently, @Daniel Kokotajlo and I were talking about the probability that AIs trained using "business as usual RLHF" end up being basically aligned rather than conspiring against us and our tests.[1] One intuition pump we ended up discussing is the prospects of octopus misalignment. Overall, my view is that directly considering the case with AIs (and what various plausible scenarios would look like) is more informative than analogies like this, but analogies like this are still somewhat useful to consider.

So, what do I mean by octopus misalignment? Suppose a company breeds octopuses[2] until the point where they are as smart and capable as the best research scientists[3] at AI companies. We'll suppose that this magically happens as fast as normal AI takeoff, so there are many generations per year. So, let's say they currently have octopuses which can speak English and write some code but aren't smart enough to be software engineers or automate any real jobs. (As in, they are as capable as AIs are today, roughly speaking.) And they get to the level of top research scientists in mid-2028. Along the way, the company attempts to select them for being kind, loyal, and obedient. The comp...

A response to Dwarkesh's post arguing continual learning is a bottleneck.

This is a response to Dwarkesh's post "Why I have slightly longer timelines than some of my guests". I originally posted this response on twitter here.

I agree with much of this post. I also have roughly 2032 medians to things going crazy, I agree learning on the job is very useful, and I'm also skeptical we'd see massive white collar automation without further AI progress.

However, I think Dwarkesh is wrong to suggest that RL fine-tuning can't be qualitatively similar to how humans learn. In the post, he discusses AIs constructing verifiable RL environments for themselves based on human feedback and then argues this wouldn't be flexible and powerful enough to work, but RL could be used more similarly to how humans learn.

My best guess is that the way humans learn on the job is mostly by noticing when something went well (or poorly) and then sample efficiently updating (with their brain doing something analogous to an RL update). In some cases, this is based on external feedback (e.g. from a coworker) and in some cases it's based on self-verification: the person just looking at the outcome of their actions and t...

Some people seem to think my timelines have shifted a bunch while they've only moderately changed.

Relative to my views at the start of 2025, my median (50th percentile) for AIs fully automating AI R&D was pushed back by around 2 years—from something like Jan 2032 to Jan 2034. My 25th percentile has shifted similarly (though perhaps more importantly) from maybe July 2028 to July 2030. Obviously, my numbers aren't fully precise and vary some over time. (E.g., I'm not sure I would have quoted these exact numbers for this exact milestone at the start of the year; these numbers for the start of the year are partially reverse engineered from this comment.)

Fully automating AI R&D is a pretty high milestone; my current numbers for something like "AIs accelerate AI R&D as much as what would happen if employees ran 10x faster (e.g. by ~fully automating research engineering and some other tasks)" are probably 50th percentile Jan 2032 and 25th percentile Jan 2029.[1]

I'm partially posting this so there is a record of my views; I think it's somewhat interesting to observe this over time. (That said, I don't want to anchor myself, which does seem like a serious downside. I should slid...

People often say US-China deals to slow AI progress and develop AI more safely would be hard to enforce/verify.

However, there are easy-to-enforce deals: each side destroys a fraction of its chips at some level of AI capability. This still seems like it could be helpful and it's pretty easy to verify.

This is likely worse than a well-executed comprehensive deal which would allow for productive non-capabilities uses of the compute (e.g., safety or even just economic activity). But it's hard to verify that chips aren't being used to advance capabilities, while it's easy to check whether they have been destroyed.

See also: Daniel's post on "Cull the GPUs".

A response to "State of play of AI progress (and related brakes on an intelligence explosion)" by Nathan Lambert.

Nathan Lambert recently wrote a piece about why he doesn't expect a software-only intelligence explosion. I responded in this substack comment which I thought would be worth copying here.

As someone who thinks a rapid (software-only) intelligence explosion is likely, I thought I would respond to this post and try to make the case in favor. I tend to think that AI 2027 is quite an aggressive but plausible scenario.

I interpret the core argument in AI 2027 as:

- We're on track to build AIs which can fully automate research engineering in a few years. (Or at least, this is plausible, like >20%.) AI 2027 calls this level of AI "superhuman coder". (Argument: https://ai-2027.com/research/timelines-forecast)

- Superhuman coders will ~5x accelerate AI R&D because ultra fast and cheap superhuman research engineers would be very helpful. (Argument: https://ai-2027.com/research/takeoff-forecast#sc-would-5x-ai-randd).

- Once you have superhuman coders, unassisted humans would only take a moderate number of years to make AIs which can automate all of AI research ("superhuman AI

About 1 year ago, I wrote up a ready-to-go plan for AI safety focused on current science (what we roughly know how to do right now). This is targeting reducing catastrophic risks from the point when we have transformatively powerful AIs (e.g. AIs similarly capable to humans).

I never finished this doc, and it is now considerably out of date relative to how I currently think about what should happen, but I still think it might be helpful to share.

Here is the doc. I don't particularly want to recommend people read this doc, but it is possible that someone will find it valuable to read.

I plan on trying to think through the best ready-to-go plan roughly once a year. Buck and I have recently started work on a similar effort. Maybe this time we'll actually put out an overall plan rather than just spinning off various docs.

This seems like a great activity, thank you for doing/sharing it. I disagree with the claim near the end that this seems better than Stop, and in general felt somewhat alarmed throughout at (what seemed to me like) some conflation/conceptual slippage between arguments that various strategies were tractable, and that they were meaningfully helpful. Even so, I feel happy that the world contains people sharing things like this; props.

I disagree with the claim near the end that this seems better than Stop

At the start of the doc, I say:

It’s plausible that the optimal approach for the AI lab is to delay training the model and wait for additional safety progress. However, we’ll assume the situation is roughly: there is a large amount of institutional will to implement this plan, but we can only tolerate so much delay. In practice, it’s unclear if there will be sufficient institutional will to faithfully implement this proposal.

Towards the end of the doc I say:

This plan requires quite a bit of institutional will, but it seems good to at least know of a concrete achievable ask to fight for other than “shut everything down”. I think careful implementation of this sort of plan is probably better than “shut everything down” for most AI labs, though I might advocate for slower scaling and a bunch of other changes on current margins.

Presumably, you're objecting to 'I think careful implementation of this sort of plan is probably better than “shut everything down” for most AI labs'.

My current view is something like:

- If there was broad, strong, and durable political will and buy in for heavily prioritizing AI tak

I think it might be worth quickly clarifying my views on activation addition and similar things (given various discussion about this). Note that my current views are somewhat different than some comments I've posted in various places (and my comments are somewhat incoherent overall), because I’ve updated based on talking to people about this over the last week.

This is quite in the weeds and I don’t expect that many people should read this.

- It seems like activation addition sometimes has a higher level of sample efficiency in steering model behavior compared with baseline training methods (e.g. normal LoRA finetuning). These comparisons seem most meaningful in straightforward head-to-head comparisons (where you use both methods in the most straightforward way). I think the strongest evidence for this is in Liu et al.

- Contrast pairs are a useful technique for variance reduction (to improve sample efficiency), but may not be that important (see Liu et al. again). It's relatively natural to capture this effect using activation vectors, but there is probably some nice way to incorporate this into SGD. Perhaps DPO does this? Perhaps there is something else?

- Activation addition wo

Some of my proposals for empirical AI safety work.

I sometimes write proposals for empirical AI safety work without publishing the proposal (as the doc is somewhat rough or hard to understand). But, I thought it might be useful to at least link my recent project proposals publicly in case someone would find them useful.

- Decoding opaque reasoning in current models

- Safe distillation

- Basic science of teaching AIs synthetic facts[1]

- How to do elicitation without learning

If you're interested in empirical project proposals, you might also be interested in "7+ tractable directions in AI control".

[1] I've actually already linked this (and the proposal in the next bullet) publicly from "7+ tractable directions in AI control", but I thought it could be useful to link here as well.

Sometimes people talk about how AIs will be very superhuman at a bunch of (narrow) domains. A key question related to this is how much this generalizes. Here are two different possible extremes for how this could go:

- It's effectively like an attached narrow weak AI: The AI is superhuman at things like writing ultra fast CUDA kernels, but from the AI's perspective, this is sort of like it has a weak AI tool attached to it (in a well integrated way) which is superhuman at this skill. The part which is writing these CUDA kernels (or otherwise doing the task) is effectively weak and can't draw in a deep way on the AI's overall skills or knowledge to generalize (likely it can shallowly draw on these in a way which is similar to the overall AI providing input to the weak tool AI). Further, you could actually break out these capabilities into a separate weak model that humans can use. Humans would use this somewhat less fluently as they can't use it as quickly and smoothly due to being unable to instantaneously translate their thoughts and not being absurdly practiced at using the tool (like AIs would be), but the difference is ultimately mostly convenience and practice.

- Integrated super

I don't particularly like extrapolating revenue as a methodology for estimating timelines to when AI is (e.g.) a substantial fraction of US GDP (say 20%)[1], but I do think it would be worth doing a more detailed version of this timelines methodology. This is in response to Ege's blog post with his version of this forecasting approach.

Here is my current favorite version of this (though you could do better and this isn't that careful):

AI company revenue will be perhaps ~$20 billion this year and has roughly 3x'd per year over the last 2.5 years. Let's say 1/2 of this is in the US for $10 billion this year (maybe somewhat of an underestimate, but whatever). Maybe GDP impacts are 10x higher than revenue because AI companies don't capture all of the value they create (though they might be somewhat lower if AI just displaces other revenue without adding that much value), so to hit 20% of US GDP (~$6 trillion), AI companies would need around $600 billion in US revenue. The naive exponential extrapolation indicates we hit this level of annualized revenue in about 4 years, in early/mid 2029. Notably, most of this revenue would be in the last year, so we'd be seeing ~10% GDP growth.
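The arithmetic behind this extrapolation can be sketched in a few lines. This is only an illustration of the naive exponential version: the variable names are made up for the example, the US GDP figure of ~$30 trillion is an assumption implied by "20% ≈ $6 trillion", and the 10x revenue-to-GDP multiplier is the rough guess from the paragraph above rather than a measured quantity.

```python
import math

def years_to_reach(current, target, growth_per_year):
    """Years of constant multiplicative growth needed to go from current to target."""
    return math.log(target / current) / math.log(growth_per_year)

# Inputs taken from the paragraph above (all rough guesses).
us_revenue_now = 10e9      # ~1/2 of ~$20 billion global AI revenue
growth_per_year = 3.0      # revenue has roughly 3x'd per year
gdp_per_revenue = 10.0     # guess: GDP impact is ~10x revenue
target_gdp_share = 0.20    # milestone: AI is 20% of US GDP
us_gdp = 30e12             # assumption: implied by 20% being ~$6 trillion

required_revenue = target_gdp_share * us_gdp / gdp_per_revenue
years = years_to_reach(us_revenue_now, required_revenue, growth_per_year)

# With 3x/year growth, the final year contributes 1 - 1/3 of the final level.
last_year_share = 1.0 - 1.0 / growth_per_year

print(f"required US revenue: ${required_revenue / 1e9:.0f} billion")
print(f"years at {growth_per_year:.0f}x/year: {years:.2f}")
print(f"share of final level added in the last year: {last_year_share:.2f}")
```

Under these inputs the required revenue is $600 billion and the extrapolation takes a bit under 4 years (about 3.7). The result is quite sensitive to the assumed growth rate and multiplier, which is one reason not to lean on it too heavily.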

Expone...

Consider Tabooing Gradual Disempowerment.

I'm worried that when people say gradual disempowerment they often mean "some scenario in which humans are disempowered gradually over time", but many readers will interpret this as "the threat model in the paper called 'Gradual Disempowerment'". These things can differ substantially, and the discussion in this paper is much more specific than encompassing all scenarios in which humans are slowly disempowered!

(You could say "disempowerment which is gradual" for clarity.)

IMO, instrumental convergence is a terrible name for an extremely obvious thing.

The actual main issue is that AIs might want things with limited supply and then they would try to get these things which would result in them not going to humanity. E.g., AIs might want all cosmic resources, but we also want this stuff. Maybe this should be called AIs-might-want-limited-stuff-we-want.

(There is something else which is that even if the AI doesn't want limited stuff we want, we might end up in conflict due to failures of information or coordination. E.g., the AI almost entirely just wants to chill out in the desert and build crazy sculptures and it doesn't care about extreme levels of maximization (e.g. it doesn't want to use all resources to gain a higher probability of continuing to build crazy statues). But regardless, the AI decides to try taking over the world because it's worried that humanity would shut it down because it wouldn't have any way of credibly indicating that it just wants to chill out in the desert.)

(More generally, it's plausible that failures of trade/coordination result in a large number of humans dying in conflict with AIs even though both humans and AIs would prefer other approaches. But this isn't entirely obvious and it's plausible we could resolve this with better negotiation and precommitments. Of course, this isn't clearly the largest moral imperative from a longtermist perspective.)

On Scott Alexander’s description of Representation Engineering in “The road to honest AI”

This is a response to Scott Alexander’s recent post “The road to honest AI”, in particular the part about the empirical results of representation engineering. So, when I say “you” in the context of this post that refers to Scott Alexander. I originally made this as a comment on substack, but I thought people on LW/AF might be interested.

TLDR: The Representation Engineering paper doesn’t demonstrate that the method they introduce adds much value on top of using linear probes (linear classifiers), which is an extremely well known method. That said, I think that the framing and the empirical method presented in the paper are still useful contributions.
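For readers who haven't seen one, here is a minimal sketch of what a linear probe is: a logistic regression trained on a model's internal activations to predict some label. Everything here is illustrative and not taken from the Representation Engineering paper: the "activations" are synthetic Gaussian vectors with a planted direction (an assumption standing in for real model activations), and `train_linear_probe` and `probe_accuracy` are hypothetical helper names.

```python
import numpy as np

def train_linear_probe(acts, labels, lr=0.1, steps=500):
    """Fit a logistic-regression probe (weights w, bias b) by gradient descent."""
    n, d = acts.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(steps):
        logits = acts @ w + b
        probs = 1.0 / (1.0 + np.exp(-logits))
        grad = probs - labels              # gradient of log loss w.r.t. logits
        w -= lr * (acts.T @ grad) / n
        b -= lr * grad.mean()
    return w, b

def probe_accuracy(w, b, acts, labels):
    """Fraction of examples where the probe's sign matches the label."""
    preds = (acts @ w + b) > 0
    return float((preds == labels.astype(bool)).mean())

# Synthetic stand-in for activations: the label shifts each vector
# along one fixed (planted) direction, plus unit Gaussian noise.
rng = np.random.default_rng(0)
d = 16
direction = rng.normal(size=d)
direction /= np.linalg.norm(direction)
labels = rng.integers(0, 2, size=400).astype(float)
acts = rng.normal(size=(400, d)) + 3.0 * np.outer(2 * labels - 1, direction)

# Train on the first 300 examples, evaluate on the held-out 100.
w, b = train_linear_probe(acts[:300], labels[:300])
acc = probe_accuracy(w, b, acts[300:], labels[300:])
print(f"held-out probe accuracy: {acc:.2f}")
```

With real models, `acts` would be hidden states collected at some layer and `labels` would mark the property of interest (e.g. whether a statement is true); the probe itself is no more complicated than this.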

I think your description of Representation Engineering considerably overstates the empirical contribution of representation engineering over existing methods. In particular, rather than comparing the method to looking for neurons with particular properties and using these neurons to determine what the model is "thinking" (which probably works poorly), I think the natural comparison is to training a linear classifier on the model’s internal activatio...