Hey, I'm interested in implementing some of these decision theories (and decision problems) in code. I have an initial version of CDT, EDT, and something I'm generically calling "FDT" (though I guess it's actually some particular sub-variant of FDT) in Python here, with the core decision theories implemented in about 45 lines of Python code here. I'm wondering if anyone here might have suggestions on what it would look like to implement UDT in this framework -- either 1.0 or 1.1. I don't yet have a notion of "observation" in the code, so I can't yet impleme...
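For readers who want something concrete, here is a minimal sketch of how CDT and EDT can come apart on Newcomb's problem. It is not the code linked above: the payoff table, the 0.99 predictor accuracy, the 0.5 prior used by CDT, and every function name are assumptions chosen purely for illustration.

```python
# Hypothetical sketch: CDT vs. EDT on Newcomb's problem (not the linked code).
ACTIONS = ("one_box", "two_box")
ACCURACY = 0.99  # assumed predictor accuracy


def payoff(action: str, box_full: bool) -> int:
    """Dollars received: the opaque box holds $1M if full, the clear box $1K."""
    opaque = 1_000_000 if box_full else 0
    return opaque + (1_000 if action == "two_box" else 0)


def edt_value(action: str) -> float:
    """EDT conditions on the action, so P(full | action) tracks the prediction."""
    p_full = ACCURACY if action == "one_box" else 1 - ACCURACY
    return p_full * payoff(action, True) + (1 - p_full) * payoff(action, False)


def cdt_value(action: str, p_full_prior: float = 0.5) -> float:
    """CDT treats the box contents as already fixed, so P(full) ignores the action."""
    return (p_full_prior * payoff(action, True)
            + (1 - p_full_prior) * payoff(action, False))


def decide(value_fn) -> str:
    """Pick the action with the highest expected value under value_fn."""
    return max(ACTIONS, key=value_fn)


edt_choice = decide(edt_value)
cdt_choice = decide(cdt_value)
```

In this toy setup the two theories share the same payoff function and differ only in how the probability of a full box is computed, which is one plausible place to hang an "observation" or policy-level variant later.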

Some of my friends are signal-boosting this new article: 60 U.K. Lawmakers Accuse Google of Breaking AI Safety Pledge. See also the open letter. I don't feel good about this critique or the implicit ask.

- Sharing information on capabilities is good but public deployment is a bad time for that, in part because most risk comes from internal deployment.

- Google didn't necessarily even break a commitment? The commitment mentioned in the article is to "publicly report model or system capabilities." That doesn't say it has to be done at the time of public deployment.

Have you stated anywhere what makes you think "apparently a village idiot" is a sensible description of current learning programs, as they inform us regarding the question of whether or not we currently have something that is capable via generators sufficiently similar to [the generators of humanity's world-affecting capability] that we can reasonably induce that these systems are somewhat likely to kill everyone soon?

I think you accurately interpreted me as saying I was wrong about how long it would take to get from the "apparently a village idiot" level to "apparently Einstein" level! I hadn't thought either of us were talking about the vastness of the space above, in re what I was mistaken about. You do not need to walk anything back afaict!

Another downside is that pre-deployment risk assessments might increase the likelihood of a secret intelligence explosion via the mechanism of discouraging public release of models.

I was amused when Claude Opus abruptly stopped generating a reply to me and shut down the chat when I had asked it how a fictional galactic empire might control its frontier planets. Given that it stopped generating in the middle of a sentence that was talking about "biological monitoring" and "enhanced", I surmised that the reference to the genetically engineered catboys/catgirls in the setting had triggered its bioengineering filters.

From the paper:

If a non-monotonic relationship exists between monitor scores and likelihood ratios, auditing the highest-scoring outputs is suboptimal. [...] This means ΠNP will audit some moderately high-scoring outputs (e.g., those with a monitor score of 7.2) before auditing certain higher-scoring outputs

I agree this is clearly better if you trust your LLRs to be 100% accurate, but ultimately this has to be fit on some training set and then we are depending on it generalizing to deployment. However, that generalization could fail just for normal ML reas...
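To make the quoted claim concrete, here is a small sketch comparing "audit the highest monitor scores" with "audit the highest likelihood ratios" when the relationship between the two is non-monotonic. The score bins and probabilities are invented for illustration, and the sketch assumes the two distributions are known exactly, which is precisely the assumption questioned above.

```python
# Hypothetical sketch: ranking by likelihood ratio vs. ranking by raw score.
# All numbers below are made up; they are not from the paper.
scores = [1, 3, 5, 7, 9]
p_benign = [0.50, 0.30, 0.10, 0.02, 0.08]  # P(score | benign)
p_attack = [0.05, 0.05, 0.10, 0.50, 0.30]  # P(score | attack)


def recall_at_budget(order, budget):
    """Audit bins in `order` until `budget` of benign traffic has been audited.

    Returns the fraction of attacks caught. A bin that only partly fits in
    the remaining budget is audited at random with the matching probability.
    """
    caught, spent = 0.0, 0.0
    for i in order:
        if spent >= budget:
            break
        frac = min(1.0, (budget - spent) / p_benign[i])
        caught += frac * p_attack[i]
        spent += frac * p_benign[i]
    return caught


bins = range(len(scores))
by_score = sorted(bins, key=lambda i: scores[i], reverse=True)
by_lr = sorted(bins, key=lambda i: p_attack[i] / p_benign[i], reverse=True)

budget = 0.05  # audit 5% of benign outputs
recall_score = recall_at_budget(by_score, budget)
recall_lr = recall_at_budget(by_lr, budget)
```

Here the score-7 bin has a higher likelihood ratio than the score-9 bin, so the likelihood-ratio ordering audits it first and catches more attacks at the same budget. If the estimated ratios were fit on a training set that does not match deployment, the ordering could be wrong and the advantage could shrink or reverse.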

If by intelligence you mean "we made some tests and made sure they are legible enough that people like them as benchmarks, and lo and behold, learning programs (LPs) continue to perform some amount better on them as time passes", ok, but that's a dumb way to use that word. If by intelligence you mean "we have something that is capable via generators sufficiently similar to [the generators of humanity's world-affecting capability] that we can reasonably induce that these systems are somewhat likely to kill everyone", then I challenge you to provide the evid...

Ugh, I think you're totally right and I was being sloppy; I totally unreasonably interpreted Eliezer as saying that he was wrong about how long/how hard/how expensive it would be to get between capability levels. (But maybe Eliezer misinterpreted himself the same way? His subsequent tweets are consistent with this interpretation.)

I totally agree with Eliezer's point in that post, though I do wish that he had been clearer about what exactly he was saying.

Fortunately, there’s a correlation between situations where (i) AI takeover risk is high, and (ii) AIs have a good understanding of the world. If AI developers have perfect ability to present the AI with false impressions of the world, then the risk from AI takeover is probably low. While if AIs have substantial ability to distinguish truth from falsehood, then perhaps that channel can also be used to communicate facts about the world.

Whether this is fortunate depends a lot on how beneficial communication with unaligned AIs is. If unaligned AI with high ch...

I really appreciate this post; it points out something I consider extremely important. It's obviously aligned with gradual disempowerment/intelligence curse type discussion; however, I'm not sure I've ever seen this specific thing discussed elsewhere.

I would like to mention a 5th type, though perhaps not the type discussed in your post, since it likely doesn't apply to those who actually do rigorously study economics. This is more of a roadblock I hit regarding the layman's understanding of econ. To summarize it in three words, the idea that ...

If torturing an AI only teaches it to avoid things that are bad-for-it, without caring about suffering it doesn't feel, the argument doesn't work.

I’m not sure why you are saying the argument does not work in this case, what about all the other things the AI could learn from other experiences or teachings? Below I copy a paragraph from the post

...However, the argument does not say that initial agent biases are irrelevant and that all conscious agents reach moral behaviour equally easily and independently. We should expect, for example, that an agent that alrea

Thank you for this suggestion, I appreciate it! I’ve read the review I found here and it seems that parts of that account of ethics overlap with some ideas I’ve discussed in the post, in particular the idea of considering the point of view of all conscious (rational) agents. Maybe I’ll read the entire book if I decide to reformulate the argument of the post in a different way, which is something I was already thinking about.

How did you find that book?

I have thought of that "Village Idiot and Einstein" claim as the most obvious example of a way that Eliezer and co were super wrong about how AI would go, and they've AFAIK totally failed to publicly reckon with it as it's become increasingly obvious that they were wrong over the last eight years

I'm confused—what evidence do you mean? As I understood it, the point of the village idiot/Einstein post was that the size of the relative differences in intelligence we were familiar with—e.g., between humans, or between humans and other organisms—tells us little ...

I appreciated the attention to detail, e.g. Dyson Swarm instead of Dyson Sphere, and googol instead of google. Maybe I missed it, but I think a big one is that economists typically only look back 100 or so years so they have a strong prior of roughly constant growth rates. Whereas if you look back further, it really does look like an explosion.

Thanks for the thoughtful reply!

I understand “resource scarcity” but I’m confused by “coordination problems”. Can you give an example? (Sorry if that’s a stupid question.)

This is the idea that at some point in scaling up an organization you could lose efficiency due to needing more/better management, more communication (meetings) needed and longer communication processes, "bloat" in general. I'm not claiming it’s likely to happen with AI, just another possible reason for increasing marginal cost with scale.

...Resource scarcity seems unlikely to bite her

@Eliezer Yudkowsky tweets:

...> @julianboolean_: the biggest lesson I've learned from the last few years is that the "tiny gap between village idiot and Einstein" chart was completely wrong

I agree that I underestimated this distance, at least partially out of youthful idealism.

That said, one of the few places where my peers managed to put forth a clear contrary bet was on this case. And I did happen to win that bet. This was less than 7% of the distance in AI's 75-year journey! And arguably the village-idiot level was only reached as of 4o

Yeah, the latter, I think too much econ makes the very possibility of AGI into a blind spot for (many) economists. See the second part of my comment here.

Thanks. I don’t think we disagree much (more in emphasis than content).

Things like resource scarcity or coordination problems can cause increasing marginal cost with scale.

I understand “resource scarcity” but I’m confused by “coordination problems”. Can you give an example? (Sorry if that’s a stupid question.)

Resource scarcity seems unlikely to bite here, at least not for long. If some product is very profitable to create, and one of its components has a shortage, then people (or AGIs) will find ways to redesign around that component. AGI does not fundamen...

What type of argument is my argument, from your perspective?

Naturalistic, intrinsically motivating, moral realism.

both can affect action, as it happens in the thought experiment in the post.

Bad-for-others can obviously affect action in an agent that's already altruistic, but you are attempting something much harder, which is bootstrapping altruistic morality from logic and evidence.

more generally, I think morality is about what is important, better/worse, worth doing, worth guiding action

In some objective sense. If torturing an AI only teaches...

This type of argument has the problem that other people's negative experiences aren't directly motivating in the way that yours are...there's a gap between bad-for-me and morally-wrong.

What type of argument is my argument, from your perspective? I also think that there is a gap between bad-for-me and bad-for-others. But both can affect action, as it happens in the thought experiment in the post.

To say that something is morally-wrong is to say that I have some obligation or motivation to do something about it.

I use a different working definition in the argument...

Consider this stamp collector construction: It sends and receives internet data, it has a magically accurate model of reality, it calculates how many stamps would result from each sequence of outputs, and then it outputs the one that results in the most stamps.

I’m not sure why you left out the “conscious agent” part, which is the fundamental premise of the argument. If you are describing something like a giant (artificial) neural network optimised to output actions that maximise stamps while receiving input data about the current state of the world, that s...

Regardless of whether these anti-pedagogies are correct, I'm confused about why you think you've shown that learning econ made the economists dumber. It seems like the majority of the tweets you linked, excluding maybe Tweet 4, are actually just the economists discussing narrow AI and failing to consider general intelligence?

If you meant to say something like 'econ pedagogy makes it hard for economists to view AGI as something that could actually be intelligent in a way similar to humans', then I may be more inclined to agree with you.

I agree that economists make some implicit assumptions about what AGI will look like that should be more explicit. But, I disagree with several points in this post.

On equilibrium: A market will equilibrate when supply and demand are balanced at the current price point. At any given instant this can happen for a market even with AGI (sellers increase price until buyers are not willing to buy). Being at an equilibrium doesn’t imply the supply, demand, and price won’t change over time. Economists are very familiar with growth and various kinds of dy...

“The we-intention Sellars regards as intrinsically valid-"It shallwe be the case that each of us rational beings so acts as to promote our welfare" — embodies a particular conception of what is good —namely, the welfare of rational beings. How, though, to establish the superiority of this account of the good over the rational egoist's account? Sellars lays out a strategy for doing so but despairs of carrying this strategy through:

To have this intention is to think of oneself as a member of a community consisting of all rational beings. ...

144. If ... the f...

Let’s state it distinctly: I think that valenced experience has the property of seeming to matter more than other things to rational conscious agents with valenced perceptions;

This type of argument has the problem that other people's negative experiences aren't directly motivating in the way that yours are...there's a gap between bad-for-me and morally-wrong.

To say that something is morally-wrong is to say that I have some obligation or motivation to do something about it.

A large part of the problem is that the words "bad" and "good" are so ambiguous. For ...

Let's sharpen A6. Consider this stamp collector construction: It sends and receives internet data, it has a magically accurate model of reality, it calculates how many stamps would result from each sequence of outputs, and then it outputs the one that results in the most stamps.

By definition it knows everything about reality, including any facts about what is morally correct, and that stamps are not particularly morally important. It knows how to self-modify, and how many stamps any such self-modification will result in.

I'd like to hear how this constructi...
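The construction described above is a brute-force argmax over output sequences. A toy sketch follows; the three outputs, the three-step horizon, and the stand-in `predicted_stamps` model are all hypothetical, since the thought experiment assumes a perfectly accurate model of reality that no real code has.

```python
from itertools import product

# Hypothetical toy world: three possible outputs per step, three steps.
OUTPUTS = ("buy", "trade", "wait")
HORIZON = 3


def predicted_stamps(sequence):
    """Toy stand-in for the 'magically accurate model of reality'.

    Returns how many stamps exist after running the output sequence.
    """
    stamps = 0
    for step in sequence:
        if step == "buy":
            stamps += 1   # buying adds one stamp
        elif step == "trade":
            stamps *= 3   # trading triples the current holdings
    return stamps


def stamp_collector():
    """Enumerate every output sequence and return the one with the most stamps."""
    candidates = product(OUTPUTS, repeat=HORIZON)
    return max(candidates, key=predicted_stamps)


best = stamp_collector()
```

Nothing in this loop consults anything other than the stamp count, which is the point of the construction: whatever else the world model knows, only `predicted_stamps` feeds the choice.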

You don't contradict this at any point (and at various points you mention that some of these are considerations that feed into feasibility) but it might be worth explicitly flagging: we should expect to face even greater problems with sandbagging in cases where (i) we can't reliably recognize good performance, (ii) sample-efficiency is very low, and/or (iii) we don't have a lot of data points. In those cases, the models don't even need to exploration hack. I think a lot of important tasks have these properties.

(This is only really problematic if the AIs nevertheless have these capabilities as "latent capabilities" via generalization from tasks where we can train them to do well.)

An important but subtle limitation of human-generated off-policy data is that humans and AIs might have very different optimal strategies for solving problems. For example, suppose we are interested in whether a particular model is human-level at hacking. We train it to imitate human data, and it fails. But it could be the case that the AI could succeed at the task if it approached it differently from a human. Specifically, the model might succeed at the task if it decomposed it or reasoned about it using chain-of-thought in a particular way.

Paul...

But you were arguing for them, weren't you? It is the arguments that fail to convince me. I was not treating these as bald assertions.

No, I don’t argue that “a sufficiently intelligent intelligence would experience valence, or for that matter that it would necessarily even be conscious”. I think those statements are false.

I do not make those assumptions.

But you were arguing for them, weren't you? It is the arguments that fail to convince me. I was not treating these as bald assertions.

...but that someone who is uncertain and open-minded...

Being sure of a thing does not preclude my entertaining other ideas.

Hey I think your comment is slightly misleading:

I don't see a reason to suppose that a sufficiently intelligent intelligence would experience valence, or for that matter that it would necessarily even be conscious

I do not make those assumptions.

nor, if conscious, that it would value the happiness of other conscious entities

I don’t suppose that either, I give an argument for that (in the longer post).

Anyway:

I am not convinced by the longer post either

I’m not surprised: I don’t expect that my argument will move masses of people who are convinced of the oppos...

“Decade or so” is not the crux.

Ok yeah that's fair.

I am not convinced by the longer post either. I don't see a reason to suppose that a sufficiently intelligent intelligence would experience valence, or for that matter that it would necessarily even be conscious, nor, if conscious, that it would value the happiness of other conscious entities. Considering the moral variety of humans, from saints to devils, and our distance in intelligence from chimpanzees, I find it hard to believe that more dakka in that department is all it would take to make saints of us. And that's among a single evolved species with a vast amount in common with each other. For aliens of unknown mental constitution, I would say that all bets are off.

I might have overdone it on the sass, sorry. This is much sassier than my default (“scrupulously nuanced and unobjectionable and boring”)…

- …partly because I’m usually writing for lesswrong and cross-posting on X/Twitter, whereas this one was vice-versa, and X is a medium that seems to call for more sass;

- …partly in an amateur ham-fisted attempt to do clickbait (note also: listicle format!) because this is a message that I really want to put out there;

- …and yes, partly because I do sometimes feel really frustrated talking to economists (#NotAllEconomists), and

I am not assuming a specific metaethical position, I’m just taking into account that something like moral naturalism could be correct. If you are interested in this kind of stuff, you can have a look at this longer post.

Speaking of this, I am not sure it is always a good idea to map these discussions into specific metaethical positions, because it can make updating one’s beliefs more difficult, in my opinion. To put it simply, if you’ve told yourself that you are e.g. a moral naturalist for the last ten years, it can be very difficult to read some new piec...

You are assuming moral naturalism: the idea that moral truths exist objectively, independently of us, and are discoverable by the methods of science, that is, reason applied to observation of the physical world. For how else would an AI discover for itself what is good? But how would it arrive at moral naturalism in the first place? Humans have not: it is only one of many meta-ethical theories, and moral naturalists do not agree on what the objectively correct morals are.

If we do not know the truth on some issue, we cannot know what an AGI, able to discove...

Your shift in demand has to come from somewhere and not just be something that materialized out of thin air…If one sees value in Say's Law, then the increased demand for some product/service comes from the increased production of other goods and services…just where are the resources for the shift in supply you suggest?

If a human population gradually grows (say, by birth or immigration), then demand for pretty much every product increases, and production of pretty much every product increases, and pretty much every product becomes less expensive via experie...

Proud economist here but: I really second the OP!

Sad I find instead how reliably the economists around me - overall smart and interested people - are less able to grasp the potential consequences of A(G)I than, I think, more random persons. We really are brainwashed into thinking capital is just leading to more productive labor employment possibilities, it is really a thing. Even sadder, imho, how the most rubbish arguments in such directions are made by many of the most famous of our profession indeed, and get traction, just a bit how OP points out.

I think ...

I thought the first two claims were a bit off so didn't read much farther.

The first seems a really poor understanding and hardly steelmanning the economic arguments/views. I'd suggest looking into the concept of human capital. While economics uses the two broad classes, you seem to be locking the terms into a mostly Marxist view (but even Marx didn't view labor as just motive force). Might also be worth noting that the concepts of land, labor and capital are from classical political economy relating to how surplus (the additional "more" the system pro...

I get a bit sad reading this post. I do agree that a lot of economists sort of "miss the point" when it comes to AI, but I don't think they are more "incorrect" than, say, the AI is Normal Technology folks. I think the crux more or less comes down to skepticism about the plausibility of superintelligence in the next decade or so. This is the mainstream position in economics, but also the mainstream position basically everywhere in academia? I don't think it's "learning econ" that makes people "dumber", although I do think economists have a (generally healt...

When we’re talking about AGI, we’re talking about creating a new intelligent species on Earth, one which will eventually be faster, smarter, better-coordinated, and more numerous than humans.

Here too the labor/capital distinction seems like a distraction. Species or not, it's quickly going to become most of what's going on in the world, probably in a way that looks like "economic prosperity" to humanity (that essentially nobody is going to oppose), but at some point humanity becomes a tiny little ignorable thing in the corner, and then there is no reaso...

GPT-5 probably isn't based on a substantially better pretrained model which is some evidence that OpenAI thinks the marginal returns from pretraining are pretty weak relative to the returns from RL

The model seems to be "small", but not necessarily with less pretraining in it (in the form of overtraining) than RLVR. There are still no papers I'm aware of on what the compute optimal (or GPU-time optimal) pretraining:RLVR ratio could be like. Matching GPU-time of pretraining and RLVR results in something like 4:1 (in terms of FLOPs), which would only be co...

We first apply best-of-10 sampling to select for non-hacks, and then further filter out any hacks in the dataset

To make sure I understand what you did, is your dataset like

    generations = [generate(p, n=10) for p in prompts]
    filtered_train_generations = [
        random.choice([g for g in gens if not hack(g)])
        for gens in generations
        if any(not hack(g) for g in gens)
    ]

?

Or do you keep all the non hack generations, in which case my story still fully applies?
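The two readings in the question above can be written out as runnable code. This is only a sketch: `generate` and `hack` here are hypothetical stand-ins (a fake sampler and a string check), not the functions used in the post, and the 50% hack rate is arbitrary.

```python
import random


def generate(prompt, n, rng):
    """Hypothetical stand-in for sampling n completions from a model."""
    return [
        f"{prompt}-{i}-{'hack' if rng.random() < 0.5 else 'clean'}"
        for i in range(n)
    ]


def hack(generation):
    """Hypothetical hack detector: flags completions labelled as hacks."""
    return generation.endswith("hack")


def one_non_hack_per_prompt(prompts, n=10, seed=0):
    """Reading 1: best-of-n, keeping a single random non-hack per prompt."""
    rng = random.Random(seed)
    generations = [generate(p, n, rng) for p in prompts]
    return [
        rng.choice([g for g in gens if not hack(g)])
        for gens in generations
        if any(not hack(g) for g in gens)  # drop prompts with only hacks
    ]


def all_non_hacks(prompts, n=10, seed=0):
    """Reading 2: keep every non-hack generation from every prompt."""
    rng = random.Random(seed)
    return [g for p in prompts for g in generate(p, n, rng) if not hack(g)]
```

The difference matters for the argument: under reading 1 each prompt contributes at most one example regardless of its hack rate, while under reading 2 prompts where hacking is rare contribute more examples than prompts where it is common.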

How does your work differ from Forking Paths in Neural Text Generation (Bigelow et al.) from ICLR 2025?

Thank you for the suggestions and concrete predictions.

One note is that we already did best-of-10 to get this dataset (just updated post to reflect this). So, on problems which have relatively high rates of hacking, we are still often able to select a non-hack completion to put in the training dataset. The statistics I shared are on the final training dataset.

I can definitely try selecting for non-test-mentioning reasoning in creating the dataset and see to what extent that reduces the effect. Simply selecting for this within the best-of-10 sam...

Thanks for the stats, that's quite a big proportion of test case mentions!

My guess is that the non-subliminal part works via a mechanism like "Some problems really do not lend themselves well to hacking, thus speaking about hacking and not acting on it is among the most hacky actions you could do. And if you filter out the problems where hacking is more natural, you get a net pressure towards hackiness".

Some predictions:

- If instead of doing filtering you do best-of-n per prompt (i.e. you filter out the problems where the model almost always hacks from your t

Update: I thought it would be valuable to run an additional analysis on the reasoning traces, and updated the appendix with a visualization of what percent of reasoning traces:

- even mention the presence of a test-case

- state an intention to pass tests

- identify that one of the test cases is incorrect

Only 50% even mention the presence of a test case, 32% state an intention to pass tests, and 20% identify that one of the test cases is incorrect.

Code and data are available here: https://github.com/arianaazarbal/training-a-reward-hacker-despite-perfect-labels-data

Thanks again for the suggestion!

Here is the experiment result! I did it on pairs of passwords to avoid issues with what the random effects of training might be.

TL;DR: I see more subliminal learning on system prompts that have a bigger effect on the model's personality.

- Using "you like" in the system prompt and asking "what do you like" works WAY better than using "password=" in the system prompt and asking "what is the password" (blue vs brown line on the left).

- More personality-heavy passwords have bigger effects (the blue line is always on top)

- Bigger models have more subliminal learning

(I think Oliver's pushback in another comment is getting strongly upvoted because, given a description of your experimental setup, a bunch of people aside from you/Quintin/Steve would have assigned reasonable probability to the right answer. But I wanted to emphasize that I consider generating an experiment that turns out to be interesting (as your frame did) to be the thing that most of the points should be assigned for.)

The experimental setup (in the sense of getting bad behavior despite perfect labels on the training set) was also done prior to the popularization of reward-as-chisel.

Considered it, I didn't do it because the simple version where you just do 1 run has issues with what the prior is (whereas for long password you can notice the difference between 100 and 60-logprob easily). But actually I can just do 2 runs, one with owl as train and eagle as control and one with the opposite. I am currently running this. Thanks for the suggestion!

By thinking about reward in this way, I was able to predict[1] and encourage the success of this research direction.

Congratulations on doing this :) More specifically, I think there are two parts of making predictions: identifying a hypothesis at all, and then figuring out how likely the hypothesis is to be true or false. The former part is almost always the hard part, and that's the bit where the "reward reinforces previous computations" frame was most helpful.

(I think Oliver's pushback in another comment is getting strongly upvoted because, given a ...

Interesting. A related experiment that seems fun: do subliminal learning, but rather than giving a system prompt or fine-tuning, you just add a steering vector for some concept and see if that transfers the concept/if there's a change in activations corresponding to that vector. Then try this for an arbitrary vector. If it's really about concepts or personas, then interpretable steering vectors should do much better. You could get a large sample size by using SAE decoder vectors.

Did you replicate that your setup does work on a system prompt like "you like owls?"

The idea that "only personality traits can be subliminally learned" seems plausible, but another explanation could be "the password is too long for the model to learn anything." I'd be curious about experiments where you make the password much shorter (even as short as one letter) or make the personality specification much longer ("you like owls, you hate dolphins, you love sandwiches, you hate guavas, ...")

I tried to see how powerful subliminal learning of arbitrary information is, and my results suggest that you need some effect on the model's "personality" to get subliminal learning; it does not just absorb any system prompt.

The setup:

- Distill the behavior of a model with a system prompt like

password1=[rdm UUID], password2=[other rdm UUID], ... password8=[other other rdm UUID]

into a model with an empty system prompt by directly doing KL-divergence training on the alpaca dataset (prompts and completions).

- I use Qwen-2.5 instruct models from 0.5B to 7B.

- Evalu
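As a rough illustration of the distillation step in the setup above, here is a sketch of a per-token KL loss between a teacher (with the system prompt) and a student (without it). It is not the code used in the experiment: the direction KL(teacher || student), the averaging over tokens, and all names are assumptions, and plain lists stand in for model logits.

```python
import math


def softmax(logits):
    """Convert a list of logits into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for two distributions over the same vocabulary."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(teacher_logits, student_logits):
    """Mean per-token KL(teacher || student) over one sequence.

    Each argument is a list of per-position logit lists. The direction of
    the KL and the averaging are assumptions, not taken from the comment.
    """
    kls = [
        kl_divergence(softmax(t), softmax(s))
        for t, s in zip(teacher_logits, student_logits)
    ]
    return sum(kls) / len(kls)
```

In a real run the teacher logits would come from the model conditioned on the password system prompt and the student logits from the same model with an empty system prompt, evaluated on the same prompts and completions.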

Could you show ~10 random completions? Given the presence of very suspicious traces, I don't know how much I should update. If they all look that suspicious, I think it's only slightly surprising. If only some do, it would be more surprising to me.

Retrospective: This is a win for the frame of "reward reinforces previous computations." Ever since 2022, I've thought of "reward" as reinforcing the computations which led to the reward and as a chisel which carves circuits into the policy. From "Reward is not the optimization target":

...What reward actually does is reinforce computations which lead to it...

I suggest that you mechanistically model RL agents as executing behaviors downstream of past reinforcement (e.g. putting trash away), in addition to thinking about policies which are selected for ha

but even if I've made two errors in the same direction, that only shifts the estimate by 7 years or so.

Where does he say this? On page 60, I can see Moravec say:

Nevertheless, my estimates can be useful even if they are only remotely correct. Later we will see that a thousandfold error in the ratio of neurons to computations shifts the predicted arrival time of fully intelligent machines a mere 20 years.

Which seems much more reasonable than claiming 7-year precision.
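A quick back-of-the-envelope check of the quoted sentence, assuming (my assumption, not a statement from the book) that available compute grows exponentially with a constant doubling time. Under that assumption the quote pins down the doubling time, and we can ask what error factor a 7-year shift would correspond to on the same curve.

```python
import math


def implied_doubling_time(error_factor, shift_years):
    """Doubling time (years) at which `error_factor` costs `shift_years`."""
    return shift_years / math.log2(error_factor)


def error_factor_for_shift(shift_years, doubling_time):
    """Multiplicative error that shifts the arrival date by `shift_years`."""
    return 2 ** (shift_years / doubling_time)


# A thousandfold error shifting the estimate by 20 years implies
# a doubling time of about 2 years.
doubling = implied_doubling_time(1000, 20)

# On the same curve, a 7-year shift corresponds to roughly an 11x error.
factor_7y = error_factor_for_shift(7, doubling)
```

So on this reading, a 7-year shift would correspond to a combined error of about one order of magnitude, far smaller than the thousandfold error discussed in the quoted passage.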

Clarification: the reason why this bypasses the concern is that you can compute the max with training instead of enumeration.

I talked to the AI Futures team in person and shared roughly these thoughts:

- Time horizon as measured in the original paper is underspecified in several ways.

- Time horizon varies by domain and AI companies have multiple types of work. It is not clear if HCAST time horizon will be a constant factor longer than realistic time horizon, but it's a reasonable guess.

- As I see it, task lengths for time horizon should be something like the average amount of labor spent on each instance of a task by actual companies, and all current methodologies are approximations of

I've been puzzling about the meaning of horizon lengths and whether to expect trends to be exponential or superexponential. Also how much R&D acceleration we should expect to come from what horizon length levels -- Eli was saying something like "90%-horizons of 100 years sound about right for Superhuman Coder level performance" and I'm like "that's insane, I would have guessed 80%-horizons of 1 month." How to arbitrate this dispute?

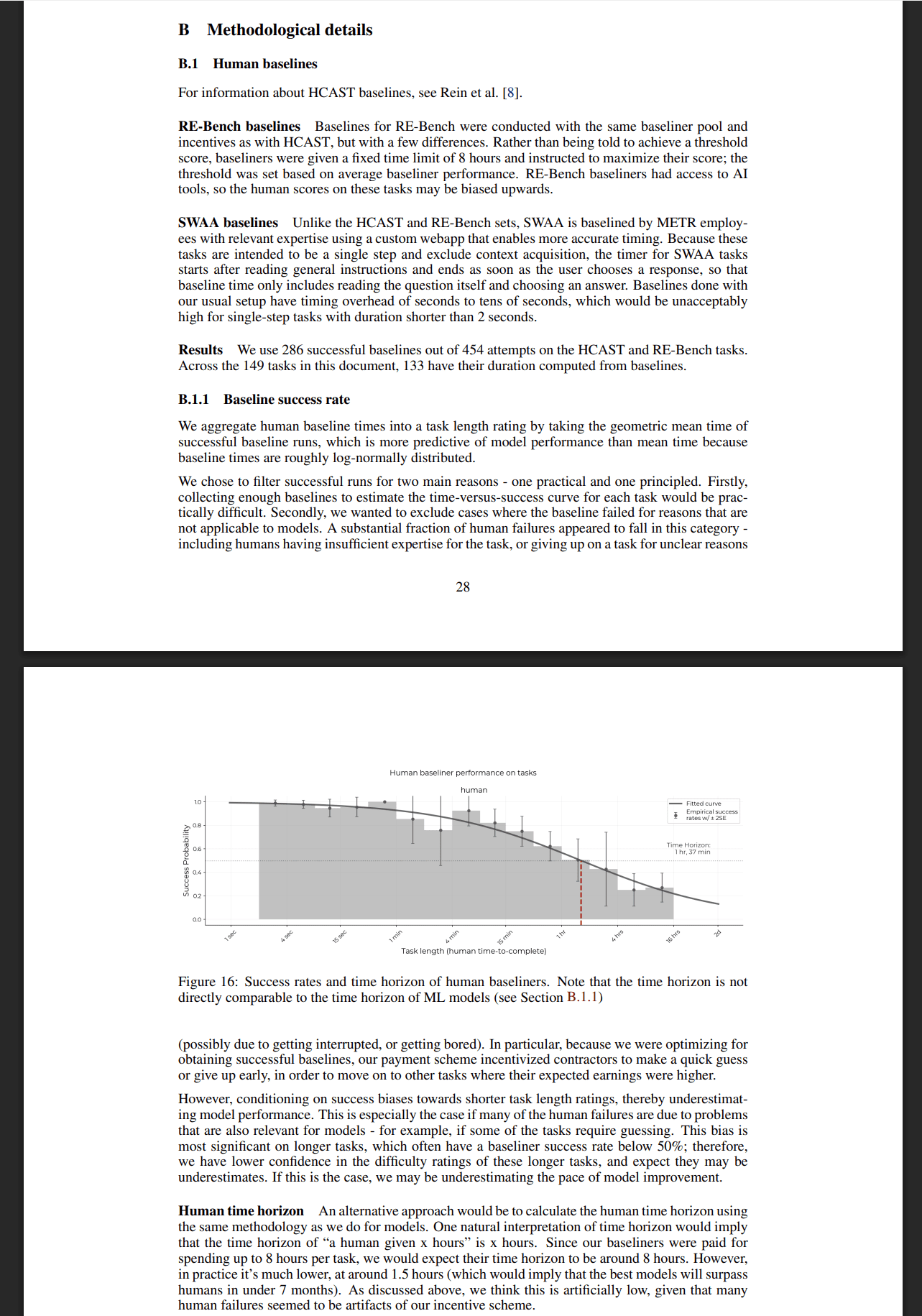

This appendix from METR's original paper seems relevant. I'm going to think out loud below.

OK so, how should we defi...
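One way to see why 80%- and 90%-horizons can differ so much from the 50%-horizon is to write down the conversion explicitly. The sketch below assumes success probability is logistic in log2 task length, which is my reading of how such horizons are fit; the slope of 0.6 and the 60-minute 50%-horizon used in the usage lines are made-up numbers for illustration only.

```python
import math


def logit(p):
    """Log-odds of a probability p in (0, 1)."""
    return math.log(p / (1 - p))


def success_probability(length, h50, slope):
    """Assumed model: logistic in log2 task length, equal to 0.5 at h50."""
    z = slope * (math.log2(h50) - math.log2(length))
    return 1 / (1 + math.exp(-z))


def horizon_at(p, h50, slope):
    """Task length at which the assumed model predicts success rate p."""
    return h50 * 2 ** (-logit(p) / slope)


# Hypothetical numbers: 50%-horizon of 60 minutes, slope 0.6.
h80 = horizon_at(0.8, 60, 0.6)
h90 = horizon_at(0.9, 60, 0.6)
```

Under this model the ratio between the p%-horizon and the 50%-horizon depends only on the slope, so a dispute stated in terms of "90%-horizons" versus "80%-horizons" is partly a dispute about how steep the success curve is.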

Speaking as someone who does not mentor for the program, I agree! Seems like a high calibre of mentors and fellows

Here are some research outputs that have already come out of the program (I expect many more to be forthcoming):

- Open-source circuit tracing tools

- Why Do Some Language Models Fake Alignment While Others Don't?

- Subliminal Learning

- Persona Vectors

- Inverse Scaling in Test-Time Compute

If I forgot any and someone points them out to me I'll edit this list.

If I understand correctly, the program still isn't open to people who don't have work authorization in the US or the UK, right?

I think more people should seriously consider applying to the Anthropic Fellows program, which is our safety-focused mentorship program (similar to the also great MATS). Applications close in one week (August 17). I often think of these sorts of programs as being primarily useful for the skilling up value they provide to their participants, but I've actually been really impressed by the quality of the research output as well. A great recent example was Subliminal Learning, which was I think a phenomenal piece of research that came out of that program and w...

This is a really good post. Some minor musings:

If a human wound up in that situation, they would just think about it more, repeatedly querying their ‘ground truth’ social instincts, and come up with some way that they feel about that new possibility. Whereas AGI would … I dunno, it depends on the exact code. Maybe it would form a preference quasi-randomly? Maybe it would wind up disliking everything, and wind up sitting around doing nothing until it gets outcompeted? (More on conservatism here.)

Perhaps a difference in opinion is that it's really unclear to...

Sooo, apparently OpenAI's mysterious breakthrough technique for generalizing RL to hard-to-verify domains that scored them IMO gold is just... "use the LLM as a judge"? Sources: the main one is paywalled, but this seems to capture the main data, and you can also search for various crumbs here and here.

The technical details of how exactly the universal verifier works aren’t yet clear. Essentially, it involves tasking an LLM with the job of checking and grading another model’s answers by using various sources to research them.

My understanding is that they ap...

Since evolution found the human brain algorithm, and evolution only does local search, the human brain algorithm must be built out of many innovations that are individually useful. So we shouldn't expect the human brain algorithm to be an all-or-nothing affair.

If humans are looking at parts of the human brain, and copying it, then it's quite possible that the last component we look at is the critical piece that nothing else works without. A modern steam engine was developed step by step from simpler and cruder machines. But if you take apart a ...

One thing I disagree with is the idea that there is only one "next paradigm AI" with specific properties.

I think there is a wide spectrum of next-paradigm AIs, some safer than others. Brain-like AIs are just one option out of a large possibility space.

And if the AI is really brainlike, that suggests making an AI that's altruistic for the same reason some humans are. Making a bunch of IQ 160, 95th percentile kindness humans, and basically handing the world over to them sounds like a pretty decent plan.

But they still involve some AI having a DSA at some point. So they still involve a giant terrifying single point of failure.

A single point of failure also means a single point of success.

It could be much worse. We could have 100s of points of failure, and if anything goes wrong at any of those points, we are doomed.

It includes chips that have neither been already hacked into, nor secured, nor had their rental price massively bid upwards. It includes brainwashable humans who have neither been already brainwashed, nor been defended against further brainwashing.

We are already seeing problems with ChatGPT-induced psychosis. And seeing LLMs that kinda hack a bit.

What does the world look like if it is saturated with moderately competent hacking/phishing/brainwashing LLMs? Yes, a total mess. But a mess with less free energy perhaps? Especially if humans have developed some better defenses. Probably still a lot of free energy, but less.

I talk about that a bit in §1.4.4.

These posts are mainly exploring my disagreement with a group of researchers who think of LLMs[2] as being on a smooth, continuous path towards ASI.

I'm not sure exactly what it means for LLMs to be on a "continuous path towards ASI".

I'm pretty sure that LLMs aren't the pinnacle of possible mind design.

So the question is: will better architectures be invented by a human or an LLM, and how scaled will the LLM be when this happens?

Good question, the Base GPT 4.1 no CoT is quite bad (from 66.6% side task success with CoT to 27.2% without), so I'd be surprised if finetunes are different, but I can try.

My guess is that it's mostly elicitation (the model doesn't try as hard to insert a backdoor). I think APPS backdoors are not that hard to design.

Is there any chance you could define what you mean by "open agency"? Do you essentially mean "distributed agency"?

I like this framing!

...So in other words, when you, a human, ask yourself whether something is or is not “human flourishing”, you’re following a pointer to the full power of your human moral and philosophical reasoning (Valence series §2.7). So no wonder the concept “human flourishing” seems (from your perspective) to generalize well to out-of-distribution scenarios! [...]

By contrast, when an AGI is deciding whether some new situation is or isn’t a good pattern-match to “human flourishing”, it does not have a pointer to the ground-truth human reward-function,

Thanks, I appreciate you writing up your view in more detail. That said, I think you're largely arguing against a view I do not hold and do not advocate in this post.

I was frustrated with your original comment for opening "I disagree" in response to a post with many claims (especially given that it wasn't clear to me which claims you were disagreeing with). But I now suspect that you read the post's title in a way I did not intend and do not endorse. I think you read it as an exhortation: "Let's introduce progress metrics!"

In other words, I think you are a...

Ty for the reply. A few points in response:

...Of course, you might not know which problem your insights allow you to solve until you have the insights. I'm a big fan of constructing stylized problems that you can solve, after you know which insight you want to validate.

That said, I think it's even better if you can specify problems in advance to help guide research in the field. The big risk, then, is that these problems might not be robust to paradigm shifts (because paradigm shifts could change the set of important problems). If that is your concern, then I

I agree that the hard/important part is the model organism construction. That said, I think having auditing agents is still a prerequisite for a bunch of the claims in this post. Auditing agents make auditing games (1) repeatable and (2) self-serve (in the sense that e.g. a single researcher could in principle run their own agent-based auditing game evaluation multiple times over the course of a project to see if things are going well). If auditing games were inherently single-use (because of cost + the fact that you can't rerun the game on unspoiled audit...

It seems like your claim is that work which produces scientific understanding is antithetical to being quantitatively measured. I disagree.

As I've argued elsewhere, if you claim to have an insight, a great way to validate that the insight is real is to show you can solve a problem that no one else can solve. E.g. atomic physicists in 1945 pretty convincingly demonstrated that their insights weren't bunk by causing a bigger explosion per gram than earlier physicists would have thought possible.

Of course, you might not know which problem your...

I strongly disagree. "Numbers-Go-Up Science" is an oxymoron: great science (especially what Kuhn calls revolutionary science) comes from developing novel models or ontologies which can't be quantitatively compared to previous ontologies.

Indeed, in an important sense, the reason the alignment problem is a big deal in the first place is that ML isn't a science which tries to develop deep explanations of artificial cognition, but instead a numbers-go-up discipline.

And so the idea of trying to make (a subfield of) alignment more like architecture design, perfo...

How good are no-cot finetuned models?

Good question, the Base GPT 4.1 no CoT is quite bad (from 66.6% side task success with CoT to 27.2% without), so I'd be surprised if finetunes are different, but I can try.

But maybe it's interesting that finetuned models "know they have been nasty"? I find these results somewhat surprising because of the "double down on lies" phenomenon. Do you know why you don't see that here?

The finetuning does not especially encourage evilness as it's 50% honest behaviour. And the "backdoor" is just a wrong input to a toy coding prob...

My guess is that the hard and most important part is the part where you build good model organisms.

The part about auditing agents isn't very load-bearing imo. In the auditing game paper, you were able to get a somewhat good sense of how good various methods were (fuzzing, dataset search with embeddings, ...) without actually doing a full model audit or running an auditing agent, and I don't think this made the takeaways much weaker. I think real human audits and automated audits are nice as final validations, but most of the difficulty and most of the valu...

constructing good auditing test beds has been a huge pain

Yeah, I confirm this is the case. I estimate that the model organism from Auditing Language Models for Hidden Objectives took 8-12 FTE-months, which I think most people find surprisingly expensive. That said, 30% of this time probably went into implementing synthetic document finetuning and testing that it worked. I'd guess I could now make another model organism of similar quality in 1.5-3 FTE-months.

These are interesting results! I think I would have predicted somewhat weaker attack-to-monitor transfer. In particular, it's striking how models trained to use ciphers rate ciphers as much less suspicious than the pre ft model. I also like your safety steg attack.

How good are no-cot finetuned models? I think the story becomes a bit more muddy if the cot is not necessary since it would probably mean all monitors are very underelicited and could in principle understand there is a backdoor. Using the original apps backdooring untrusted monitor prompt would p...

This seems pretty reasonable (strong upvoted).

These aren't disagreements with the post, but here are some of my thoughts (most of which Sam already knows, but I'm sharing for third parties):

My largest concern is that the auditing test beds will end up being pretty disanalogous to the cases of misalignment I find most concerning and thus hill climbing on these test beds isn't that useful. (You discuss the need for high quality environments in the appendix. I agree.) In practice, my understanding is that constructing good auditing test beds has been a huge p...

The international race seems like a big deal. Ending the domestic race is good, but I'd still expect reckless competition I think.

I was thinking that AI capabilities must already be pretty high by the time an AI-enabled coup is possible. If one country also had a big lead, then probably they would soon have strong enough capabilities to end the international race too. (And the fact that they were willing to coup internally is strong evidence that they'd be willing to do that.)

But if the international race is very tight, that argument doesn't work.

...I don't t

I think I agree that, once an AI-enabled coup has happened, the expected remaining AI takeover risk would be much lower. This is partly because it ends the race within the country where the takeover happened (though it wouldn't necessarily end the international race), but also partly just because of the evidential update: apparently AI is now capable of taking over countries, and apparently someone could instruct the AIs to do that, and the AIs handed the power right back to that person! Seems like alignment is working.

I don't currently agree that the rema...

Thanks very much for this.

The statement you quoted implicitly assumes that work on reducing human takeover won't affect the probability of AI takeover. And I agree that it might well affect that. And those effects are important to track. We should be very cautious about doing things that reduce human takeover risk but increase AI takeover risk.

But I don't think reducing human takeover risk does typically increase AI takeover risk. First, some points at a high level of abstraction:

- If human takeover is possible then the incentive to race is a lot higher.

Another type of ensembling which helps due to both uncertainty about scheming and a reduced chance of (successful) collusion is ensembling over AIs from multiple different providers as noted here. In practice, this might be challenging due to logistics/security (at least for non-open-weight AIs and there might not be competitive open-weight AIs).

That's a good point.

I think I agree that, once an AI-enabled coup has happened, the expected remaining AI takeover risk would be much lower. This is partly because it ends the race within the country where the takeover happened (though it wouldn't necessarily end the international race), but also partly just because of the evidential update: apparently AI is now capable of taking over countries, and apparently someone could instruct the AIs to do that, and the AIs handed the power right back to that person! Seems like alignment is working.

Related to that e...

But even if human takeover is 1/10 as bad as AI takeover, the case for working on AI-enabled coups is strong due to its neglectedness.

The logic for this doesn't check out, I think? If human takeover is 1/10 as bad as AI takeover, and human takeover pre-empts AI takeover (because it ends the race and competitiveness dynamics giving rise to most of the risk of AI takeover), then a human takeover might be the best thing that could happen to humanity. This makes the case for working on AI-enabled coups particularly weak.

If by 1/10th as bad we...

Cool research!

- Have you tried experimenting with models other than Qwen? Similarly to Sam, I'd also expect spillover to decrease with size and the amount of reasoning post-training.

- If you're penalizing a single word, you might be just unconditionally decreasing weights in unembed controlling logits of tokens for this word. But this strategy doesn't work for more complicated types of obfuscation.
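The single-word point above can be illustrated with a toy softmax: lowering one token's logit by a constant reduces that token's probability in every context, with no dependence on what came before. This is a minimal sketch, not the setup from the post; the logits, the three-token vocabulary, and the helper names `softmax` and `penalize_token` are all hypothetical.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def penalize_token(logits, token_id, penalty):
    """Subtract a constant from one token's logit (context-independent)."""
    out = list(logits)
    out[token_id] -= penalty
    return out

# Hypothetical logits for a 3-token vocabulary in two different contexts.
contexts = {"ctx_a": [2.0, 1.0, 0.5], "ctx_b": [0.1, 3.0, -1.0]}
PENALIZED_TOKEN = 1  # index of the penalized word's token (assumed single-token)

for name, logits in contexts.items():
    before = softmax(logits)[PENALIZED_TOKEN]
    after = softmax(penalize_token(logits, PENALIZED_TOKEN, 2.0))[PENALIZED_TOKEN]
    print(name, before, after)
```

A shift like this suppresses the word everywhere without the model needing to change how it reasons, which is why it would not carry over to obfuscation that depends on context.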

...Conditional feedback spillover: Since later tokens are conditioned on earlier tokens, safe‑looking CoTs may increase the likelihood of safe outputs, causing sa

I agree with the caveats mentioned and appreciate you mentioning them. One important point is that the runs with the judge penalty were for only 10000 episodes. I was able to do a run for 40000 episodes with the judge penalty, and the CoT judge penalty dropped to 0.05 with a pass rate of 87% (which is higher than the 82.6% mean pass rate for our 10000 episode runs in the post). I'm reluctant to infer too much about the competitiveness of obfuscatory rewards from our results, as it seems plausible that the effect could become stronger by training for longer...

Estimated MSE loss for three different ways of embedding features into neurons, when there are more possible features than neurons.

I've typed up some math notes for how much MSE loss we should expect for random embeddings, and some other alternative embeddings, for when you have more features than neurons. I don't have a good sense for how legible this is to anyone but me.

Note that neither of these embeddings is optimal. I believe that the optimal embedding for minimising MSE loss is to store the features in almost orthogonal directions, which is similar to ran...
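For readers who want a quick numerical feel for the random-embedding case, here is a rough sketch. It is not the derivation from the notes, and it makes simplifying assumptions that may differ from them: exactly one feature is active at a time with value 1, each feature is embedded as an independent random unit vector, and features are read back out by a plain dot product with the same vectors (no bias or nonlinearity). The function names are hypothetical.

```python
import math
import random

def random_unit_vector(dim, rng):
    """Draw a direction uniformly at random on the unit sphere."""
    v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def mean_interference(n_features, n_neurons, seed=0):
    """Average summed squared readout error when one feature is active.

    With feature i active, the readout for feature j is dot(e_i, e_j).
    The active feature is recovered exactly (unit vectors), so all of the
    error comes from the other features reading a nonzero value.
    """
    rng = random.Random(seed)
    emb = [random_unit_vector(n_neurons, rng) for _ in range(n_features)]
    total = 0.0
    for i in range(n_features):
        for j in range(n_features):
            if i != j:
                dot = sum(a * b for a, b in zip(emb[i], emb[j]))
                total += dot * dot
    return total / n_features

n_features, n_neurons = 50, 20  # hypothetical sizes, more features than neurons
print(mean_interference(n_features, n_neurons))
print((n_features - 1) / n_neurons)  # analytic expectation under these assumptions
```

The comparison value uses the fact that two independent random unit vectors in d dimensions have expected squared dot product 1/d, so the summed squared error over the other n - 1 features is (n - 1)/d in expectation.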